How Particle Swarm Optimization Works: A Step-by-Step Guide

Keywords are the way we “mere mortals” optimize for what we know people use in search.

The machines that run the results and ranking rely on algorithm search ranking factors.

Fortunately for the humans trying to solve a problem only machines can calculate, Market Brew built a platform to predict and optimize all 35 search ranking factors, and with the help of AI we did this across 1500+ different dimensions!

One of the core pillars of this effort is “Particle Swarm Optimization” (PSO) is a widely-used optimization algorithm beyond just search and machines, it is inspired by the behavior of social animals such as birds or fish.

In this article, we aim to provide an overview of the key concepts and principles behind PSO, as well as highlight some of its main advantages and limitations. To do so, we will address a series of ten questions that cover different aspects of the algorithm, such as its basic principles, main parameters, and convergence properties.

By answering these questions, we hope to provide a comprehensive introduction to PSO and help readers understand how it can be applied to various optimization tasks.

Optimization algorithms are a vital tool in many fields, from machine learning and data science to engineering and finance. They allow us to find the best solution to a given problem by searching through a space of possible solutions and selecting the one that maximizes or minimizes a particular objective function. Particle swarm optimization (PSO) is one such optimization algorithm that has gained popularity due to its simplicity, flexibility, and robustness.

PSO is an iterative optimization technique that was inspired by the behavior of social animals such as birds or fish. It involves a group of particles, or agents, that move through a search space and try to find the optimal solution to a given problem. Each particle is guided by its own experience and the experience of the other particles in the group, and the movement of the particles is determined by a set of rules that are based on the particle's current position, velocity, and the best position it has encountered so far.

In this article, we will delve into the key concepts and principles behind PSO, and address a series of ten questions that cover different aspects of the algorithm. By answering these questions, we hope to provide a comprehensive introduction to PSO and help readers understand how it can be applied to various optimization tasks.

What Is the Basic Principle Behind Particle Swarm Optimization (PSO)?

Particle Swarm Optimization (PSO) is a computational method used to find the optimal solution to a problem. It is based on the idea of simulating the social behavior of a group of birds or insects, known as a swarm, searching for food.

In PSO, a group of particles is used to represent potential solutions to a problem. Each particle has a position in the search space, which corresponds to a potential solution to the problem. The particles also have a velocity, which determines how they move within the search space.

The basic principle behind PSO is to use the social behavior of the swarm to guide the search for the optimal solution. Each particle in the swarm is influenced by the current position of the other particles, as well as its own personal best position.

The personal best position of a particle is the best solution that it has found so far. The global best position is the best solution found by any particle in the swarm. The particles move towards the global best position, as well as towards their own personal best position, in order to find the optimal solution to the problem.

One of the key features of PSO is that it is a population-based optimization algorithm, which means that it uses a group of potential solutions instead of just one. This allows the algorithm to explore a larger area of the search space and find a more diverse set of solutions.

Another important aspect of PSO is that it is a stochastic optimization algorithm, which means that it uses randomness in its search process. This can help the algorithm to escape local optima and explore different areas of the search space.

In summary, the basic principle behind PSO is to use the social behavior of a swarm of particles to guide the search for the optimal solution to a problem. The particles are influenced by the global and personal best positions, and use their velocity to move within the search space. The algorithm is population-based and stochastic, which allows it to explore a larger area of the search space and find a more diverse set of solutions.

How Does PSO Differ From Other Optimization Algorithms, Such as Gradient Descent or Genetic Algorithms?

Particle Swarm Optimization (PSO) is a heuristic optimization algorithm that is inspired by the social behavior of animals, particularly birds. It was first introduced by Kennedy and Eberhart in 1995 as a way to solve optimization problems in continuous search spaces. Since then, it has gained widespread popularity and has been applied to a wide range of applications, including machine learning, engineering design, and finance.

One key way in which PSO differs from other optimization algorithms is its population-based approach. Instead of using a single point to search for the optimal solution, as is the case with gradient descent or steepest descent algorithms, PSO uses a group of points, or particles, that move through the search space in search of the optimal solution. These particles are initialized randomly within the search space and are then updated using a combination of their own past performance and the performance of the best particle in the population. This allows PSO to explore a larger portion of the search space, increasing the chances of finding the optimal solution.

Another key difference between PSO and other optimization algorithms is its lack of need for gradient information. Many optimization algorithms, such as gradient descent, rely on the gradient of the objective function to guide the search process. However, PSO does not require gradient information and can be used to optimize functions that are not differentiable or have complex gradients. This makes it well-suited for optimization problems where gradient information is not available or is too expensive to compute.

A third way in which PSO differs from other optimization algorithms is its use of a personal best and global best position. Each particle in the population maintains a record of its personal best position, which is the position where it has achieved the highest performance in the past. The global best position is the position where the best particle in the population has achieved the highest performance. These best positions are used to update the particles' positions during the optimization process, allowing them to be guided towards better solutions.

In contrast, genetic algorithms use a different approach to optimization. They are based on the principle of natural selection, where the fittest individuals in a population are selected to reproduce and pass on their genetic traits to the next generation. This process is repeated over several generations until the optimal solution is found. Genetic algorithms are typically used for optimization problems with discrete search spaces and can be applied to a wide range of problems, including function optimization and scheduling.

One major difference between PSO and genetic algorithms is the way in which they explore the search space. While PSO uses a population of particles that move through the search space, genetic algorithms use a population of solutions that are modified through a process of crossover and mutation. This allows genetic algorithms to explore a larger portion of the search space, but it can also lead to a slower convergence to the optimal solution compared to PSO.

Another difference between PSO and genetic algorithms is their ability to handle complex search spaces. PSO is well-suited for optimization problems with continuous search spaces and can handle complex, non-differentiable functions. In contrast, genetic algorithms are better suited for optimization problems with discrete search spaces and may struggle with more complex functions.

Overall, PSO is a powerful optimization algorithm that is well-suited for optimization problems with continuous search spaces. It is easy to implement and does not require gradient information, making it a useful tool for solving a wide range of optimization problems. While it may not be as effective as some other algorithms in certain situations, it is a versatile tool that can be applied to a wide range of problems and has proven to be effective in many real-world applications.

What Are the Main Parameters Involved in PSO, and How Do They Affect the Performance of the Algorithm?

Particle Swarm Optimization (PSO) is a popular optimization algorithm that has been widely used in various fields including data mining, machine learning, and engineering. It is inspired by the behavior of a flock of birds searching for food in their environment. The algorithm works by simulating a group of particles that move around in a search space, with each particle representing a potential solution to the optimization problem. The main parameters involved in PSO include the number of particles, the velocity update equation, the inertia weight, the cognitive and social learning factors, and the swarm topology.

The number of particles in a PSO algorithm is an important parameter that determines the number of potential solutions that are explored in the search space. A larger number of particles will generally result in a more thorough exploration of the search space, which can increase the chances of finding a good solution. However, increasing the number of particles also increases the computational complexity of the algorithm, so there is a trade-off between the search efficiency and the computational cost.

The velocity update equation is another important parameter that determines the movement of the particles in the search space. The velocity of a particle at each iteration is calculated based on its current position, the best position it has ever reached (pbest), and the best position reached by any particle in the swarm (gbest). The velocity update equation also includes the inertia weight, which determines how much influence the current velocity of a particle has on its movement. A higher inertia weight will cause the particles to maintain their momentum and explore a wider area, while a lower inertia weight will make the particles more responsive to changes in the search space.

The cognitive and social learning factors are also important parameters in PSO. The cognitive learning factor, also known as the self-learning factor, determines the influence of the particle's pbest on its movement. A higher cognitive learning factor will cause the particle to be more influenced by its own past performance, while a lower cognitive learning factor will make the particle more responsive to changes in the search space. The social learning factor, also known as the swarm learning factor, determines the influence of the gbest on the movement of the particles. A higher social learning factor will cause the particles to be more influenced by the best position reached by any particle in the swarm, while a lower social learning factor will make the particles more responsive to changes in their local environment.

The swarm topology is another important parameter in PSO. The topology refers to the way the particles are connected and communicate with each other. In a fully connected topology, all particles communicate with every other particle in the swarm. This can result in a more efficient exploration of the search space, but it also increases the computational complexity of the algorithm. In a ring topology, the particles are connected in a circular fashion, with each particle communicating with its two nearest neighbors. This can result in a more localized exploration of the search space, but it may also limit the ability of the particles to explore more distant areas.

In summary, the main parameters involved in PSO include the number of particles, the velocity update equation, the inertia weight, the cognitive and social learning factors, and the swarm topology. These parameters all have an impact on the performance of the algorithm, with the number of particles and the velocity update equation being particularly important. The choice of these parameters will depend on the specific optimization problem being solved and the desired balance between search efficiency and computational cost.

How Is the Movement of Particles in the Swarm Determined in PSO?

Particle Swarm Optimization (PSO) is an optimization algorithm that is inspired by the behavior of swarms of animals, such as birds or bees, in nature.

In this algorithm, a set of particles is used to search for the optimal solution to a problem. These particles move through the search space and are guided by their own personal best solution, as well as the best solution found by the entire swarm.

The movement of particles in the swarm is determined by a set of mathematical equations that incorporate the current position of the particle, its personal best solution, and the global best solution. These equations are used to calculate the velocity and position of the particle at each iteration of the algorithm.

The first step in determining the movement of particles in the swarm is to define the position of the particle in the search space. This position is typically represented by a vector of real-valued numbers, and is used to encode the values of the variables that are being optimized.

Once the position of the particle has been defined, the algorithm must determine the velocity of the particle. This is done using the following equation:

Velocity(t+1) = w * Velocity(t) + c1 * rand(0,1) * (PersonalBest(t) - Position(t)) + c2 * rand(0,1) * (GlobalBest(t) - Position(t))

In this equation, w is a constant known as the inertia weight, which determines how much of the particle's previous velocity is carried over to the next iteration. The constants c1 and c2 are known as the acceleration constants, and they determine how much the particle is influenced by its personal best and global best solutions, respectively. The rand(0,1) function generates a random number between 0 and 1, which is used to introduce some element of randomness into the movement of the particle.

Once the velocity of the particle has been calculated, the algorithm can determine the new position of the particle using the following equation:

Position(t+1) = Position(t) + Velocity(t+1)

This equation simply adds the velocity of the particle to its current position, resulting in a new position in the search space.

In addition to these equations, the algorithm also includes a number of constraints that are used to ensure that the particles do not wander too far from the search space or get stuck in local optima. For example, the algorithm may include limits on the maximum velocity of the particles, or it may use a variety of techniques to prevent the particles from getting stuck in a particular region of the search space.

Overall, the movement of particles in the swarm is determined by a combination of the current position and velocity of the particle, as well as its personal best and global best solutions. These factors are used to guide the particle through the search space and help it find the optimal solution to the problem being solved. By adjusting the various parameters of the algorithm, such as the inertia weight and acceleration constants, it is possible to fine-tune the behavior of the particles in the swarm and improve the performance of the algorithm.

How Is the Global Best Solution Found in PSO, and How Is It Used to Guide the Search Process?

The global best solution in Particle Swarm Optimization (PSO) is found through the use of a swarm of particles that move through a search space to find the optimal solution to a problem.

Each particle represents a potential solution to the problem and is influenced by its own personal best solution as well as the global best solution found by the entire swarm.

The global best solution is determined by evaluating the fitness of each particle's solution and selecting the one with the highest score. This solution is then used to guide the search process by providing a target for the other particles to strive towards.

The search process in PSO begins with a group of randomly initialized particles placed in the search space. Each particle is assigned a velocity and position, and the optimization process begins by evaluating the fitness of each particle's solution. The fitness score is a measure of how well the solution fits the problem, with higher scores indicating a better fit.

Once the fitness of each particle's solution has been determined, the global best solution is updated to reflect the particle with the highest score. This global best solution is then used to guide the search process by providing a target for the other particles to strive towards.

The particles are then updated by adjusting their velocities and positions based on the influence of their personal best solution and the global best solution. The velocity of a particle is updated using the following formula:

Velocity(t+1) = w * Velocity(t) + c1 * rand() * (PersonalBest - Position(t)) + c2 * rand() * (GlobalBest - Position(t))

Where w is the inertia weight, c1 and c2 are acceleration constants, rand() is a random number between 0 and 1, PersonalBest is the particle's personal best solution, and GlobalBest is the global best solution.

The position of a particle is then updated using the following formula:

Position(t+1) = Position(t) + Velocity(t+1)

The above formulas are repeated for each particle in the swarm until the optimization process has reached a satisfactory solution or the maximum number of iterations has been reached.

One of the benefits of using PSO is that it can be easily parallelized, allowing for faster computation times when solving complex problems. Additionally, PSO is a robust optimization method that can handle problems with multiple local optima and does not require the calculation of gradients, making it well suited for problems with noisy or discontinuous data.

Overall, the global best solution in PSO is used to guide the search process by providing a target for the particles to strive towards. By continuously updating the velocities and positions of the particles based on the influence of the personal best and global best solutions, the swarm is able to effectively navigate the search space and find the optimal solution to the problem.

Can PSO Be Used to Solve Both Continuous and Discrete Optimization Problems?

Particle Swarm Optimization (PSO) is a computational method that is commonly used to solve optimization problems. It was first introduced in 1995 by James Kennedy and Russell Eberhart, and it has since been widely adopted by researchers in various fields due to its simplicity and effectiveness.

PSO is based on the idea of simulating the behavior of a group of birds or insects as they search for food or a suitable place to settle.

One of the key characteristics of PSO is that it is a population-based optimization algorithm. This means that it works by maintaining a population of candidate solutions, and it iteratively improves these solutions by updating their positions based on the experiences of the entire population. PSO uses a simple mathematical model to simulate the behavior of the particles, and it relies on the interactions between the particles to find good solutions to the optimization problem.

One of the main advantages of PSO is that it can be used to solve a wide range of optimization problems, including both continuous and discrete optimization problems. Continuous optimization problems are those in which the variables are real numbers and can take any value within a given range. Discrete optimization problems are those in which the variables are integers or binary values and can only take specific values.

In the case of continuous optimization problems, PSO can be used to find the optimal values of the variables that minimize or maximize a given objective function. The objective function is a mathematical expression that defines the problem to be solved, and it depends on the variables and the constraints of the problem. For example, in a problem of minimizing the cost of producing a product, the objective function could be a function of the number of units produced, the cost of labor, and the cost of materials.

To solve a continuous optimization problem using PSO, the algorithm starts by initializing a population of particles, each of which represents a candidate solution. The position of each particle is initialized to a random value within the given range of the variables. The algorithm then iteratively updates the positions of the particles based on the experience of the entire population.

At each iteration, each particle is updated by considering two factors: its own best solution (pbest) and the best solution found by the entire population (gbest). The pbest is the best solution that the particle has found so far, and the gbest is the best solution found by any particle in the population. The particle is then moved towards the pbest and gbest positions, using a mathematical formula that combines these two factors with the current position of the particle.

The process of updating the positions of the particles continues until a satisfactory solution is found or a predetermined number of iterations is reached. The final position of the particles represents the optimal solution to the problem.

In the case of discrete optimization problems, PSO can also be used to find the optimal values of the variables. However, in this case, the algorithm needs to be adapted to handle the discrete nature of the variables. One way to do this is by using a variant of PSO called Binary Particle Swarm Optimization (BPSO).

BPSO is similar to standard PSO, but it uses a binary representation of the variables instead of a continuous representation. In other words, each particle is represented by a string of binary digits, and the position of the particle corresponds to the values of these digits. The algorithm works by updating the binary values of the particles based on the experience of the entire population, just like in standard PSO.

To solve a discrete optimization problem using BPSO, the algorithm starts by initializing a population of particles, each of which represents a candidate solution. The position of each particle is initialized to a random binary value within the given range of the variables. The algorithm then iteratively updates the positions of the particles based on the pbest and gbest values, just like in standard PSO.

One of the main advantages of using BPSO to solve discrete optimization problems is that it can handle problems with a large number of variables and constraints. This is because the binary representation of the variables allows for a more compact representation of the solution space, making it easier for the algorithm to search for good solutions.

In conclusion, PSO can be used to solve both continuous and discrete optimization problems, albeit with some slight adaptations in the case of discrete optimization. It is a simple and effective optimization algorithm that has been widely adopted by researchers in various fields due to its ability to find good solutions in a relatively short time. Whether the problem is continuous or discrete, PSO can be a useful tool to have in the optimization toolkit.

How Is the Convergence of the Algorithm Determined in PSO?

The convergence of an algorithm in particle swarm optimization (PSO) is determined by how well the algorithm is able to find the optimal solution to a problem.

This can be measured in a number of different ways, including the number of iterations required to reach the optimal solution, the accuracy of the solution, and the speed at which the solution is found.

One way to determine the convergence of the algorithm is by examining the error between the current solution and the optimal solution. As the algorithm iterates through the problem space, it should be able to find increasingly better solutions with each iteration. If the error between the current solution and the optimal solution is decreasing over time, then this suggests that the algorithm is converging on the optimal solution.

Another way to determine the convergence of the algorithm is by examining the diversity of the particle population. In PSO, the particle population is made up of individual particles that each represent a potential solution to the problem. If the particles in the population are highly diverse, with a wide range of potential solutions, then this suggests that the algorithm is still exploring the problem space and has not yet converged on the optimal solution. On the other hand, if the particles in the population are less diverse, with a narrow range of potential solutions, then this suggests that the algorithm is starting to converge on the optimal solution.

The rate of convergence of the algorithm can also be measured by examining the number of iterations required to reach the optimal solution. If the algorithm is able to find the optimal solution in a relatively small number of iterations, then this suggests that the algorithm is converging quickly. On the other hand, if the algorithm requires a large number of iterations to reach the optimal solution, then this suggests that the algorithm is converging slowly.

There are a number of factors that can affect the convergence of the algorithm in PSO. One of these factors is the quality of the initial particle population. If the initial particle population is of high quality, with a wide range of potential solutions, then this can help to ensure that the algorithm is able to explore the problem space effectively and find the optimal solution. On the other hand, if the initial particle population is of low quality, with a narrow range of potential solutions, then this can hinder the convergence of the algorithm.

Another factor that can affect the convergence of the algorithm is the size of the particle population. A larger particle population can help to ensure that the algorithm is able to explore the problem space effectively and find the optimal solution. However, a larger particle population can also increase the computational complexity of the algorithm, which may slow down the convergence process.

The convergence of the algorithm can also be affected by the choice of the PSO parameters. Different combinations of PSO parameters can lead to different convergence characteristics. For example, increasing the learning rate of the particles may help to speed up the convergence of the algorithm, but it may also increase the risk of the algorithm getting stuck in a local optimal solution.

In summary, the convergence of the algorithm in PSO is determined by how well the algorithm is able to find the optimal solution to a problem. This can be measured in a number of different ways, including the error between the current solution and the optimal solution, the diversity of the particle population, the number of iterations required to reach the optimal solution, and the quality of the initial particle population. The convergence of the algorithm can be affected by a variety of factors, including the size of the particle population, the choice of the PSO parameters, and the computational complexity of the algorithm.

Can PSO Be Used in Combination with Other Optimization Techniques, Such as Local Search Algorithms or Simulated Annealing?

Particle Swarm Optimization (PSO) is a computational optimization technique that is inspired by the social behavior of animals, such as birds and fish, which work together to find food or other resources.

In the context of optimization, PSO is used to find the best possible solution to a given problem by searching through a large set of potential solutions and choosing the one that maximizes a given objective function.

One of the main benefits of PSO is that it is relatively simple to implement and can be applied to a wide range of optimization problems. However, it does have some limitations, such as the inability to escape from local minima or the possibility of getting stuck in a suboptimal solution. To address these limitations, it is possible to use PSO in combination with other optimization techniques, such as local search algorithms or simulated annealing.

Local search algorithms are optimization techniques that explore the local neighborhood of a given solution in order to find better solutions. These algorithms typically start with a given initial solution and then iteratively improve it by making small changes to the solution. Local search algorithms can be used in combination with PSO to help the algorithm escape from local minima and improve the quality of the final solution.

For example, consider a problem in which we are trying to find the minimum value of a given objective function. If we use PSO alone, we may end up with a solution that is close to the minimum but not actually the minimum. By using a local search algorithm, we can explore the local neighborhood of the solution found by PSO and potentially find the true minimum value.

Simulated annealing is another optimization technique that can be used in combination with PSO. Simulated annealing is a metaheuristic optimization technique that is inspired by the annealing process used in metallurgy to harden materials. It works by starting with a given initial solution and gradually making random changes to the solution in order to find a better one. The probability of making a random change is gradually reduced over time, which helps to prevent the algorithm from getting stuck in a suboptimal solution.

When simulated annealing is used in combination with PSO, it can help the algorithm to escape from local minima and explore a larger portion of the search space. This can be particularly useful in cases where the search space is large and complex, as it allows the algorithm to find solutions that may not be reachable through traditional PSO techniques.

Overall, it is possible to use PSO in combination with other optimization techniques, such as local search algorithms and simulated annealing, to improve the quality and effectiveness of the optimization process. These techniques can be used to help PSO escape from local minima and explore a larger portion of the search space, which can lead to better solutions and improved performance. However, it is important to carefully consider the specific characteristics of the problem at hand when choosing which optimization techniques to use, as different techniques may be more or less effective in different situations.

How Does the Choice of the Initial Positions of the Particles in the Swarm Affect the Performance of PSO?

The choice of the initial positions of the particles in a particle swarm optimization (PSO) algorithm plays a significant role in the performance of the algorithm.

The initial positions of the particles can have a direct impact on the convergence speed and overall efficiency of the algorithm, as well as the quality of the solution found.

One of the primary factors that can affect the performance of PSO based on the initial positions of the particles is the distribution of the particles. If the particles are initially positioned too close together, they may become stuck in a local minimum and fail to find the global optimum solution. On the other hand, if the particles are positioned too far apart, they may take longer to converge on the optimal solution due to the increased search space. Ideally, the initial positions of the particles should be evenly distributed throughout the search space to ensure that the algorithm has a good chance of finding the global optimum solution.

Another factor that can impact the performance of PSO based on the initial positions of the particles is the diversity of the particles. If the particles are all initially positioned in a similar location, they may all follow similar paths and fail to explore the entire search space effectively. This can lead to slower convergence and a suboptimal solution. On the other hand, if the particles are initially positioned in diverse locations, they are more likely to explore a wider range of the search space and find a better solution more quickly.

The initial velocities of the particles can also affect the performance of PSO based on the initial positions. If the initial velocities are too high, the particles may move too quickly and fail to explore the search space effectively. On the other hand, if the initial velocities are too low, the particles may move too slowly and take longer to find the optimal solution. It is important to choose initial velocities that are appropriate for the specific problem being solved, as well as the initial positions of the particles.

The choice of the initial positions can also impact the convergence speed of the PSO algorithm. If the particles are initially positioned in a region of the search space that is close to the optimal solution, they are more likely to converge on the solution more quickly. On the other hand, if the particles are initially positioned in a region of the search space that is far from the optimal solution, they may take longer to converge. This can be especially important in situations where a fast convergence time is critical, such as in real-time control systems.

In summary, the choice of the initial positions of the particles in a PSO algorithm can significantly impact the performance of the algorithm. The initial positions should be chosen carefully to ensure that the particles are evenly distributed throughout the search space, are diverse, and have appropriate initial velocities. This can help to ensure that the algorithm converges quickly and finds the optimal solution.

What Are Some Common Challenges or Limitations of Using PSO for Optimization Tasks?

Particle Swarm Optimization (PSO) is a popular optimization technique used in various fields such as machine learning, engineering, and finance. It is a heuristic optimization algorithm that is inspired by the collective behavior of birds and insects.

PSO has several advantages such as fast convergence, easy implementation, and the ability to handle large-dimensional problems. However, there are also several challenges and limitations associated with using PSO for optimization tasks.

One of the main challenges of using PSO is the sensitivity of the algorithm to the initial conditions. The performance of the algorithm depends heavily on the initial positions and velocities of the particles in the swarm. If the initial conditions are not chosen properly, the algorithm may get stuck in a local minimum or may not converge to the global optimum. This can be a problem in real-world applications where the initial conditions may not be known accurately.

Another challenge is the lack of control over the convergence rate of the algorithm. PSO is known to converge faster than many other optimization algorithms, but the rate of convergence can be unpredictable. The algorithm may converge to a good solution quickly in some cases, but it may take a long time in others. This makes it difficult to set a fixed time limit for the optimization process.

Another limitation of PSO is the inability to handle constraints. The algorithm does not have any built-in mechanism to handle constraints such as bounds on the variables or linear or nonlinear equality or inequality constraints. This makes it difficult to use PSO for optimization problems that have constraints.

Another challenge is the difficulty in selecting the appropriate values for the hyperparameters of the algorithm. PSO has several hyperparameters such as the inertia weight, the acceleration constants, and the swarm size. These hyperparameters play a crucial role in the performance of the algorithm, but it is often difficult to determine the optimal values for these hyperparameters. This can lead to suboptimal performance or even failure of the algorithm.

Another limitation of PSO is its reliance on random initialization. The algorithm relies on random initialization of the particles in the swarm, which means that the results of the optimization process may vary each time the algorithm is run. This can be a problem in cases where the optimization results need to be reproducible.

Another challenge of using PSO is the lack of interpretability of the optimization process. The algorithm does not provide any insight into the optimization process, which makes it difficult to understand the reasons behind the optimization results. This can be a problem in cases where the optimization results need to be explained to stakeholders or decision-makers.

In conclusion, PSO is a powerful optimization technique that has several advantages, but it also has several challenges and limitations. Some of the common challenges and limitations of using PSO for optimization tasks include sensitivity to the initial conditions, unpredictable convergence rate, inability to handle constraints, difficulty in selecting the appropriate hyperparameters, reliance on random initialization, and lack of interpretability of the optimization process. Despite these challenges and limitations, PSO can still be an effective tool for solving many optimization problems if used appropriately.

PSO IN SEARCH ENGINE MODELS

How Particle Swarm Optimization is Used in Search Engine Models

Particle Swarm Optimization (PSO) is a computational method that is used to optimize complex systems and through the use of transfer learning, help find the optimal solution to a particular problem. It is based on the idea of social learning and borrows its inspiration from the behavior of swarms of animals, such as birds or bees, which move in a coordinated manner to search for food or other resources.

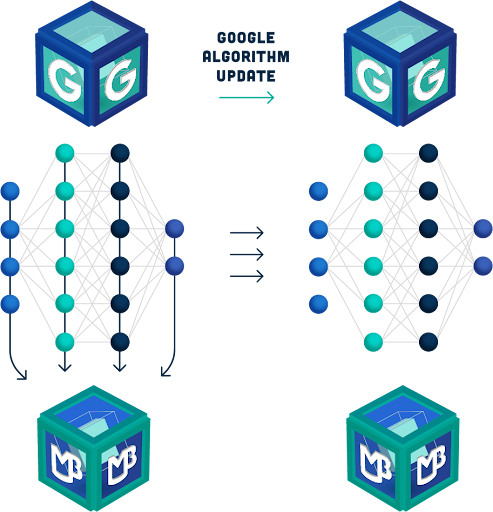

Market Brew's search engine models use PSO to fine-tune the bias and weight settings of its algorithms that are used to rank and sort search results. These settings are crucial to the accuracy and relevance of its search results, and they need to be carefully calibrated in order to simulate the target search engine and achieve the desired performance.

The process of using PSO to optimize search engine models begins by defining the objective function that needs to be optimized. This function represents the performance of the target search engine results, and it is used to evaluate the quality of the search results produced by the model. The objective function is based on factors such as the relevance of the search results, the number of relevant results that are returned, the order of sites, how well the landing page from each site is represented, and more.

Once the objective function has been defined, the PSO algorithm is used to search for the optimal bias and weight settings that will produce the best possible results. This is done by creating a population of "particles" that represent different combinations of bias and weight settings. These particles are then allowed to move through the search space, guided by the objective function and their own experiences.

As the particles move through the search space, they are influenced by two factors: the "personal best" position of each particle, which represents the best combination of bias and weight settings that the particle has encountered so far, and the "global best" position, which represents the best combination of bias and weight settings found by any particle in the population.

The particles use these two factors to guide their movement through the search space, and they adjust their bias and weight settings based on the relative strengths of these two factors. This allows them to explore the search space more efficiently and to converge on the optimal solution more quickly.

As the particles move through the search space, they also communicate with one another, sharing information about their experiences and their current positions. This allows them to learn from one another and to work together to find the best possible solution.

Once the particles have converged on the optimal solution, the search engine model is calibrated to match the target search engine's behavior. This means that the model produces search results that are similar in quality and relevance to the results produced by the target search engine, and many algorithmic correlations can be used to direct the SEO strategy.

Overall, PSO is a powerful and effective tool for optimizing search engine models whenever the target search engine changes its algorithms.

By using PSO to calibrate the bias and weight settings of its algorithms, Market Brew's search engine models are able to test SEO optimizations with results that are accurate, relevant, and highly targeted, helping users find the information they need quickly and easily.

Ready to Take Control of Your SEO?

See how Market Brew's predictive SEO models and expert team can unlock new opportunities for your site. Get tailored insights on how we can help your business rise above the competition.

Schedule a free demonstration today and discover how we engineer SEO success.

You may also like

News

Google Says… Market Brew Sees

Guides & Videos

Link Earning Sustainability for SEO

Guides & Videos