Robots Exclusion Standard and Its Role in Web Crawling

In search engines and web crawling, the Robots Exclusion Standard plays a central role in controlling which pages crawlers visit and, in turn, how a site's pages are indexed and ranked.

This article provides an in-depth look at how the standard works and how search engines use it to manage the flow of web crawlers. We will also explore some of the challenges and limitations of robots exclusion, as well as its potential impact on the overall effectiveness of search engines.

Web crawling is an essential part of the search engine process. By sending out web crawlers, or "spiders," search engines are able to discover new web pages and add them to their index. This allows users to find relevant information when they search for specific keywords or phrases.

However, not every web page should be crawled and indexed. Site owners usually want to keep pages such as login screens, password-protected areas, or pages containing sensitive information out of search engine results. In these cases, webmasters can use the Robots Exclusion Standard to tell search engine crawlers which pages to stay away from.

The Robots Exclusion Standard is implemented primarily through robots.txt, a plain-text file placed at the root of a web server that specifies which pages or files on that server web crawlers should not crawl. By publishing this file, webmasters control which pages compliant crawlers fetch. Blocking a URL from crawling does not by itself guarantee that it stays out of the index, however: a disallowed URL that other pages link to can still be listed in search results, usually without a description.

How Search Engines Use the Robots Exclusion Standard

The Robots Exclusion Standard is a widely used protocol that allows website owners to communicate with search engines about which parts of their website should be crawled and indexed. This standard is implemented through the use of a robots.txt file and META Robots tags, which provide specific instructions to search engine bots.

The robots.txt file is a text file that is placed in the root directory of a website. It contains rules, grouped by crawler (user agent), that list the URL paths search engine bots should not access. For example, if a website owner does not want search engines to crawl their login page, they would add a Disallow rule for that page's path (such as "Disallow: /login") to the robots.txt file.
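Python's standard-library `urllib.robotparser` module offers a quick way to see how a crawler reads such a rule. The sketch below assumes a hypothetical site whose robots.txt blocks only `/login`. The bot name `ExampleBot` is also made up. Disallow values are matched as path prefixes, so the rule also blocks `/login/reset` and even `/login-help`. Keep this in mind when choosing paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks only the login page for every bot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes an iterable of lines

# Disallow values are path prefixes, so anything starting with /login is blocked.
for path in ["/", "/products/widget", "/login", "/login/reset", "/login-help"]:
    print(path, parser.can_fetch("ExampleBot", path))
```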

META Robots tags, on the other hand, are HTML meta elements added to individual pages on a website. They give page-level control over whether search engines index a page and follow its links. For example, a website owner may want search engines to index their homepage but keep a specific product page out of search results. In this case, the product page would include a META Robots tag with the "noindex" value, such as <meta name="robots" content="noindex">. The homepage needs no tag at all, because "index" is the default; a tag with the "index" value is harmless but redundant.

Search engine bots, also known as spiders or crawlers, regularly visit websites to crawl and index their content. When a search engine bot visits a website, it will first check for the presence of a robots.txt file. If the file exists, the bot will parse the file and use the instructions to determine which pages it should not crawl.
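The "check robots.txt first" step can be sketched as follows. The sketch assumes a crawler that keeps one parsed file per host. `RobotsGate` and the `fetch_text` callable are hypothetical names used for illustration. A real crawler would download the file over HTTP and handle errors and cache expiry more carefully. The sketch treats a missing robots.txt as "no restrictions", which is the common convention.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_url(page_url):
    """robots.txt is always served from the root of the page's host."""
    return urljoin(page_url, "/robots.txt")


class RobotsGate:
    """Caches one parsed robots.txt per host and answers 'may I crawl this URL?'.

    fetch_text is a hypothetical callable supplied by the crawler: it returns the
    robots.txt body for a URL, or None when the file does not exist.
    """

    def __init__(self, fetch_text, user_agent):
        self.fetch_text = fetch_text
        self.user_agent = user_agent
        self.cache = {}

    def allowed(self, page_url):
        rurl = robots_url(page_url)
        if rurl not in self.cache:
            # Fetched once, before any page on this host is crawled.
            body = self.fetch_text(rurl)
            parser = RobotFileParser()
            # A missing robots.txt is parsed as an empty rule set: everything allowed.
            parser.parse(body.splitlines() if body is not None else [])
            self.cache[rurl] = parser
        return self.cache[rurl].can_fetch(self.user_agent, page_url)


# Usage with an in-memory stand-in for HTTP fetching.
fake_files = {"https://example.com/robots.txt": "User-agent: *\nDisallow: /private/\n"}
gate = RobotsGate(fake_files.get, "ExampleBot")
print(gate.allowed("https://example.com/private/report.html"))
print(gate.allowed("https://example.com/blog/post?id=7"))
print(gate.allowed("https://other.example.org/private/"))  # host has no robots.txt
```

Because the parsed file is cached per host, the crawler does not download robots.txt again for every page. Real crawlers refresh their cached copy periodically.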

If a search engine bot fetches a page that carries a META Robots tag, it also follows the instructions in that tag. The bot has to crawl the page to read the tag, so "noindex" does not stop crawling. It tells the bot not to add the page to the index. Likewise, "nofollow" tells the bot not to follow the links on the page. The "index" value simply confirms the default behavior, so the bot crawls and indexes the page as usual.

In addition to giving instructions to search engine bots, the Robots Exclusion Standard also covers how bots should handle certain scenarios. One important case is the interaction between the two mechanisms. A bot can only read a META Robots tag on a page it is allowed to fetch. If robots.txt disallows a URL, the bot never downloads the page and never sees its META Robots tag, so a "noindex" on that page has no effect. To keep a page out of search results with "noindex", the page must remain crawlable.
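The interaction between the two mechanisms can be sketched as a small decision function. The sketch assumes a crawler that consults robots.txt before fetching a URL and reads META Robots only from pages it has actually downloaded. `crawler_outcome` and its field names are hypothetical.

```python
def crawler_outcome(robots_allows_fetch, meta_values):
    """Combine a robots.txt decision with a page's META Robots values.

    robots_allows_fetch: result of the robots.txt check for the URL.
    meta_values: lower-case values from the page's META Robots tag,
                 e.g. {"noindex", "follow"}; empty if the page has no tag.
    """
    if not robots_allows_fetch:
        # The page is never downloaded, so its META Robots tag is never read.
        # (The bare URL may still be listed if other pages link to it.)
        return {"fetched": False, "meta_read": False,
                "index_content": False, "follow_links": False}
    return {
        "fetched": True,
        "meta_read": True,
        "index_content": "noindex" not in meta_values,  # "index" is the default
        "follow_links": "nofollow" not in meta_values,  # "follow" is the default
    }


# Blocked in robots.txt AND tagged noindex: the noindex is never seen.
print(crawler_outcome(False, {"noindex"}))
# Crawlable and tagged noindex: fetched, kept out of the index, links followed.
print(crawler_outcome(True, {"noindex"}))
```

The first call shows why a page that should disappear from search results must stay crawlable.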

Overall, the Robots Exclusion Standard is a crucial tool for website owners to control which parts of their website are crawled and indexed by search engines. By using a robots.txt file and META Robots tags, website owners can ensure that only relevant and valuable content is included in search engine results. This helps improve the user experience and ensures that search engines provide accurate and useful results to their users.

What Is Robots.txt

A robots.txt file is a text file that tells web crawlers and other bots which pages on a website they may request. The file is placed in the root directory of a website. It specifies which pages or files bots are allowed to access and which ones they are not.

The robots.txt file is important for website owners because it lets them control which pages on their website search engines crawl. Website owners can use it to keep crawlers away from the entire site or to block specific pages or files. This can be useful for a number of reasons, such as reducing the crawling of duplicate content, keeping private areas out of crawlers' paths, or concentrating crawl activity on the pages that matter for the site's search engine rankings. Keep in mind that robots.txt is itself publicly readable and is not an access control. It does not protect sensitive information, and a blocked URL can still be indexed if other pages link to it. Use authentication to protect private content, and use a "noindex" directive on a crawlable page to keep it out of search results.

The robots.txt file uses a simple line-based syntax. Rules are organized into groups. Each group starts with a "User-agent" line that names the bot the rules apply to, and "User-agent: *" matches every bot that has no more specific group. The User-agent line is followed by one or more "Allow" or "Disallow" lines. Each of these gives a URL path prefix that bots are allowed or disallowed to access.
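A file can hold several groups. Each bot uses the group that names it and falls back to `User-agent: *` only when no group matches. The sketch below shows that fallback using Python's `urllib.robotparser` and a hypothetical `ExampleBot`. This parser is deliberately simple. For instance, it applies the first matching rule in file order rather than the most specific one. Treat it as an illustration, not a model of any particular search engine.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for a named crawler, one catch-all group.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /drafts/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# ExampleBot matches its own group, so only /drafts/ is off limits for it.
print(parser.can_fetch("ExampleBot/1.0", "/blog/"))     # True
print(parser.can_fetch("ExampleBot/1.0", "/drafts/a"))  # False
# Any other bot falls back to the "*" group, which blocks everything.
print(parser.can_fetch("OtherBot", "/blog/"))           # False
```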

For example, the following robots.txt file would prevent all bots from accessing any pages on the website:

User-agent: *
Disallow: /

On the other hand, the following robots.txt file would allow all bots to access all pages on the website:

User-agent: *
Allow: /
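The two example files can be checked with Python's `urllib.robotparser`, as sketched below with a hypothetical bot name. The third variant puts a blank line between `User-agent` and `Disallow`. Parsers differ on this point. Python's parser follows the original convention that a blank line ends a record, so it discards the group before reading its rule. Keeping a group's lines together avoids the ambiguity.

```python
from urllib.robotparser import RobotFileParser


def load(text):
    """Parse robots.txt text with Python's standard-library parser."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


block_all = load("User-agent: *\nDisallow: /\n")
allow_all = load("User-agent: *\nAllow: /\n")
# Same rules as block_all, but with a blank line inside the group.
split_group = load("User-agent: *\n\nDisallow: /\n")

for name, parser in [("block_all", block_all), ("allow_all", allow_all),
                     ("split_group", split_group)]:
    print(name, parser.can_fetch("ExampleBot", "/any/page.html"))
```

With this parser, `split_group` allows every URL. The blank line ends the record before the `Disallow` rule is read, so the rule is silently dropped.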

It's important to note that the robots.txt file is only a suggestion, and not all bots will necessarily follow the rules specified in the file. Some bots, such as search engine crawlers, will generally respect the rules in the file, but others, such as malicious bots, may ignore the rules and access the website regardless.

In addition to "Allow" and "Disallow" lines, the robots.txt file can include other directives, such as "Sitemap" and "Crawl-delay". The "Sitemap" directive gives the full URL of the website's XML sitemap, a file that lists the URLs the site owner wants search engines to discover. This directive applies to the whole file rather than to one user-agent group. The "Crawl-delay" directive specifies how many seconds a bot should wait between requests to the website. Crawl-delay is not part of the original standard, and support varies: Google ignores it, while some other crawlers honor it.
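A crawler that honors these directives might read them back as sketched below with `urllib.robotparser` (`site_maps()` requires Python 3.8 or later). The file contents and the bot name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a crawl delay and a sitemap declaration.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /search

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Sitemap lines are collected regardless of which group they appear near.
print(parser.site_maps())                # ['https://example.com/sitemap.xml']
# Crawl-delay belongs to the group that matches the bot (here the "*" group).
print(parser.crawl_delay("ExampleBot"))  # 10
```

A polite crawler would then wait the returned number of seconds between requests to this host, and would start discovery from the listed sitemap.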

In conclusion, the robots.txt file is an important tool that lets website owners control how search engines and other bots crawl their website. With it, website owners can keep bots away from the whole site or from specific pages and files. Although robots.txt is only advisory, most reputable bots respect the rules it specifies.

How to Use META Robots

META Robots is an HTML meta tag that tells search engines whether to index a page and whether to follow its links. The tag is placed in the <head> section of a page's HTML, so it works page by page. It can be used to specify which pages should be indexed and which should be excluded from search engine results.

One of the most common uses of the META Robots tag is to keep pages that are not intended for public viewing, such as login or registration pages, out of search engine results. This can improve the overall user experience. Keep in mind that "noindex" only hides a page from search results and does not stop anyone from opening the page, so sensitive information still needs proper access controls.

The META Robots tag can also specify whether search engines should follow the links on a page. Setting "nofollow" asks crawlers not to follow the page's links. Some site owners use this to steer crawlers away from pages that are not relevant to the site's content, such as pages with low-quality content.

Unlike robots.txt, the META Robots tag cannot specify the location of an XML sitemap. To help search engines find and index a site's pages, declare the sitemap with the "Sitemap" directive in robots.txt or submit it directly to the search engine.

There are several different values that can be used with the META Robots tag, each with a specific purpose. The "index" value tells search engines to index the page, while the "noindex" value tells search engines not to index the page. The "follow" value tells search engines to follow links on the page, while the "nofollow" value tells search engines not to follow links on the page.
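A crawler has to extract these values from the page's HTML before it can act on them. The sketch below uses Python's standard `html.parser` module, and `MetaRobotsParser` and `robots_directives` are hypothetical names. It assumes the values are combined in one comma-separated `content` attribute (for example `"noindex, follow"`) and treats "index" and "follow" as the defaults. It ignores crawler-specific tags such as `<meta name="googlebot">`.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the comma-separated values of <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.values = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names (not attribute values).
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() != "robots":
            return
        for token in attributes.get("content", "").split(","):
            token = token.strip().lower()
            if token:
                self.values.add(token)


def robots_directives(html):
    """Return (may_index, may_follow); both default to True when no tag is present."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return "noindex" not in parser.values, "nofollow" not in parser.values


page = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
print(robots_directives(page))  # (False, True)
```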

The META Robots tag can also take other values, such as "noodp". This value told search engines not to use the Open Directory Project (DMOZ) description of a page in their results, which helped ensure that a page's description in search results accurately reflected its content. The Open Directory Project closed in 2017, and major search engines no longer use "noodp".

Overall, the META Robots tag is an important tool for controlling how search engines index a website's pages and follow their links. Used correctly, it helps website owners keep unwanted pages out of search results, improve the visibility and ranking of the pages that matter, and improve the user experience.

Important Considerations of the Robots Exclusion Standard

While META Robots and robots.txt exclusions can be useful for webmasters in some cases, they can also lead to PageRank evaporation. PageRank evaporation is a term used in search engine optimization (SEO) to describe the loss of PageRank (PR) on a webpage caused by the "nofollow" directive, whether it is set as a META Robots value or as a link attribute.

A nofollowed link can be viewed as a non-recoverable sink: its share of PageRank is not redistributed to the rest of the links on the page.

Another pitfall arises when a META "noindex" value has been set for a page. Instead of noindexing a page on your site, it is advisable to canonicalize it or 301 redirect it, which preserves the PageRank within the site.

Think of a noindexed page as a node in the site's link graph. It still takes up a portion of the site's PageRank, but because it is noindexed, it will never contribute to the site's traffic or revenue.

As for adding rel="nofollow" to a link: don't do it. Based on Market Brew's 15 years of modeling link algorithms, we believe the data shows that PageRank is NOT redistributed to the other links on a webpage when a link carries the nofollow attribute.

This means the nofollowed link is essentially treated as a special dangling link on the page, and its link flow share becomes a lost share. So whether the link is followed or not, that share of link flow is lost either way: it goes to the external site, or it falls into a black hole, so to speak.
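The evaporation effect can be illustrated with a toy PageRank calculation. The sketch below is a simplified model under stated assumptions, not Market Brew's implementation or any search engine's algorithm. Every link on a page receives an equal share, which ignores link characteristics such as relevance, size, and position. The `nofollow_redistributes` flag switches between two behaviors. When it is False, nofollowed links keep their share and that share is lost, which is the behavior Market Brew reports. When it is True, nofollowed links are skipped so that their share goes to the remaining links. `internal_pagerank`, the page names, and the damping factor of 0.85 are illustrative assumptions.

```python
def internal_pagerank(links, nofollow_redistributes, damping=0.85, iterations=100):
    """Toy power-iteration PageRank over a site's internal pages.

    links maps each internal page to a list of (target, is_followed) pairs;
    any target that is not a key of `links` is treated as external.
    Assumption: every counted link receives an equal share of the page's rank.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if nofollow_redistributes:
                # Alternative hypothesis: nofollowed links are skipped when splitting.
                counted = [(t, f) for t, f in outlinks if f]
            else:
                # Reported behavior: nofollowed links still take their share.
                counted = outlinks
            if not counted:
                continue  # dangling page: its whole share leaves the internal graph
            share = damping * rank[page] / len(counted)
            for target, followed in counted:
                if followed and target in new_rank:
                    new_rank[target] += share
                # Otherwise the share is lost to the site (nofollowed or external).
        rank = new_rank
    return rank


site = {
    "home": [("about", True), ("blog", True), ("https://partner.example/", False)],
    "about": [("home", True)],
    "blog": [("home", True), ("about", True)],
}

evaporating = internal_pagerank(site, nofollow_redistributes=False)
redistributing = internal_pagerank(site, nofollow_redistributes=True)
print("total kept, evaporating:   ", round(sum(evaporating.values()), 3))
print("total kept, redistributing:", round(sum(redistributing.values()), 3))
```

Under the evaporating assumption, the site's total internal PageRank falls below 1. The result is also identical whether the external link is followed or nofollowed, which matches the "lost either way" point.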

If you want to reduce external link loss, adding nofollow does not help, because it does not redistribute link flow to the other links on the page. Instead, adjust the characteristics that determine that link's link flow share, such as relevance, size, and position.

A better solution is to adjust the page hierarchy so that a smaller share of link flow reaches the parts of your site that contain many external links. That way, the site loses less link flow overall. To do this, find the parent pages of these theme pages and reduce the internal link value flowing to them. As before, you can do this by adjusting link characteristics or by reducing or consolidating the number of links.

Refer to the PageRank Evaporation information below:

| Method | Crawled? | Appears in SERP? | PR Evaporation? |
| --- | --- | --- | --- |
| Robots.txt | No | Generally no, though a bare URL can still be listed if other pages link to it. | Yes, all PageRank is evaporated. |
| META Robots "noindex" | Yes | No | No, if the links of the page are followed. |
| META Robots "nofollow" | Yes, if other followed links are found pointing to the target page. | Yes, if the target page receives links from other webpages. | Yes, all of the PageRank of the page is evaporated. The webpage still takes up a node in the link graph. |
| rel="nofollow" | Yes, if other followed links are found pointing to the target page. | Yes, if the target page receives links from other webpages. | Yes, some amount of PageRank of the page is evaporated. Link flow is NOT redistributed to other links on that webpage. |

Modeling the Robots Exclusion Standard in Market Brew

Market Brew follows the Robots Exclusion Standard, which includes abiding by the Robots.txt directives and any META Robots attributes that it finds within the HTML of a web page.

Pages that are blocked or otherwise restricted by the Robots Exclusion Standard can affect the Link Flow Distribution of a site.

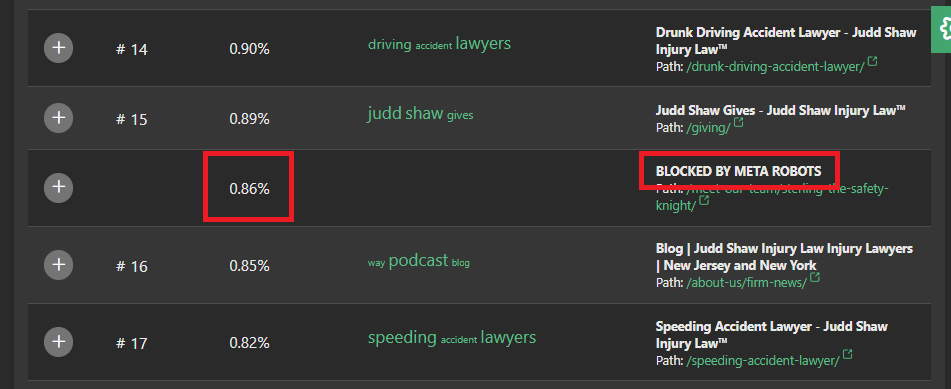

On the Link Flow Distribution screen, Market Brew users can see how a META Robots blocked page can still take up link flow within the site's link graph.

Pages that have been blocked via Robots.txt will NOT show on this screen, because they do not take up a node in the link graph. Be aware, however, that link flow pointing to these pages does NOT continue into the rest of the site. A blocked page is never crawled, so none of its links pass link flow on to the pages it links to.

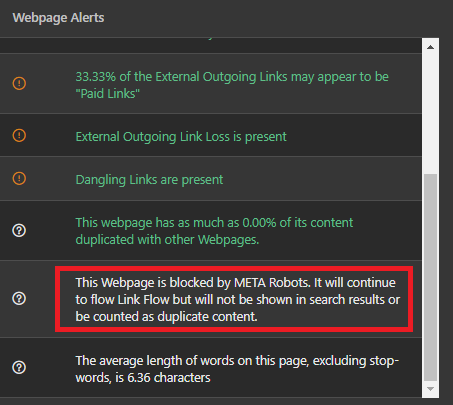

Alerts on the Webpage Scoresheet screens notify users of any Robots Exclusion that has taken place and explain its implications.

With Market Brew's advanced search engine modeling, users will know exactly what search engines see, how the link graph is affected, how duplicate content penalties are adjusted, and more.
