Using Text Classification Within SEO

Text classification is a machine learning technique that involves assigning predefined categories or labels to text data. In this article, we will explore the use of text classification in search engine optimization (SEO) and discuss its potential benefits and challenges.

We will examine common techniques for text classification in SEO and discuss how to choose the right classification algorithm for a given task. We will also address issues such as handling imbalanced datasets and missing or incomplete data.

By the end of this article, readers will have a better understanding of the role of text classification in SEO and how it can be used to improve a website's ranking on search engines.

What Is Text Classification, and How Does It Work?

Text classification is a machine learning task that involves assigning predefined categories or labels to text data. It is a supervised learning task, meaning that the model is trained on a labeled dataset, where each example is a piece of text and its corresponding label.

Some common applications of text classification include spam detection, sentiment analysis, and topic classification.

Text classification involves several steps, including preprocessing the text data, extracting features from the text, and training a classifier on the extracted features.

Before the text data can be used for classification, it must be preprocessed. This typically involves cleaning the text by removing unnecessary characters or words and converting it to a standard format such as lowercase. Preprocessing may also involve stemming or lemmatizing the text, which reduces words to a base form so that different inflections of the same word are treated as one feature. For example, a lemmatizer maps "run," "ran," and "running" to the base form "run," whereas a rule-based stemmer strips suffixes and would typically reduce "running" to "run" but leave the irregular form "ran" unchanged.
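A minimal sketch of the cleaning step might look like the following. The function name `preprocess` and the tiny stop-word list are assumptions made for illustration, and stemming or lemmatization is left out because it usually relies on a dedicated NLP library.

```python
import re

# Hypothetical stop-word list for illustration; real projects use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "is"}

def preprocess(text: str) -> list[str]:
    """Lowercase the text, strip non-letter characters, and drop stop words."""
    text = text.lower()
    # Replace digits and punctuation with spaces so words stay separated.
    text = re.sub(r"[^a-z\s]", " ", text)
    tokens = text.split()
    return [token for token in tokens if token not in STOP_WORDS]

tokens = preprocess("The Cat chased the bird!")
print(tokens)  # ['cat', 'chased', 'bird']
```

Whether stop words should be removed depends on the task; for short search queries, words such as "how" or "best" can carry useful intent information.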

Once the text data has been preprocessed, the next step is to extract features from the text. The most common method for extracting features from text is called bag-of-words (BOW). This method involves creating a vocabulary of all the unique words in the text dataset, and then representing each piece of text as a vector of word counts. For example, if the vocabulary consists of the words "cat," "dog," and "bird," and the text is "The cat chased the bird," the resulting feature vector would be [1, 0, 1]. This approach treats each word as a separate feature, and does not take into account the order of the words or their context within the text.
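The example above can be reproduced with a few lines of Python. This is only a sketch: the helper name `bag_of_words` is an assumption, and the vocabulary is fixed by hand rather than built from a dataset.

```python
import re
from collections import Counter

def bag_of_words(text: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word occurs in the text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(tokens)
    # Words outside the vocabulary ("the", "chased") are simply ignored.
    return [counts[word] for word in vocabulary]

vocabulary = ["cat", "dog", "bird"]
vector = bag_of_words("The cat chased the bird", vocabulary)
print(vector)  # [1, 0, 1]
```

Swapping the word order ("The bird chased the cat") yields the same vector, which illustrates why bag-of-words ignores word order and context.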

Other methods for extracting features from text include n-grams, which consider groups of words rather than individual words, and term frequency-inverse document frequency (TF-IDF), which takes into account the importance of each word within the text dataset.
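Both ideas can be sketched in plain Python. The version below assumes one common TF-IDF variant (term frequency as a share of the document, inverse document frequency as log(N / document frequency)); libraries often apply additional smoothing, so exact values will differ. The function names and sample queries are hypothetical.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Return all runs of n consecutive tokens, joined into strings."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def tf_idf(docs):
    """docs is a list of non-empty token lists; returns one {term: weight} dict per doc."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

docs = [
    ["cheap", "gaming", "laptops"],
    ["best", "gaming", "laptops"],
    ["cheap", "flights"],
]
bigrams = ngrams(docs[1], 2)   # ['best gaming', 'gaming laptops']
weights = tf_idf(docs)
print(bigrams)
print(weights[2])
```

In the third document, "flights" receives a higher weight than "cheap" because "cheap" also appears elsewhere in the dataset and is therefore less distinctive.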

Once the features have been extracted from the text, the next step is to train a classifier on the features. There are many different types of classifiers that can be used for text classification, including logistic regression, support vector machines (SVMs), and neural networks. The classifier is trained on the labeled dataset, and learns to predict the correct label for a given piece of text based on the features extracted from the text.

During the training process, the classifier is presented with a set of labeled examples and adjusts its parameters to maximize the accuracy of its predictions. The classifier is then tested on a separate set of examples to evaluate its performance. If the classifier performs poorly on the test set, it may be necessary to adjust the features being used or try a different classifier.

Once the classifier has been trained and tested, it can be used to classify new pieces of text. The classifier takes in the features extracted from the text and outputs a prediction for the label. The accuracy of the classifier's predictions will depend on the quality of the training data and the complexity of the classification task.
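To make the train, test, and predict cycle concrete, here is a small multinomial Naive Bayes classifier written from scratch. It is a sketch under stated assumptions, not a production implementation: the function names, the two intent labels, and the handful of search queries are all invented for illustration, and a real project would normally use an established machine learning library and far more data.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """examples is a list of (tokens, label) pairs."""
    label_counts = Counter(label for _, label in examples)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for tokens, label in examples:
        word_counts[label].update(tokens)
        vocabulary.update(tokens)
    return {"label_counts": label_counts,
            "word_counts": word_counts,
            "vocabulary": vocabulary}

def predict(model, tokens):
    """Return the label with the highest log-probability for the tokens."""
    total = sum(model["label_counts"].values())
    vocab_size = len(model["vocabulary"])
    best_label, best_score = None, -math.inf
    for label, count in model["label_counts"].items():
        score = math.log(count / total)  # log prior
        n_words = sum(model["word_counts"][label].values())
        for token in tokens:
            # Laplace (add-one) smoothing avoids zero probabilities.
            seen = model["word_counts"][label][token]
            score += math.log((seen + 1) / (n_words + vocab_size))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical labeled search queries (training set).
train = [
    ("buy cheap laptops online".split(), "transactional"),
    ("order gaming laptop today".split(), "transactional"),
    ("what is a gaming laptop".split(), "informational"),
    ("how does a laptop cooling fan work".split(), "informational"),
]
# Held-out queries used only for evaluation (test set).
test = [
    ("buy gaming laptop online".split(), "transactional"),
    ("how does a laptop work".split(), "informational"),
]

model = train_naive_bayes(train)
correct = sum(predict(model, tokens) == label for tokens, label in test)
accuracy = correct / len(test)
print(accuracy)
```

Keeping the test queries out of training is what makes the accuracy figure meaningful; with a dataset this small it says little about real-world performance.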

Text classification is a useful tool for analyzing and organizing large volumes of text data. It can be used to identify the sentiment of social media posts, filter spam emails, or classify news articles by topic. With the increasing amount of text data available, text classification will continue to be an important area of machine learning research and development.

How Is Text Classification Used in Search Engine Optimization (SEO)?

Text classification is a process of categorizing text data into predefined categories or labels. It is widely used in various fields such as natural language processing, information retrieval, and machine learning.

In search engine optimization (SEO), text classification is used to analyze and classify the content of a website or web page in order to improve its visibility and ranking in search engine results.

There are various ways in which text classification is used in SEO:

- Keyword classification: Keywords are the terms that users type into search engines to find relevant information. SEO involves identifying the most relevant and popular keywords for a particular website or web page and incorporating them into the content. Text classification is used to classify the keywords based on their relevance and popularity, and to determine the keyword density in the content. This helps to optimize the content for search engines and improve its ranking in search results.

- Content classification: Text classification is also used to classify the content of a website or web page based on its topic, genre, or purpose. This helps to identify the most relevant and popular content on the website and optimize it for search engines. For example, a website that covers various topics such as fashion, lifestyle, and technology can use text classification to identify the most popular content in each category and optimize it for search engines.

- Sentiment analysis: Text classification is also used to analyze the sentiment of a website or web page. Sentiment analysis is the process of determining the overall sentiment or emotion of a piece of text, whether it is positive, negative, or neutral. This is useful for SEO as it helps to gauge how users perceive a website or web page and its overall reputation. For example, a website with a high number of positive reviews and ratings tends to earn more trust and engagement from users, which may in turn support its visibility in search results.

- Link classification: Text classification is also used to classify the links on a website or web page based on their relevance and quality. This is important for SEO as search engines use links to determine the authority and credibility of a website or web page. High-quality links from reputable websites are more likely to improve the ranking of a website or web page in search results.

- Search query classification: Text classification is also used to classify search queries based on their user intent or purpose. This helps to optimize the content of a website or web page for specific search queries and improve its ranking in search results. For example, if a website or web page is optimized for the search query "best laptops for gaming," it is more likely to rank higher in search results for that query.

- Video classification: Once a video has been transcribed, it can be classified based on the keywords and entities that appear in the transcript. Video SEO is a specific type of SEO that uses these transcript-based classifications to help videos appear for relevant search queries.

Overall, text classification is an important tool for SEO as it helps to analyze and classify the content of a website or web page in order to optimize it for search engines and improve its ranking in search results.

By using text classification to identify the most relevant and popular keywords, content, and links, and to analyze the sentiment and intent of search queries, SEO professionals can effectively optimize their websites and web pages for search engines and drive more traffic to their sites.

What Are Some Common Techniques for Text Classification in SEO?

Text classification is a crucial aspect of search engine optimization (SEO) as it helps to categorize and organize web content according to relevant keywords and topics. This helps search engines to better understand and index a website, which can support higher search rankings and more targeted traffic.

There are several common techniques used in text classification for SEO, including:

- Keyword analysis: This involves identifying and targeting specific keywords and phrases that are relevant to the website's content and desired audience. This can be done through keyword research tools such as Google's Keyword Planner or SEMrush.

- Latent semantic analysis (LSA): LSA is a technique that uses mathematical algorithms to identify the underlying meaning and relationships between words in a document. This allows for more accurate classification of content and improved search results.

- Latent Dirichlet allocation (LDA): Similar to LSA, LDA is a mathematical algorithm that is used to identify the topics and themes present in a document. It is commonly used in text classification for SEO to improve the accuracy and relevance of search results.

- Stemming: Stemming is the process of reducing words to their base form, or stem, in order to better classify and categorize content. For example, "run," "runs," and "running" all share the stem "run"; irregular forms such as "ran" usually require lemmatization instead. This allows for more accurate classification of content, as these variants are recognized as being related and relevant to the same topic.

- Part-of-speech tagging: Part-of-speech tagging involves identifying the grammatical role of each word in a document, such as a noun, verb, or adjective. This can be used to better classify and organize content, as certain words or phrases may have different meanings depending on their grammatical role.

- Named entity recognition: This technique involves identifying and extracting specific named entities, such as people, organizations, and locations, from a document. This can be useful in classifying content and improving search results, as named entities are often relevant to specific topics or keywords.

- Sentiment analysis: Sentiment analysis involves analyzing the emotional tone of a document, such as positive, negative, or neutral. This can be used in text classification for SEO to identify and classify content based on its emotional tone, which can impact how it is perceived and ranked by search engines.

- Text clustering: Text clustering involves grouping documents together based on their content and similarity. This can be useful in text classification for SEO as it allows for more accurate and relevant search results, as similar documents are grouped together.

Overall, text classification is an important aspect of SEO as it helps to organize and categorize web content in a way that is easily understood by search engines. By utilizing techniques such as keyword analysis, LSA, LDA, stemming, part-of-speech tagging, named entity recognition, sentiment analysis, and text clustering, website owners can improve their search rankings and attract more targeted traffic to their site.

How Can Text Classification Improve a Website's Ranking on Search Engines?

Text classification is a process of categorizing text documents into different predefined categories or classes based on their content. This process can be used to improve the ranking of a website on search engines by allowing search engines to better understand and classify the content on the website.

One of the main factors that search engines use to rank websites is the relevance of the content on the website to the search query. By accurately classifying the content on a website, search engines can better understand the topics and themes of the content and how it relates to specific search queries. This can help improve the ranking of the website for relevant search queries and increase the visibility of the website on search engines.

Text classification can also help improve the user experience of a website by organizing and categorizing the content on the website into relevant categories. This can make it easier for users to find the information they are looking for and navigate the website, leading to higher engagement and a lower bounce rate. A lower bounce rate suggests that the website is providing valuable and relevant content, which may also contribute indirectly to a higher ranking.

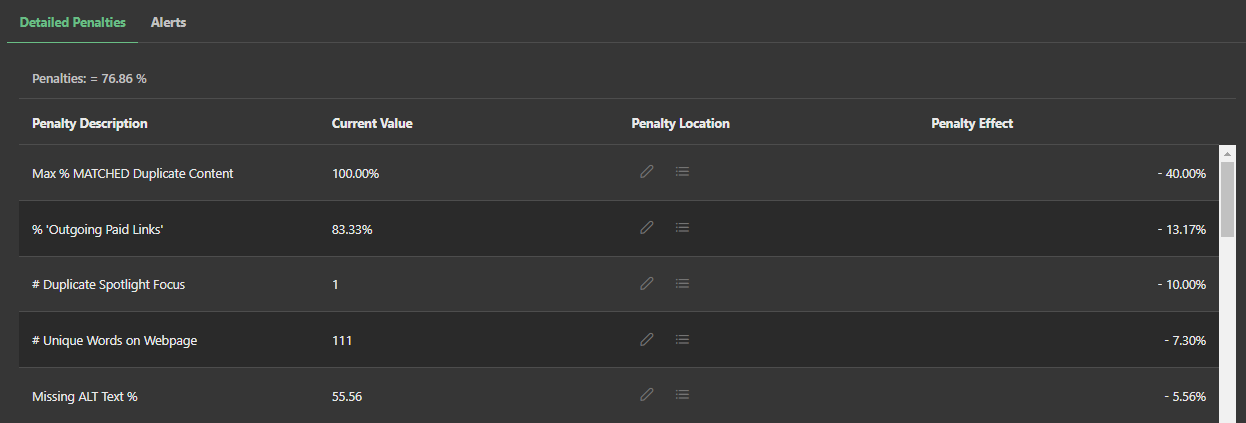

In addition, text classification can help identify duplicate or spammy content on a website, which can negatively impact the ranking of the website on search engines. By accurately classifying the content on a website, it is possible to identify and remove duplicate or spammy content, improving the overall quality and relevance of the website.

There are several techniques that can be used for text classification, including manual classification, machine learning algorithms, and natural language processing (NLP). Manual classification involves reviewing and categorizing the content on a website by hand, which can be time-consuming and error-prone. Machine learning algorithms, on the other hand, classify the content automatically based on patterns and features learned from the data. NLP uses artificial intelligence and machine learning to analyze the meaning and context of the text.

One way to improve the accuracy of text classification is to use a combination of these techniques. For example, a website could use manual classification to identify the main topics and themes of the content and then use machine learning algorithms to classify the content based on these themes. This can help improve the accuracy and efficiency of the classification process.

There are also several tools and resources available for text classification, including open-source software, cloud-based platforms, and pre-trained models. These tools can make it easier for website owners to implement text classification on their websites and improve the ranking of their website on search engines.

In conclusion, text classification can help improve the ranking of a website on search engines by making it easier for search engines to understand and classify the content on the website. This can increase the relevance and visibility of the website for relevant search queries and improve the user experience of the website. By using a combination of manual classification, machine learning algorithms, and NLP, website owners can accurately and efficiently classify the content on their website and improve the ranking of their website on search engines.

Can Text Classification Be Used to Identify Spam or Low-Quality Content on a Website?

Text classification is a machine learning technique that can be used to identify and categorize text into predefined classes or categories. This technique has been widely used in various applications such as sentiment analysis, language identification, and spam detection. In this context, we will explore the potential of using text classification to identify spam or low-quality content on a website.

Spam detection is a crucial task in the online world, as it helps to protect users from fraudulent or malicious activities. Spam emails, comments, and posts can cause a lot of inconvenience to users and also damage the reputation of a website. Therefore, it is essential to have an effective mechanism to detect and eliminate spam content. Text classification can be used to identify spam in different forms, including emails, social media posts, and online comments.

One of the primary methods to use text classification for spam detection is to create a dataset of spam and non-spam messages. The dataset should include a large number of spam and non-spam messages, and each message should be labeled as spam or non-spam. Once the dataset is prepared, a machine learning model can be trained on it to learn the characteristics of spam and non-spam messages. The model can then be used to classify new messages as spam or non-spam.

There are various features that can be used to classify spam messages using text classification. Some of the common features include the presence of certain words or phrases, the length of the message, the use of capital letters, and the presence of links or attachments. The machine learning model can be trained to identify these features and use them to classify messages as spam or non-spam.
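The features listed above can be computed with simple string operations. The sketch below is illustrative only: the function name `spam_features`, the phrase list, and the sample message are assumptions, and attachments are omitted because they are not part of the message text.

```python
import re

# Hypothetical phrase list; a real one would be derived from labeled data.
SPAM_PHRASES = ("free money", "click here", "act now")

def spam_features(message: str) -> dict:
    """Turn a raw message into a small dictionary of numeric features."""
    letters = [char for char in message if char.isalpha()]
    lowered = message.lower()
    uppercase = sum(char.isupper() for char in letters)
    return {
        "length": len(message),
        "uppercase_ratio": uppercase / len(letters) if letters else 0.0,
        "link_count": len(re.findall(r"https?://\S+", message)),
        "spam_phrase_count": sum(lowered.count(p) for p in SPAM_PHRASES),
    }

features = spam_features("CLICK HERE for FREE MONEY http://example.com")
print(features)
```

A classifier would be trained on these feature dictionaries, usually alongside word-level features, rather than on hand-written thresholds.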

The accuracy of text classification for spam detection depends on the quality of the dataset and the machine learning model used. It is essential to have a large and diverse dataset to train the model and improve its accuracy. The model should also be tested on a separate dataset to evaluate its performance. The model's performance can be improved by adjusting the hyperparameters and using advanced techniques such as deep learning.

Another deep learning technique is transfer learning, which is used in search engines for classification and labeling. Pre-trained models can be used to classify and label web pages based on their content, such as news articles, products, or videos. This information can then be used to improve the ranking of search results based on relevance, as well as to categorize search results into different categories or topics.

In addition to spam detection, text classification can also be used to identify low-quality content on a website. Low-quality content can be defined as content that does not provide value to the users or does not meet the standards of the website. Low-quality content can damage the reputation of a website and reduce its traffic and engagement.

To use text classification for identifying low-quality content, a dataset of high-quality and low-quality content should be created. The dataset should include a large number of examples of each kind, and each piece of content should be labeled as high-quality or low-quality. A machine learning model can then be trained on this dataset to learn the characteristics of high-quality and low-quality content.

There are various features that can be used to classify high-quality and low-quality content using text classification. Some of the common features include the length of the content, the use of relevant keywords, the presence of proper grammar and spelling, and the presence of credible sources. The machine learning model can be trained to identify these features and use them to classify content as high-quality or low-quality.

The accuracy of text classification for identifying low-quality content depends on the quality of the dataset and the machine learning model used. It is essential to have a large and diverse dataset to train the model and improve its accuracy. The model should also be tested on a separate dataset to evaluate its performance. The model's performance can be improved by adjusting the hyperparameters and using advanced techniques such as deep learning.

In conclusion, text classification can be used effectively to identify spam and low-quality content on a website.

How Do You Choose the Right Classification Algorithm for a Given Text Classification Task in SEO?

When it comes to text classification in SEO, choosing the right algorithm is crucial to ensure that your classification is accurate and effective. There are various algorithms available, and each has its own strengths and weaknesses, so it is essential to carefully consider the specific needs of your task in order to choose the right one.

One of the first things to consider when choosing a classification algorithm is the nature of the text you will be classifying. If your text is relatively short and straightforward, with clear and distinct categories, then a simple algorithm such as Naive Bayes or Logistic Regression may be sufficient. On the other hand, if your text is more complex, with many overlapping categories or a large amount of data, then a more sophisticated algorithm such as Support Vector Machines (SVMs) or Artificial Neural Networks (ANNs) may be required.

Another important factor to consider is the amount of data you have available for training your classification model. If you have a large amount of data, then you may be able to use more complex algorithms that benefit from more training data, such as ANNs. However, if you have a small amount of data, then you may need to opt for an algorithm that copes well with smaller datasets, such as Naive Bayes, Logistic Regression, or a linear SVM.

It is also important to consider the complexity of the classification task itself. If your task is relatively simple, with clear distinctions between categories, then a simpler algorithm such as Naive Bayes or Logistic Regression may be sufficient. However, if your task is more complex, with many overlapping categories or a large number of variables, then a more sophisticated algorithm such as SVMs or ANNs may be required.

Another key factor to consider when choosing a classification algorithm is the level of interpretability you require. Some algorithms, such as Naive Bayes and Logistic Regression, are relatively simple and easy to interpret, while others, such as SVMs and ANNs, can be more complex and difficult to understand. If you need to be able to explain and understand the results of your classification model, then you may want to choose a more interpretable algorithm.

Finally, it is important to consider the resources available to you, including your time and computing power. Some algorithms, such as SVMs and ANNs, can be time-consuming and resource-intensive to train and use, so if you have limited time or computing power, you may need to opt for a simpler algorithm.

Overall, choosing the right classification algorithm for a given text classification task in SEO requires careful consideration of the specific needs and constraints of your task. By taking into account the nature of the text, the amount of data available, the complexity of the task, the level of interpretability required, and the resources available to you, you can select an algorithm that is well-suited to your needs and will help you achieve accurate and effective classification results.

How Do You Measure the Effectiveness of a Text Classification Model in SEO?

Measuring the effectiveness of a text classification model in SEO involves evaluating how accurately the model is able to classify texts or documents into the correct categories or labels. This can be done through various metrics such as precision, recall, and F1 score, as well as through real-world applications such as keyword classification and content categorization.

One way to measure the effectiveness of a text classification model is through precision, which refers to the percentage of predicted positive instances that are actually positive. For example, if a model is predicting which texts contain a certain keyword and 90% of the texts it flags actually contain the keyword, its precision would be 90%. A high precision indicates that the model's positive predictions are reliable, but it may not be able to capture all instances of the keyword.

Another metric to consider is recall, which refers to the percentage of actual positive instances that are correctly predicted by the model. In the example above, if the model is able to correctly predict the presence of the keyword in 80% of the texts that actually contain the keyword, its recall would be 80%. A high recall indicates that the model is able to capture a high percentage of the instances of the keyword, but it may also include some false positives.

The F1 score combines precision and recall by taking the harmonic mean of the two: F1 = 2 × (precision × recall) / (precision + recall). With the precision of 90% and recall of 80% from the examples above, the F1 score is about 85%. A high F1 score indicates that both precision and recall are high, so a model with a high F1 score identifies most of the texts that contain the keyword while keeping false positives low.
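All three metrics can be computed directly from counts of true positives, false positives, and false negatives. The helper name `precision_recall_f1` and the sample labels below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# 1 = text contains the keyword, 0 = it does not (made-up labels).
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, round(f1, 3))
```

Here the model makes four positive predictions, three of them correct (precision 0.75), and finds three of the five actual positives (recall 0.6), giving an F1 score of roughly 0.667.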

In addition to these metrics, the effectiveness of a text classification model can also be measured through its real-world applications. For example, in keyword classification, a model can be used to identify the presence of specific keywords in texts and classify them accordingly. This can be useful for SEO purposes as it allows for more accurate targeting of specific keywords and can help improve the ranking of a website in search engine results.

Content categorization is another application of text classification in SEO. A model can be used to classify texts or documents into specific categories based on their content, which can help with organizing and sorting website content and improving user experience. For example, a model could classify texts as belonging to categories such as "news," "entertainment," or "finance," and this categorization could be used to help users easily find the content they are looking for on a website.

In order to measure the effectiveness of a text classification model in these applications, it is important to consider the specific goals and needs of the project. For example, in keyword classification, it may be more important to prioritize precision in order to minimize false positives and avoid targeting the wrong keywords. In content categorization, it may be more important to prioritize recall in order to ensure that all relevant texts are correctly classified.

Overall, there are various metrics and real-world applications that can be used to measure the effectiveness of a text classification model in SEO. By evaluating the precision, recall, and F1 score of a model, as well as its effectiveness in applications such as keyword classification and content categorization, it is possible to determine how well the model is able to accurately classify texts and documents for SEO purposes.

Can Text Classification Be Combined with Other SEO Techniques, Such as Keyword Analysis or Link Building?

Text classification is the process of categorizing text data into predetermined categories or labels. It is commonly used in natural language processing (NLP) and machine learning applications to extract information from unstructured data and improve search accuracy.

One way text classification can be combined with other SEO techniques is through keyword analysis. Keyword analysis involves identifying and analyzing the words and phrases that users enter into search engines to find relevant websites. By using text classification algorithms, businesses can classify and organize their website content based on keywords, making it easier for search engines to understand the context and relevance of their content.

For example, if a business has a website that sells clothing, they may use text classification to categorize their products into categories such as "women's clothing," "men's clothing," and "children's clothing." By using these labels, the business can optimize their website for specific keywords related to each category, making it easier for search engines to understand the context of the content and improve its ranking in search results.

Text classification can also be combined with link building, which is the process of acquiring backlinks from other websites to improve the credibility and authority of a website. By using text classification algorithms to analyze the content of external websites, businesses can identify websites that are relevant to their industry and potentially worth acquiring backlinks from, and can steer clear of irrelevant or low-quality sites whose links might otherwise attract search engine penalties.

For example, if a business has a website that sells outdoor gear, they may use text classification to analyze the content of external websites in the outdoor industry to identify potential link building opportunities. By acquiring backlinks from these websites, the business can improve the credibility and authority of their website, which can ultimately lead to higher search engine rankings.

In addition to keyword analysis and link building, text classification can also be combined with other SEO techniques such as content optimization and on-page optimization. Content optimization involves creating high-quality, relevant content that is optimized for specific keywords. By using text classification algorithms to classify and categorize website content, businesses can identify areas where their content may be lacking and create new content that is optimized for specific keywords.

On-page optimization involves optimizing the elements of a website that are visible to search engines, such as the title tags, meta descriptions, and header tags. By using text classification algorithms to analyze website content, businesses can identify opportunities to optimize their on-page elements for specific keywords, leading to improved search engine rankings.

Overall, text classification can be a powerful tool for businesses looking to improve their SEO efforts. By combining text classification with other SEO techniques such as keyword analysis, link building, content optimization, and on-page optimization, businesses can improve the visibility and credibility of their website, leading to increased traffic and higher search engine rankings.

How Do You Handle Imbalanced Datasets in Text Classification for SEO?

Imbalanced datasets can present a challenge in text classification for SEO, as they can skew the results and lead to inaccurate or biased predictions. In order to effectively handle imbalanced datasets in text classification for SEO, there are several approaches that can be taken.

One approach is to oversample the minority class. This involves increasing the number of instances of the minority class in the dataset by generating synthetic samples or duplicating existing ones. This can help balance the dataset and improve the performance of the classifier. However, it is important to be cautious with oversampling, as it can also lead to overfitting if not done properly.

Another approach is to undersample the majority class. This involves reducing the number of instances of the majority class in the dataset by randomly selecting a subset of the majority class samples. This can also help balance the dataset and improve the performance of the classifier. However, it is important to be careful with undersampling, as it can also lead to a loss of important information and a decrease in the overall accuracy of the classifier.
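Random oversampling and undersampling can both be sketched in a few lines. The function names and the toy dataset are assumptions for illustration; resampling should normally be applied to the training split only, so that the test set still reflects the real class distribution.

```python
import random
from collections import Counter

def group_by_label(examples):
    """Group (text, label) pairs into a dict keyed by label."""
    groups = {}
    for text, label in examples:
        groups.setdefault(label, []).append((text, label))
    return groups

def random_oversample(examples, seed=0):
    """Duplicate minority examples until every class matches the largest."""
    rng = random.Random(seed)
    groups = group_by_label(examples)
    target = max(len(items) for items in groups.values())
    balanced = []
    for items in groups.values():
        balanced.extend(items)
        balanced.extend(rng.choice(items) for _ in range(target - len(items)))
    return balanced

def random_undersample(examples, seed=0):
    """Keep a random subset of each class, sized to the smallest class."""
    rng = random.Random(seed)
    groups = group_by_label(examples)
    target = min(len(items) for items in groups.values())
    balanced = []
    for items in groups.values():
        balanced.extend(rng.sample(items, target))
    return balanced

# Toy dataset: 8 normal pages and 2 spam pages.
examples = [(f"page {i}", "ok") for i in range(8)]
examples += [("spam page 0", "spam"), ("spam page 1", "spam")]

over_counts = Counter(label for _, label in random_oversample(examples))
under_counts = Counter(label for _, label in random_undersample(examples))
print(over_counts, under_counts)
```

Oversampling here yields 8 examples per class by repeating spam pages, while undersampling yields 2 per class by discarding 6 normal pages, which shows the trade-off between overfitting to duplicates and losing information.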

A third approach is to use class weighting to adjust how the classifier treats each class. This involves assigning higher weights to the minority class samples so that misclassifying them costs more during training. Relatedly, more advanced oversampling techniques such as SMOTE (Synthetic Minority Over-sampling Technique) or ADASYN (Adaptive Synthetic Sampling) generate synthetic samples of the minority class by interpolating between existing samples in feature space rather than duplicating them. These techniques can help the classifier to better identify the minority class and improve its performance.
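One widely used weighting heuristic sets each class weight to n_samples / (n_classes × class_count), so that rarer classes receive proportionally larger weights. The sketch below assumes that heuristic; the function name and the 90/10 label split are made up for illustration.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency in the labels."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {label: n_samples / (n_classes * count)
            for label, count in counts.items()}

labels = ["ok"] * 90 + ["spam"] * 10
class_weights = balanced_class_weights(labels)
print(class_weights)
```

With 90 normal pages and 10 spam pages, each spam example is weighted 5.0 and each normal example about 0.56, so both classes contribute equally to the training loss overall.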

Another option is to use ensemble methods, which involve training multiple classifiers and combining their predictions to make a final prediction. Ensemble methods can be effective in handling imbalanced datasets, as they can better capture the complexity of the data and reduce the impact of individual classifiers that may be biased towards the majority class.

In addition to these approaches, it is also important to consider the evaluation metrics being used in text classification for SEO. In imbalanced datasets, accuracy is not always the most appropriate evaluation metric, as it can be misleading due to the disproportionate number of samples in the majority class: a classifier that always predicts the majority class can score high accuracy while never identifying the minority class. Instead, metrics such as precision, recall, and F1 score, which are computed per class and therefore show how well the minority class is handled, may be more appropriate.
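A small worked example makes the problem concrete. The sketch below assumes a hypothetical dataset of 95 "ok" pages and 5 "spam" pages, and a classifier that always predicts "ok"; the helper `precision_recall_f1` is written here for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Compute precision, recall and F1 for one class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical imbalanced labels and a classifier that always says "ok".
y_true = ["ok"] * 95 + ["spam"] * 5
y_pred = ["ok"] * 100

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, y_pred, positive="spam")
print(accuracy, precision, recall, f1)
```

Accuracy is 0.95 here even though the classifier never finds a single spam page, while recall and F1 for the spam class are both 0.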

Finally, it is important to carefully consider the underlying causes of the imbalanced dataset in text classification for SEO. Are the imbalances due to a lack of representation in the data, or are they indicative of real-world imbalances in the population? Understanding the root cause of the imbalance can help inform the appropriate approach for handling it.

In summary, imbalanced datasets can present a challenge in text classification for SEO, but there are several approaches that can be taken to handle them effectively. These include oversampling the minority class, undersampling the majority class, weighting classes during training, using ensemble methods, and choosing appropriate evaluation metrics. It is also important to understand the underlying cause of the imbalance in order to choose the appropriate approach.

How Do You Handle Missing or Incomplete Data in Text Classification for SEO?

Missing or incomplete data can be a common issue in text classification tasks, including those related to SEO. There are a few approaches you can take to handle missing or incomplete data in text classification:

- Data imputation: One approach is to try to fill in the missing data with estimates or predictions. For example, you could use a machine learning model to predict the missing values based on the other available data. This can be a useful approach if only a small share of the data is missing and if there is a clear pattern in the data that can be learned by the model.

- Data deletion: Another option is to simply delete the rows or columns that contain missing values. This can be a good approach if the missing data is not essential to the task at hand or if it represents a small fraction of the overall data.

- Data transformation: In some cases, you may be able to transform the data in a way that allows you to still use it even if it is missing or incomplete. For example, you could use techniques like binning or discretization to convert continuous data into discrete categories and add an explicit "missing" category, which allows you to keep records that lack some values.

- Data augmentation: Another option is to try to augment the data by finding additional sources of information that can be used to fill in the missing data. For example, you could use web scraping or APIs to pull in external data that supplements the data you have.

- Data synthesis: Finally, you could try synthesizing new data to fill in the missing values. For example, you could use a generative model like a deep learning network to generate new data based on the patterns and trends observed in the existing data.
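As a minimal sketch of how deletion and a very simple form of imputation can be combined, the function below drops pages that have no usable text at all and fills any single missing field with a placeholder token. The field names (`title`, `body`, `label`), the `[MISSING]` token, and the sample pages are assumptions made for illustration.

```python
def clean_pages(pages, placeholder="[MISSING]"):
    """Drop pages with no text at all; fill individual missing fields with
    a placeholder token so the remaining pages can still be used."""
    cleaned = []
    for page in pages:
        title = (page.get("title") or "").strip()
        body = (page.get("body") or "").strip()
        if not title and not body:
            continue  # deletion: nothing left to classify
        cleaned.append({
            "title": title or placeholder,  # imputation with a fixed token
            "body": body or placeholder,
            "label": page.get("label"),
        })
    return cleaned

# Hypothetical crawl records, some with missing fields.
pages = [
    {"title": "Red shoes", "body": "Buy red shoes online.", "label": "product"},
    {"title": None, "body": "How to tie laces.", "label": "guide"},
    {"title": "", "body": None, "label": "guide"},
]

cleaned = clean_pages(pages)
print(len(cleaned), cleaned[1]["title"])
```

A fixed placeholder is the crudest choice; whether it works better than dropping the record or predicting the missing text depends on the task, as noted below.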

Ultimately, the best approach for handling missing or incomplete data will depend on the specific context and goals of your text classification task. It may be necessary to try out multiple approaches and see which one works best for your particular situation.

Text Classification in Search Engine Models

Text classification is a crucial aspect of search engine models, as it allows for the organization and categorization of text data in a systematic and effective manner. In Market Brew's search engine models, text classification is utilized in various ways to improve the accuracy and efficiency of the modeled semantic algorithms, resulting in more accurate search results and better guidance for SEO teams.

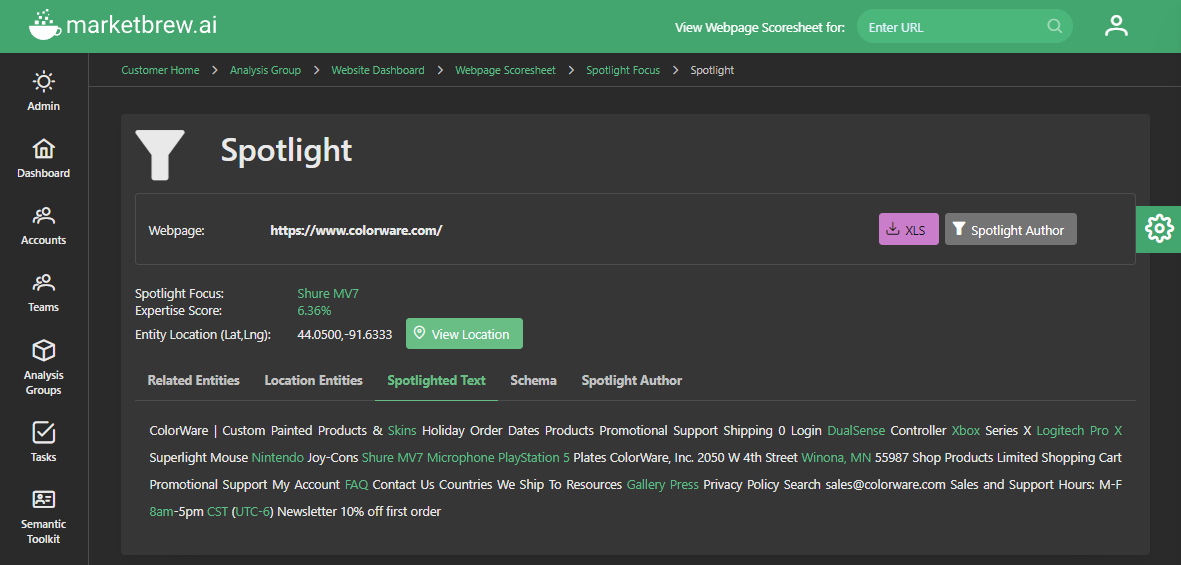

One of the key areas where text classification is used in Market Brew's search engine models is in the Spotlight Algorithm. This algorithm utilizes natural language processing (NLP) techniques to both extract named entities from text and also disambiguate those entities to the knowledge graph.

Named entity disambiguation is the process of determining the correct meaning of a named entity in the context of a given text. This is important for search engines, as it allows for more accurate and relevant search results to be returned to users.

Without text classification, it would be difficult for the Spotlight Algorithm to accurately disambiguate named entities. For example, consider the named entity "Apple." Without text classification, the search engine might not be able to determine whether the "Apple" being referred to is the fruit, the company, or something else entirely.

However, by using text classification techniques, the search engine can analyze the context of the text and accurately determine the correct meaning of the named entity.
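To illustrate the general idea of context-based disambiguation, the toy sketch below scores each candidate meaning of "Apple" by how many of its cue words appear in the surrounding text. This is not Market Brew's Spotlight Algorithm; the `SENSES` table, the cue words, and the function name `disambiguate` are all hypothetical, and a real system would rely on a trained model and a knowledge graph rather than hand-picked keywords.

```python
import re

# Hypothetical cue words for two candidate meanings of "Apple".
SENSES = {
    "Apple (company)": {"iphone", "mac", "software", "ceo", "stock", "device"},
    "apple (fruit)": {"pie", "orchard", "tree", "juice", "eat", "recipe"},
}

def disambiguate(text, senses=SENSES):
    """Pick the sense whose cue words overlap most with the text.
    Returns None when no cue word is present."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    scores = {sense: len(tokens & cues) for sense, cues in senses.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None

tech = disambiguate("Apple unveiled a new iPhone and updated its Mac software")
food = disambiguate("This apple pie recipe uses fruit from our orchard")
unknown = disambiguate("I like Apple")
print(tech, food, unknown)
```

The third call returns `None` because the sentence gives no context to decide between the meanings, which is exactly the situation where disambiguation fails without richer signals.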

In addition to named entity disambiguation, text classification is also used in Market Brew's search engine models for various other purposes. For example, it can be used to classify text data based on its relevance to a particular topic cluster or subject matter. This is important for improving the accuracy of the search engine model.

Text classification can also be used to identify spam or inappropriate content, allowing Market Brew's search engine models to filter out such content and provide a more accurate representation of the target search engine.

In addition to these uses, text classification can also be used to identify the sentiment of text data. This is useful for a variety of purposes, such as defining a basket of entities or keywords, embodied in the Spotlight Focus and Market Focus Algorithms respectively.

Overall, text classification plays a vital role in search engine models, and is used in a variety of ways to improve the accuracy and efficiency of the models.

By using text classification techniques, Market Brew's search engine models are able to extract and disambiguate named entities, classify text data based on relevance, identify spam and inappropriate content, and identify the sentiment of text data.

All of these capabilities contribute to the overall effectiveness of search engine models and help to provide users with the most relevant and accurate SEO testing platform available.
