Supervised Learning for Better Search Rankings

Search engines play a crucial role in the modern world, providing users with quick and easy access to information on the internet. However, the accuracy of search engine rankings can often be a concern, as incorrect or irrelevant results can lead to frustration and a poor user experience.

In this article, we explore the use of supervised learning techniques for improving search engine ranking accuracy. We also discuss training search engine models on labeled data and using optimization techniques such as Particle Swarm Optimization to fine-tune those models and improve their ability to mimic the behavior of a target search engine. We discuss the benefits of supervised learning in search engine development and the role of ranking data, feature engineering, and data labeling in the process.

Because incorrect or irrelevant results frustrate users and erode their trust in a search engine, ranking accuracy is central to the user experience. To improve the accuracy of search engine rankings, companies such as Market Brew have turned to supervised learning techniques to train and fine-tune their search engine models.

Supervised learning involves training a model on labeled data, in which the output (the rankings) is known in advance. By using this type of learning, search engine models are able to learn from the labeled data and make predictions about the rankings of new queries. However, the learning process is not always straightforward and the model may not always produce the desired results. To ensure that the model is optimized and able to accurately mimic the behavior of the target search engine, Market Brew uses Particle Swarm Optimization (PSO) to determine the bias and weight settings for each of the modeled algorithms.
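To make the idea concrete, the following is a minimal, hypothetical sketch in Python. It is not Market Brew's implementation, whose details are not described here. It assumes each document already has a score from each of three modeled algorithms, combines those scores with a weighted sum, and uses a basic particle swarm (each particle keeps some momentum and is pulled toward its own best position and the swarm's best position) to search for weights whose ranking disagrees with an observed target ranking on as few document pairs as possible. The documents, scores, and target ranking are invented, and only weights are tuned: in this simplified linear form, an offset shared by all documents would not change the order.

```python
import random

# Hypothetical per-algorithm scores for four documents
# (e.g. content relevance, link authority, page speed).
DOCS = {
    "a": [0.9, 0.2, 0.5],
    "b": [0.4, 0.9, 0.3],
    "c": [0.6, 0.6, 0.9],
    "d": [0.1, 0.3, 0.2],
}
# Hypothetical ranking observed on the target search engine, best first.
TARGET = ["c", "b", "a", "d"]


def rank(weights):
    """Order documents by the weighted sum of their algorithm scores."""
    def score(doc):
        return sum(w * f for w, f in zip(weights, DOCS[doc]))
    return sorted(DOCS, key=score, reverse=True)


def discordant_pairs(ranking, target):
    """Number of document pairs ordered differently from the target (0 = identical)."""
    pos = {doc: i for i, doc in enumerate(ranking)}
    return sum(
        1
        for i in range(len(target))
        for j in range(i + 1, len(target))
        if pos[target[i]] > pos[target[j]]
    )


def pso(dim, n_particles=20, iters=100, inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic particle swarm search for weights that minimize discordant pairs."""
    rng = random.Random(seed)
    xs = [[rng.uniform(0, 1) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]  # best position each particle has visited
    pbest_cost = [discordant_pairs(rank(x), TARGET) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # best position of the whole swarm
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Keep some momentum, then pull toward the personal and swarm bests.
                vs[i][d] = (inertia * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                xs[i][d] += vs[i][d]
            cost = discordant_pairs(rank(xs[i]), TARGET)
            if cost < pbest_cost[i]:
                pbest[i], pbest_cost[i] = xs[i][:], cost
                if cost < gbest_cost:
                    gbest, gbest_cost = xs[i][:], cost
    return gbest, gbest_cost


weights, cost = pso(dim=3)
print(weights, cost)
```

Counting discordant pairs (the Kendall tau distance) is just one reasonable choice of fitness function; any measure of agreement between two rankings could be swapped in.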

In this article, we will explore the role of supervised learning in search engine development and the benefits it offers in terms of improving ranking accuracy. We will also discuss the importance of ranking data, feature engineering, and data labeling in the process, and how optimization techniques such as PSO can be used to fine-tune search engine models.

What Is Supervised Learning and How Does It Differ from Unsupervised Learning?

Supervised learning is a type of machine learning in which a model is trained to make predictions based on a labeled dataset. This means that the data used to train the model includes both input features and the corresponding correct output labels. The model is then able to use this labeled dataset to make predictions about new, unseen data.

In contrast, unsupervised learning is a type of machine learning in which a model is not given any labeled data and must discover patterns and relationships in the data on its own. The model is not told what the correct output should be for a given input, and must instead rely on its own analysis of the data to make predictions.
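A toy sketch in Python can make the contrast concrete. The points and label names below are invented for illustration. The supervised learner (a nearest-centroid classifier) is given the labels and learns one centroid per label; k-means receives the same points without labels and has to find the groups itself, and the clusters it returns carry no names.

```python
import math

# Toy 2-D points forming two loose groups.
POINTS = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (5.0, 5.2), (5.3, 4.8), (4.9, 5.1)]
# Labels exist only for the supervised learner; k-means never sees them.
LABELS = ["small", "small", "small", "large", "large", "large"]


def mean(pts):
    return tuple(sum(coord) / len(pts) for coord in zip(*pts))


def fit_nearest_centroid(points, labels):
    """Supervised: learn one centroid per known label."""
    return {lab: mean([p for p, lbl in zip(points, labels) if lbl == lab])
            for lab in set(labels)}


def predict(centroids, point):
    """Assign the label whose centroid is closest."""
    return min(centroids, key=lambda lab: math.dist(point, centroids[lab]))


def kmeans(points, k=2, iters=10):
    """Unsupervised: find k groups with no labels, starting from the first k points."""
    centers = list(points[:k])
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centers[c]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers


centroids = fit_nearest_centroid(POINTS, LABELS)
print(predict(centroids, (0.9, 1.1)))  # → small
print(sorted(kmeans(POINTS)))          # two unnamed cluster centers
```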

One of the key differences between supervised and unsupervised learning is the amount of guidance provided to the model. In supervised learning, the model is given clear guidelines on what the correct output should be for a given input, and is able to use this guidance to make accurate predictions. In unsupervised learning, the model is not given any guidance and must discover patterns and relationships in the data on its own.

Another difference between the two approaches is the type of prediction that is made. In supervised learning, the model is typically trained to make a specific type of prediction, such as classifying an email as spam or non-spam, or predicting the price of a house based on its size and location. In unsupervised learning, the model may be used to identify patterns or clusters in the data, but may not be able to make specific predictions about individual input samples.

One of the primary advantages of supervised learning is that, for a well-defined prediction task with enough labeled data, it is typically more accurate and reliable than unsupervised learning. This is because the model is given clear guidelines on what the correct output should be for a given input, and its predictions can be checked directly against those known answers. In contrast, unsupervised learning has no correct answers to learn from or measure against, so the patterns it discovers may not be the ones that matter for a particular task.

There are also some specific situations in which one approach may be more appropriate than the other. Supervised learning is often used when the goal is to make specific predictions about individual input samples, such as classifying emails as spam or non-spam, or predicting the price of a house based on its size and location. Unsupervised learning is often used when the goal is to identify patterns or clusters in the data, or to reduce the dimensionality of the data.

In summary, supervised learning is a type of machine learning in which a model is trained to make predictions based on a labeled dataset, while unsupervised learning is a type of machine learning in which a model is not given any labeled data and must discover patterns and relationships in the data on its own. Supervised learning is usually the more accurate and reliable choice when labeled data exists for a specific prediction task, while unsupervised learning is useful when labels are unavailable or when the goal is to identify patterns or clusters in the data.

Can You Give an Example of a Common Application of Supervised Learning?

One common application of supervised learning is in the field of image recognition. This involves training a machine learning model on a large dataset of labeled images, such as pictures of dogs and cats. The model is then able to predict the class of a new image based on its features, such as the shape and color of the objects in the image.

For example, imagine that a company has a large database of images of dogs and cats, with each image labeled as either "dog" or "cat." The company could use supervised learning to train a machine learning model to recognize the difference between these two classes of animals.

To do this, the model, often a convolutional neural network, would be fed the labeled images, and it would learn to recognize patterns in the features of the images that are characteristic of either dogs or cats. These learned patterns are not explicit, human-readable rules, but loosely speaking they might capture cues such as the shape of the ears or the texture of the fur.

Once the model has been trained, it can be used to classify new images as either "dog" or "cat" based on these learned patterns. For example, if training was successful and the model is presented with a new image of a dog, it will respond to the same kinds of visual cues it learned from the labeled dog images and classify the image as such.

This type of image recognition is used in a variety of applications, including facial recognition systems, automated object detection in security cameras, and even in self-driving cars. In these cases, the machine learning model is able to classify objects in the real world based on the patterns it learned from the labeled images in the training dataset.

Another example of a common application of supervised learning is in the field of natural language processing (NLP). This involves training a machine learning model to understand and generate human language.

For example, a company might want to build a chatbot that can understand and respond to customer inquiries. To do this, they could use supervised learning to train a machine learning model on a large dataset of labeled text conversations. The model would learn to recognize patterns in the words and phrases used in these conversations, and it would be able to generate appropriate responses based on these patterns.

For example, if the model is presented with a customer's question about the availability of a product, it might recognize the words "availability" and "product" as indicative of a request for information and generate a response that provides the desired information.
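The intent-recognition step described above can be sketched with a tiny supervised text classifier. This is a minimal illustration under assumed conditions, not how any particular chatbot works: it uses a made-up handful of labeled messages, hypothetical intent labels ("availability" and "refund"), bag-of-words features, and a multiclass perceptron. It only decides what kind of request a message is; generating the actual reply is out of scope.

```python
from collections import defaultdict

# Hypothetical labeled conversations: (customer message, intent label).
TRAIN = [
    ("is this product in stock", "availability"),
    ("when will the product be available", "availability"),
    ("do you have this item available", "availability"),
    ("i want a refund for my order", "refund"),
    ("how do i return my order for a refund", "refund"),
    ("my order arrived broken i want my money back", "refund"),
]


def train_intents(examples, epochs=5):
    """Multiclass perceptron over bag-of-words features."""
    labels = sorted({label for _, label in examples})
    weights = {label: defaultdict(float) for label in labels}
    for _ in range(epochs):
        for text, gold in examples:
            words = text.split()
            scores = {lab: sum(weights[lab][w] for w in words) for lab in labels}
            # Strongest competing label; update if it ties or beats the correct one.
            rival = max((lab for lab in labels if lab != gold), key=scores.get)
            if scores[gold] <= scores[rival]:
                for w in words:
                    weights[gold][w] += 1.0
                    weights[rival][w] -= 1.0
    return weights


def predict_intent(weights, text):
    """Pick the label whose word weights sum highest for this message."""
    words = text.split()
    return max(sorted(weights), key=lambda lab: sum(weights[lab].get(w, 0.0) for w in words))


model = train_intents(TRAIN)
print(predict_intent(model, "is this product available"))  # → availability
print(predict_intent(model, "i want a refund"))            # → refund
```

Real systems train on far larger datasets and usually use richer models, but the principle is the same: the labels tell the learner which words should push a message toward which intent.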

NLP is used in a wide range of applications, including machine translation, text classification, and sentiment analysis. In these cases, the machine learning model is able to understand and generate human language based on the patterns it learned from the labeled text in the training dataset.

Overall, supervised learning is a powerful tool that is widely used in a variety of applications. It allows machines to learn from labeled data and to make predictions or generate responses based on the patterns they learned from that data. Whether it is recognizing images or understanding language, supervised learning is a crucial part of many modern machine learning systems.

How Does Supervised Learning Involve Training and Testing Data Sets?

Supervised learning is a type of machine learning that involves training a model on a labeled dataset in order to predict an output based on certain inputs.

This means that the data used in the training process is already labeled with the correct output, and the model is trained to recognize patterns in the data and make predictions based on those patterns.

The training and testing data sets are two essential components of the supervised learning process. The training data set is used to train the model, while the testing data set is used to evaluate the model's performance. Let's take a closer look at each of these data sets and how they are used in the supervised learning process.

The training data set is a collection of labeled data points that are used to train the model. This data set is typically quite large and represents a wide range of possible inputs and outputs. The model is trained on this data set by feeding it the input data and corresponding output labels, and adjusting the model's internal parameters to minimize the error between the predicted output and the true output.
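The idea of adjusting internal parameters to minimize the error can be sketched with the simplest possible model, a straight line y ≈ w·x + b, fit by gradient descent on the mean squared error. The data points and the learning rate below are illustrative assumptions.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]  # prediction minus truth
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w  # step against the gradient to reduce the error
        b -= lr * grad_b
    return w, b


# Illustrative training data generated from y = 2x + 1.
w, b = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```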

The testing data set is a separate collection of labeled data points that are used to evaluate the model's performance. This data set is typically smaller than the training data set, and it represents a subset of the data that the model has not seen before. The model is then fed the testing data and the predicted output is compared to the true output to evaluate the model's accuracy.

There are several reasons why it is important to use both a training and testing data set in the supervised learning process. First, the training data set allows the model to learn patterns in the data and make accurate predictions. Without a training data set, the model would not have any information to base its predictions on.

Second, the testing data set allows us to evaluate the model's performance and determine how well it generalizes to new data. If a model is evaluated only on the same data it was trained on, a high score may simply reflect memorization, and the model may not generalize well to other data. By using a separate testing data set that played no part in training, we can estimate how well the model performs on new data and identify potential issues such as overfitting.

There are a few different approaches to dividing the data into training and testing sets. One common approach is to use a fixed ratio, such as 70/30 or 80/20, where a certain percentage of the data is used for training and the remaining percentage is used for testing. Another approach is k-fold cross-validation, where the data is split into k roughly equal parts (folds) and the model is trained k times, each time on all folds but one and evaluated on the fold that was held out. Averaging the k results gives a more reliable estimate of performance than a single split and makes it easier to detect whether the model is overfitting to a particular subset of the data.
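A minimal sketch of both strategies, using only the Python standard library (the function names are our own, not from any library): a shuffled hold-out split, and k-fold index generation in which every example lands in exactly one test fold.

```python
import random


def train_test_split(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out a fraction of it for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def k_fold_indices(n, k=5):
    """Yield (train_idx, test_idx) pairs; each index appears in exactly one test fold."""
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


train, test = train_test_split(range(10), test_fraction=0.3)
print(len(train), len(test))  # → 7 3
for train_idx, test_idx in k_fold_indices(10, k=3):
    print(test_idx)  # → [0, 1, 2, 3], then [4, 5, 6], then [7, 8, 9]
```

In practice the data is usually shuffled before creating folds unless its order is meaningful (for example, time series), and libraries such as scikit-learn provide ready-made versions of both.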

Overall, the training and testing data sets play a crucial role in the supervised learning process. The training data set is used to train the model and learn patterns in the data, while the testing data set is used to evaluate the model's performance and ensure that it is able to generalize well to new data. By carefully dividing the data into these two sets and using appropriate evaluation metrics, we can ensure that the model is able to accurately predict outputs based on inputs and is not just memorizing the data.

How Is the Performance of a Supervised Learning Model Evaluated?

The performance of a supervised learning model is evaluated through various techniques to determine its accuracy, precision, and effectiveness in solving the problem it was designed to address.

One common method is through the use of a training and testing dataset. The training dataset is used to build and train the model, while the testing dataset is used to evaluate its performance.

The model is given a set of inputs and expected outputs, and its predictions are compared to the actual outputs to determine the accuracy of the model. This process can be repeated multiple times with different datasets to get a better understanding of the model's performance.

Another method is cross-validation, where the dataset is split into several subsets (folds) and the model is repeatedly trained on all but one fold and tested on the remaining one. Cross-validation does not prevent overfitting by itself, but because the model is tested on a variety of held-out data, it makes overfitting (good performance on the training data but poor performance on new data) easier to detect and gives a more reliable estimate of performance.

Tools and metrics such as the confusion matrix, precision, recall, and F1 score are often used to evaluate the performance of a supervised classification model. The confusion matrix is a table that compares the predicted outputs to the actual outputs, showing the number of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Precision measures the proportion of positive predictions that are actually positive, TP / (TP + FP), while recall measures the proportion of actual positives that the model correctly identifies, TP / (TP + FN). The F1 score is the harmonic mean of precision and recall, 2 × precision × recall / (precision + recall), with higher values indicating better performance.
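These metrics translate directly into code. This sketch, with invented labels, counts the confusion-matrix cells for a binary problem and derives precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 × precision × recall / (precision + recall) from them.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN) for a binary classification problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred, positive=1):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Invented ground truth and predictions (1 = positive class).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 0, 0, 1]
print(confusion_counts(y_true, y_pred))  # → (2, 1, 2, 3)
print(precision_recall_f1(y_true, y_pred))
```

With these example labels, TP = 2, FP = 1, FN = 2 and TN = 3, so precision is 2/3, recall is 1/2, and F1 is 4/7 (about 0.57).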

Another important factor to consider when evaluating the performance of a supervised learning model is its ability to generalize to new data. This can be tested through the use of a validation dataset, where the model is given a set of inputs it has not seen before and its predictions are compared to the actual outputs. If the model performs well on the validation dataset, it is more likely to perform well on new data.

In addition to these techniques, it is important to consider the complexity of the model and its ability to be interpretable. A model with a high level of complexity may have a better performance, but it may also be more difficult to interpret and understand the underlying relationships it is modeling. On the other hand, a simpler model may be easier to interpret but may have a lower performance.

Overall, the performance of a supervised learning model is evaluated through a combination of techniques and metrics that measure its accuracy, precision, and ability to generalize to new data. It is important to consider the complexity of the model and its interpretability when evaluating its performance and determining the best solution for a given problem.

What Are Some Common Algorithms Used for Supervised Learning?

Supervised learning is a machine learning technique in which a model is trained on a labeled dataset, which means that the data points have both input and output values.

The model is then used to predict the output for new input data. Supervised learning is useful for a wide range of applications, including image and speech recognition, natural language processing, and predictive analytics.

- Linear Regression: This is a simple and widely used algorithm for supervised learning. It is used to predict a continuous numerical value for a given input data. The model is trained by fitting a linear equation to the training data, which is used to predict the output value for new input data.

- Logistic Regression: This is another simple algorithm that is used to predict a binary outcome (either 0 or 1) for a given input data. It is used in classification tasks, such as spam detection or credit risk analysis. The model is trained by fitting a logistic curve to the training data, which is used to predict the probability of the output being 0 or 1 for new input data.

- Decision Trees: This is a popular algorithm for supervised learning, most often used to predict a categorical outcome for given input data (regression trees, a variant, predict numerical values instead). The model is trained by building a tree-like structure based on the training data, where each internal node represents a decision based on the value of a particular feature, and the leaf nodes represent the final outcome. The model is used to classify new input data by following the decision tree from the root to a leaf.

- Random Forests: This is an ensemble learning algorithm that combines the predictions of multiple decision trees to make a final prediction. The model is trained by building a large number of decision trees, each trained on a random bootstrap sample of the training data and typically considering only a random subset of the features at each split. The final prediction is made by averaging the trees' predictions (for regression) or taking the most common predicted class (for classification).

- Naive Bayes: This is a probabilistic algorithm that is used to predict a categorical outcome for given input data. It is based on Bayes' theorem, which expresses the probability of a class given the observed data in terms of the prior probability of the class and the likelihood of the data given that class: P(class | data) is proportional to P(data | class) × P(class). The "naive" part is the assumption that the features are conditionally independent given the class. The model is trained by estimating the prior probability of each class and the likelihood of each feature value given each class, which are then used to predict the class for new input data.

- K-Nearest Neighbors (KNN): This is a non-parametric algorithm that is most often used to predict a categorical outcome for given input data (it can also be used for regression by averaging the neighbors' values). Training consists of storing the training data; to make a prediction for a new input, the algorithm finds the K training points closest to it under a distance measure such as Euclidean distance. The final prediction is made by taking the majority class among the K nearest neighbors.

- Support Vector Machines (SVMs): This is a powerful algorithm for supervised learning that is used to classify data points into two or more categories. The model is trained by finding the hyperplane that maximally separates the data points of different classes. The model is then used to classify new input data by finding the side of the hyperplane on which the data point lies.

- Artificial Neural Networks (ANNs): This is a complex algorithm that is inspired by the structure and functioning of the human brain. It consists of multiple layers of interconnected neurons, which are trained using a variety of techniques such as backpropagation. ANNs are used for a wide range of applications, including image and speech recognition (convolutional neural networks), natural language processing, and predictive analytics.

- Deep Learning: This is a subset of machine learning that uses deep neural networks (networks with many layers) to learn complex patterns in data. It has been successful in many applications, including image and speech recognition, natural language processing, and predictive analytics. The main difference between deep learning and other supervised learning algorithms is the depth of the network and the ability to learn hierarchical representations of data.

- Boosting: This is an ensemble learning technique that combines the predictions of multiple weak models to create a strong model. The weak models are typically simple models, such as shallow decision trees, that are trained sequentially, with each new model focusing on the errors of the ones before it (for example, by reweighting misclassified examples in AdaBoost or by fitting the remaining errors in gradient boosting). The final prediction is a weighted combination of the weak models' predictions. Boosting algorithms include AdaBoost, Gradient Boosting, and XGBoost.

- Stochastic Gradient Descent (SGD): This is an optimization algorithm that is used to train many supervised learning models, including linear and logistic regression, SVMs, and ANNs. It iteratively updates the model parameters using the gradient of the loss function computed on a single example or a small batch of examples, rather than on the whole dataset. SGD is popular due to its simplicity and efficiency, but it is sensitive to the choice of learning rate and, for non-convex losses such as those of neural networks, to the initial parameter values, and it may not converge to the global minimum of the loss function.

- Online Learning: This is a type of supervised learning in which a model is trained on a stream of data, where each data point is processed and used to update the model as it arrives. Online learning algorithms are useful for applications where the data is too large to be processed all at once, or where the data changes over time. Examples of online learning algorithms include the Perceptron, logistic regression trained with SGD, and Adaline.
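Several entries in this list meet in one small example: logistic regression trained by SGD, one example at a time, which is also how it can be used as an online learner. This is a sketch with hypothetical spam features and a fixed learning rate. To keep it short, it loops over a fixed list several times, whereas a true online learner would see each example once as it arrives from the stream and apply the same single update.

```python
import math

# Hypothetical email features: [number of links, contains the word "free" (0/1)];
# label 1 = spam, 0 = not spam.
DATA = [([0.0, 0.0], 0), ([1.0, 0.0], 0), ([0.0, 1.0], 0),
        ([3.0, 1.0], 1), ([4.0, 1.0], 1), ([5.0, 0.0], 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sgd_logistic(examples, n_features, lr=0.5, epochs=200):
    """Logistic regression fit by SGD on the log loss, one example per update."""
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        for x, y in examples:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            g = p - y  # gradient of the log loss with respect to the linear score
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


def predict_proba(w, b, x):
    """Estimated probability that x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


w, b = sgd_logistic(DATA, n_features=2)
print(predict_proba(w, b, [4.5, 1.0]))  # well above 0.5 for this spam-like input
```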

In summary, there are many algorithms used for supervised learning, which are suitable for different types of data and applications. Some common algorithms include linear and logistic regression, decision trees, random forests, naive Bayes, KNN, SVMs, ANNs, deep learning, boosting, SGD, and online learning. Choosing the right algorithm for a particular problem involves considering the characteristics of the data and the desired performance of the model.
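Because the KNN entry is easy to misread, here is a minimal sketch of it: "training" just stores the labeled points, and prediction is a majority vote among the k closest stored points by Euclidean distance, with no clustering step. The points and labels are toy data.

```python
import math
from collections import Counter

# Toy labeled points: (features, label).
TRAIN = [((1, 1), "spam"), ((1, 2), "spam"), ((2, 1), "spam"),
         ((8, 8), "ham"), ((8, 9), "ham"), ((9, 8), "ham")]


def knn_predict(train, query, k=3):
    """Majority label among the k training points closest to `query`."""
    # "Training" is just storing the data; all the work happens at prediction time.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_predict(TRAIN, (1.5, 1.5)))  # → spam
print(knn_predict(TRAIN, (8.5, 8.2)))  # → ham
```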

How Does Supervised Learning Handle Missing or Incomplete Data?

Supervised learning is a type of machine learning where a model is trained using labeled data to predict an output based on a set of input features. One common issue that can arise in supervised learning is missing or incomplete data, which can lead to inaccurate predictions and poor model performance.

There are a few different approaches that can be taken to handle missing or incomplete data in supervised learning. One option is to simply ignore any missing data and only use the available data to train the model. This can be effective if the missing data is relatively small and randomly distributed throughout the dataset. However, if the missing data is significant or concentrated in certain areas, this approach can lead to biased or unreliable predictions.

Another option is to use imputation techniques to fill in the missing values. This can be done using methods such as mean imputation, where the missing values are replaced with the mean of the available data for that feature, or using more advanced techniques such as multiple imputation, which uses multiple iterations to estimate the missing values. While imputation can help improve the accuracy of the model, it is important to keep in mind that the imputed values are estimates and may not accurately reflect the true values.
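Mean imputation is straightforward to sketch. In this illustration, missing values are represented as None (an assumption about the data format), and each gap is filled with the mean of the observed values in the same column.

```python
def mean_impute(rows):
    """Replace None in each column with the mean of that column's observed values."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [r[j] for r in rows if r[j] is not None]
        # Fallback of 0.0 for a column with no observed values (an arbitrary choice).
        means.append(sum(observed) / len(observed) if observed else 0.0)
    return [[means[j] if v is None else v for j, v in enumerate(r)] for r in rows]


rows = [[1.0, None], [3.0, 4.0], [None, 8.0]]
print(mean_impute(rows))  # → [[1.0, 6.0], [3.0, 4.0], [2.0, 8.0]]
```

In a real pipeline, the column means should be computed on the training data only and then reused for the test data, so that no information from the test set leaks into training.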

Another approach that can be taken is to use an algorithm that is better suited to handling missing data. Some implementations of decision trees and tree ensembles handle missing values natively, for example by using surrogate splits (as in CART) or by learning a default branch for missing values during training (as in XGBoost). Other algorithms, such as k-nearest neighbors, can be used to estimate missing values based on the values of similar examples in the dataset.
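The k-nearest-neighbors idea for filling gaps can be sketched as follows. This is a simplified illustration with made-up rows and None marking missing values: distances use only the columns observed in both rows and assume the features are already on comparable scales, and each missing value is replaced by the average of that column over the k nearest rows that have it.

```python
import math


def knn_impute(rows, k=2):
    """Fill each None with the mean of that column over the k nearest rows that observe it."""
    filled = [list(r) for r in rows]  # work on a copy; the input is left unchanged
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = []
            for other_idx, other in enumerate(rows):
                if other_idx == i or other[j] is None:
                    continue
                # Compare only on columns both rows actually have.
                shared = [(a, b) for a, b in zip(row, other) if a is not None and b is not None]
                if not shared:
                    continue
                dist = math.sqrt(sum((a - b) ** 2 for a, b in shared))
                candidates.append((dist, other[j]))
            nearest = sorted(candidates)[:k]
            if nearest:
                filled[i][j] = sum(v for _, v in nearest) / len(nearest)
    return filled


ROWS = [[1.0, 2.0, 3.0], [1.1, 2.1, None], [9.0, 9.0, 9.0], [1.2, 1.9, 3.4]]
filled = knn_impute(ROWS, k=2)
print(filled[1][2])  # average of the values from the two closest rows (3.0 and 3.4)
```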

In addition to these approaches, it is also important to consider the reasons for the missing data. If the missing data is due to a systematic bias or error in the data collection process, addressing this issue at the source can help improve the accuracy of the model. For example, if the missing data is due to a particular group of people not being included in the dataset, it may be necessary to collect additional data to ensure that the model is representative of the full population.

Overall, there are a variety of ways to handle missing or incomplete data in supervised learning. The best approach will depend on the specific circumstances of the dataset and the goals of the model. It is important to carefully consider the limitations and potential biases of each approach and to choose the one that is most appropriate for the given situation.

Can You Describe the Role of Feature Engineering in Supervised Learning?

Feature engineering is a crucial step in the process of supervised learning, which is a type of machine learning that involves training a model on labeled data to predict future outcomes or classify new data.

In this process, feature engineering involves selecting, creating, and modifying the features or variables that will be used to train the model.

The role of feature engineering is to improve the performance of the model by enhancing the relevance and quality of the features. This involves identifying and selecting the most important features that will have the greatest impact on the model's predictions. It also involves creating new features by combining existing features or extracting features from raw data, such as text or images.

One of the main challenges in feature engineering is finding the right balance between including too many or too few features. Including too many features can lead to overfitting, which means the model becomes too complex and performs poorly on new data. On the other hand, including too few features can result in underfitting, which means the model is not able to capture the underlying patterns in the data and has poor performance.

In addition to selecting and creating the right features, feature engineering also involves preprocessing and normalizing the data to make it more suitable for the model. This can include scaling the data to the same range, handling missing values, and encoding categorical variables.
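Two of these preprocessing steps, min-max scaling and one-hot encoding, are sketched below with hypothetical column values (page word counts and a device-type category).

```python
def min_max_scale(values):
    """Rescale a numeric column to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Encode a categorical column as one 0/1 indicator column per category."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


print(min_max_scale([10, 20, 30]))               # → [0.0, 0.5, 1.0]
print(one_hot(["mobile", "desktop", "mobile"]))  # → (['desktop', 'mobile'], [[0, 1], [1, 0], [0, 1]])
```

The minimum, maximum, and category list should be taken from the training data and then applied unchanged to new data.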

Another important aspect of feature engineering is feature selection, which involves identifying and removing redundant or irrelevant features that do not contribute to the model's predictions. This is important because including unnecessary features can lead to increased complexity and decreased model performance.

There are several techniques that can be used for feature selection, such as filtering methods, which select features based on statistical properties, and wrapper methods, which evaluate the performance of different combinations of features.
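A simple filter method might score each feature by the absolute value of its Pearson correlation with the target and keep those above a threshold. The sketch below does exactly that; the feature names, values, and the 0.3 threshold are arbitrary choices for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient (0.0 if either input is constant)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def correlation_filter(features, target, threshold=0.3):
    """Keep the names of features whose |correlation| with the target meets the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]


# Hypothetical feature columns and target values.
FEATURES = {"word_count": [100, 200, 300, 400], "noise": [1, 5, 5, 1]}
TARGET = [1, 2, 3, 4]
print(correlation_filter(FEATURES, TARGET))  # → ['word_count']
```

Because each feature is scored in isolation, a filter like this can miss features that are only useful in combination or that relate to the target non-linearly; wrapper methods address that at a higher computational cost.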

Overall, feature engineering plays a crucial role in supervised learning by ensuring that the model has the right features to make accurate predictions or classifications. By carefully selecting and preprocessing the data, and removing unnecessary or irrelevant features, the model can be more effective and accurate in its predictions.

How Does Supervised Learning Handle Multiclass Classification Problems?

Supervised learning is a type of machine learning where a model is trained on a labeled dataset in order to make predictions on new, unseen data. One common task in supervised learning is multiclass classification, where the goal is to classify data points into one of several classes.

Handling multiclass classification problems can be challenging, but there are several approaches that can be used to effectively solve these problems.

One approach to multiclass classification is to use a one-vs-rest (OvR) strategy, also known as one-vs-all. This approach involves training a separate binary classifier for each class, where the classifier is trained to predict whether a data point belongs to that class or to any of the others. For example, if there are four classes, four classifiers would be trained: class 1 versus the rest, class 2 versus the rest, class 3 versus the rest, and class 4 versus the rest. During the prediction phase, all four classifiers are applied to the new data point and the class whose classifier gives the highest prediction score is chosen as the final prediction.

Another approach to multiclass classification is to use a one-vs-one (OvO) strategy, where a separate binary classifier is trained for every pair of classes. For example, if there are four classes, six classifiers would be trained, each one attempting to predict whether a data point belongs to class 1 or class 2, class 1 or class 3, class 1 or class 4, class 2 or class 3, class 2 or class 4, and class 3 or class 4. During the prediction phase, all six classifiers are applied to the new data point and the class with the most votes is chosen as the final prediction.
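The one-vs-one voting scheme can be sketched with deliberately trivial binary classifiers. In this toy example (one numeric feature, three made-up classes), each pairwise classifier simply assigns a point to whichever of its two classes has the closer mean; prediction collects one vote per pair and returns the class with the most votes. A small helper also shows how the number of classifiers grows: k for OvR versus k(k-1)/2 for OvO.

```python
from collections import Counter
from itertools import combinations

# Toy training data: one numeric feature, three made-up classes.
TRAIN = {"a": [1.0, 1.5, 2.0], "b": [5.0, 5.5, 6.0], "c": [9.0, 9.5, 10.0]}


def mean(xs):
    return sum(xs) / len(xs)


def train_ovo(train):
    """One toy binary classifier per pair of classes, stored as the two class means."""
    return {(c1, c2): (mean(train[c1]), mean(train[c2]))
            for c1, c2 in combinations(sorted(train), 2)}


def predict_ovo(models, x):
    """Each pairwise classifier votes for the class whose mean is closer to x."""
    votes = Counter()
    for (c1, c2), (m1, m2) in models.items():
        votes[c1 if abs(x - m1) <= abs(x - m2) else c2] += 1
    return votes.most_common(1)[0][0]


def classifier_counts(k):
    """Number of binary classifiers needed for k classes: (OvR, OvO)."""
    return k, k * (k - 1) // 2


models = train_ovo(TRAIN)
print(predict_ovo(models, 5.2))  # → b (votes: b twice, a once)
print(classifier_counts(4))      # → (4, 6)
```

Ties in the vote (possible once there are three or more classes) need an explicit rule; here ties go to whichever class received its first vote earliest, which is arbitrary.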

Both the OvR and OvO approaches have their advantages and disadvantages. The OvR approach is simpler and needs fewer classifiers (k for k classes, versus k(k-1)/2 for OvO), but each classifier must separate one class from all the others combined, which can make its task harder and its training data imbalanced. The OvO approach requires more classifiers, but each one is trained only on examples from two classes and can focus on the boundary between them, which can make it more accurate for some problems.

A related but distinct problem is multi-label classification, in which each data point can belong to several classes at once. For example, a photo of a person might belong to both the "face" and "outdoor" classes. Multi-label problems are often handled by training one binary classifier per label, using algorithms such as support vector machines (SVMs) or decision trees, or with methods designed specifically for multi-label output.

Finally, there are also algorithms specifically designed for multiclass classification, such as multinomial logistic regression and multi-class linear discriminant analysis (LDA). These algorithms handle multiple classes directly, without the need for the OvR or OvO strategies; multinomial logistic regression, for example, computes a score for every class and converts the scores into probabilities with the softmax function. However, depending on the algorithm, they can be more complex to implement and may require more data to train effectively.
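For multinomial logistic regression, the final step from per-class scores to probabilities is the softmax function. This sketch assumes the weights and biases have already been learned; the numbers used in the example are made up.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability; the result is unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_multinomial(weights, biases, x):
    """One weight vector and bias per class; returns a probability for each class."""
    scores = [sum(w * xi for w, xi in zip(wk, x)) + bk for wk, bk in zip(weights, biases)]
    return softmax(scores)


# Made-up parameters for three classes and two features.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
B = [0.0, 0.0, 0.0]
probs = predict_multinomial(W, B, [2.0, 0.5])
print(probs)  # class 0 gets the highest probability (its score is 2.0 vs 0.5 and -2.5)
```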

In conclusion, there are several approaches that can be used to handle multiclass classification problems in supervised learning. The most appropriate approach will depend on the specific characteristics of the dataset and the goals of the model. By understanding the pros and cons of each approach, data scientists can choose the most effective strategy for their particular problem.

How is Supervised Learning Used in Search Engines to Improve the Relevance of Search Results?

Supervised learning is a type of machine learning where the algorithm is trained using labeled data. In the context of search engines, this means that the algorithm is trained using a set of search queries and their corresponding relevant search results.

The algorithm then uses this training data to predict the relevance of search results for future queries.

One way in which supervised learning is used in search engines to improve the relevance of search results is through the use of ranking algorithms. Ranking algorithms are used to determine the order in which search results are displayed to the user. These algorithms use a variety of factors, including the relevance of the search result to the query, the authority and credibility of the website, and the user's previous search history.

One example of a supervised learning algorithm used in ranking is the Support Vector Machine (SVM). SVM algorithms are trained using labeled data, where the search results are labeled as either relevant or not relevant to the query. The algorithm then uses this training data to predict the relevance of search results for future queries.
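The SVM formulation above treats each result independently as relevant or not relevant. A well-known alternative for ranking, often called Ranking SVM, instead learns from pairs of results for the same query, requiring the more relevant result to score higher. The following is a minimal sketch of that pairwise idea, not any search engine's actual model: a linear scoring function trained by subgradient descent on an L2-regularized pairwise hinge loss, with invented features (a text-match score and a link score) and invented graded relevance labels.

```python
# Hypothetical training data: for each query, (features, graded relevance) per result.
# Features: [text-match score, link score]; higher relevance = better result.
QUERIES = [
    [([0.9, 0.2], 2), ([0.5, 0.5], 1), ([0.1, 0.3], 0)],
    [([0.8, 0.6], 2), ([0.2, 0.9], 0), ([0.6, 0.1], 1)],
]


def score(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_pairwise_ranker(queries, dim, lr=0.1, reg=0.01, epochs=100):
    """Subgradient descent on reg/2*||w||^2 + max(0, 1 - w·(x_a - x_b))
    for every pair where result a is more relevant than result b."""
    w = [0.0] * dim
    for _ in range(epochs):
        for docs in queries:
            for xa, ra in docs:
                for xb, rb in docs:
                    if ra <= rb:
                        continue  # only pairs where a should outrank b
                    diff = [a - b for a, b in zip(xa, xb)]
                    violated = score(w, diff) < 1  # pair is inside the margin
                    w = [wi - lr * (reg * wi - (d if violated else 0.0))
                         for wi, d in zip(w, diff)]
    return w


w = train_pairwise_ranker(QUERIES, dim=2)
for docs in QUERIES:
    ranked = sorted(docs, key=lambda doc: score(w, doc[0]), reverse=True)
    print([rel for _, rel in ranked])  # relevance labels in the learned order
```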

Another way in which supervised learning is used in search engines is through the use of classifiers. Classifiers are algorithms that are used to classify search results into different categories or groups. For example, a classifier might be used to classify search results as either relevant or not relevant to the query, or as being related to a specific topic or category.

One example of a classifier used in search engines is the Naive Bayes classifier. This algorithm is trained using labeled data, where the search results are labeled with a specific category or topic. The algorithm then uses this training data to predict the category or topic of search results for future queries.
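A minimal multinomial Naive Bayes topic classifier fits in a few dozen lines. The texts and topic labels below are invented; the model combines the log prior of each topic with the log likelihood of each word given the topic, using add-one (Laplace) smoothing so that an unseen word does not zero out a topic's probability.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled texts: (text, topic).
DOCS = [
    ("best pasta recipe with tomato sauce", "cooking"),
    ("easy bread recipe for beginners", "cooking"),
    ("how to bake chocolate cake", "cooking"),
    ("cheap flights to paris", "travel"),
    ("best hotels near the beach", "travel"),
    ("travel guide for rome and paris", "travel"),
]


def train_nb(docs):
    """Count documents per topic and word occurrences per topic."""
    class_counts = Counter(topic for _, topic in docs)
    word_counts = defaultdict(Counter)
    for text, topic in docs:
        word_counts[topic].update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab


def predict_nb(model, text):
    """Return the topic with the highest log posterior (up to a shared constant)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best, best_lp = None, -math.inf
    for topic in sorted(class_counts):
        total_words = sum(word_counts[topic].values())
        lp = math.log(class_counts[topic] / total_docs)  # log prior
        for w in text.lower().split():
            # log P(word | topic) with add-one smoothing
            lp += math.log((word_counts[topic][w] + 1) / (total_words + len(vocab)))
        if lp > best_lp:
            best, best_lp = topic, lp
    return best


model = train_nb(DOCS)
print(predict_nb(model, "chocolate bread recipe"))  # → cooking
print(predict_nb(model, "hotels in paris"))         # → travel
```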

In addition to ranking algorithms and classifiers, supervised learning is also used in search engines through the use of collaborative filtering algorithms. Collaborative filtering algorithms are used to recommend search results to users based on the search history and preferences of other users.

One example of a collaborative filtering technique used in search engines is matrix factorization. It is trained using labeled data, where search results are labeled with a specific rating or score, and it uses this training data to predict the rating or score of search results for future queries.
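The core of matrix factorization can be sketched as follows. This illustration assumes ratings arrive as (user, item, value) triples for observed entries only; it learns a short vector of factors for every user and item by SGD so that their dot product approximates each observed rating, and the same dot product then predicts the missing entries. The ratings are made up, and what counts as a "rating" in a search setting (for example, click-based scores) is an assumption here rather than something the text specifies.

```python
import math
import random

# Hypothetical observed ratings as (user, item, rating); other pairs are unobserved.
RATINGS = [(0, 0, 5.0), (0, 1, 3.0), (0, 2, 1.0), (1, 0, 4.0),
           (1, 2, 1.0), (2, 1, 4.0), (2, 2, 2.0)]


def factorize(ratings, n_users, n_items, k=2, lr=0.05, reg=0.02, epochs=500, seed=0):
    """Learn k latent factors per user and item so that their dot products
    approximate the observed ratings (SGD with L2 regularization)."""
    rng = random.Random(seed)
    P = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def predict(P, Q, u, i):
    """Predicted rating: dot product of the user and item factor vectors."""
    return sum(p * q for p, q in zip(P[u], Q[i]))


def rmse(P, Q, ratings):
    return math.sqrt(sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings))


P, Q = factorize(RATINGS, n_users=3, n_items=3)
print(round(rmse(P, Q, RATINGS), 3))  # training error on the observed ratings
print(round(predict(P, Q, 1, 1), 2))  # estimate for an unobserved user/item pair
```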

Overall, supervised learning plays a significant role in improving the relevance of search results. By training algorithms on labeled data, search engines can better predict which results are relevant to a query, which leads to a better user experience and greater satisfaction with the search engine.

Can You Discuss the Ethical Considerations of Using Supervised Learning in Search Engines and Other Applications?

Supervised learning is a type of machine learning in which a model is trained on a labeled dataset, meaning that the data has already been labeled with the correct output. This allows the model to make predictions based on the relationships it learns from the data.

Supervised learning is common in search engines and other applications, but it raises several ethical concerns.

One ethical consideration is the potential for bias in the data used to train the model. If the training data is biased, the model is likely to inherit that bias and may make incorrect or unfair predictions. For example, if a search engine ranks results with a supervised learning model trained predominantly on data from white, middle-class users, it may rank results differently for users of other races or socio-economic classes, giving some users an unfair advantage and others a disadvantage. To address this, the training data should be representative of the diverse population that will use the search engine or application.

Another ethical consideration is that supervised learning models can perpetuate existing societal biases. For example, a model trained on job resumes and past hiring outcomes may be more likely to recommend male candidates for certain positions, even when female candidates are equally qualified, because men were more often hired for those positions in the past and the model has learned that pattern. To address this, the training data should be examined carefully to make sure the model does not reproduce existing biases.

A third ethical consideration is the potential for supervised learning models to be used for surveillance or other unethical purposes. For example, a model trained on data from social media platforms may be used to monitor and track individuals, or to target them with specific advertisements. To prevent this type of misuse, it is important to establish clear guidelines for the use of supervised learning models and to ensure that they are used ethically and transparently.

Finally, there is the potential for supervised learning models to be used to make decisions that have a significant impact on people's lives. For example, a model trained on data from credit reports may be used to decide whether or not to grant someone a loan. In these cases, it is important to ensure that the model is transparent and that the decision-making process is explainable, so that individuals can understand how the model arrived at its decision and can appeal if they believe the decision is unfair.

In conclusion, using supervised learning in search engines and other applications raises several ethical concerns: bias in the training data, the perpetuation of existing societal biases, misuse for surveillance or other unethical purposes, and automated decisions that significantly affect people's lives. Addressing them requires representative and diverse training data, careful examination of that data for existing biases, clear guidelines for how supervised learning models may be used, and a decision-making process that is transparent and explainable.

Supervised Learning in Market Brew

Market Brew is a company that specializes in developing search engine models for in-house SEO teams and world-class digital marketing agencies. A key feature of its search engine models is the ability to mimic the behavior of any search engine in the world. To achieve this, Market Brew combines supervised learning and optimization techniques to train and fine-tune its search engine model.

The first step is to gather ranking data for a target search engine, for example from an SEO data platform such as SEMRush. This data is used to label the output of Market Brew's search engine, giving the model a reference point to learn from. For example, if the target search engine ranks a particular webpage as the top result for a certain query, the model is trained to associate that ranking with a high score.
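The source does not say exactly how target rankings become training labels. One simple, hypothetical scheme is sketched below: map each position to a graded score (top result highest) and also derive the (better, worse) pairs implied by the ordering, which pairwise ranking methods train on. The URLs are placeholders.

```python
def ranks_to_labels(target_ranking):
    """Map URLs (best first) to graded scores: 1.0 for the top result, down to 1/n."""
    n = len(target_ranking)
    return {url: (n - position) / n for position, url in enumerate(target_ranking)}


def preference_pairs(target_ranking):
    """Every (better, worse) pair implied by the target ordering."""
    return [
        (target_ranking[i], target_ranking[j])
        for i in range(len(target_ranking))
        for j in range(i + 1, len(target_ranking))
    ]


# Placeholder results for one query, in the target engine's order
ranking = ["example.com/a", "example.com/b", "example.com/c", "example.com/d"]
print(ranks_to_labels(ranking))
# {'example.com/a': 1.0, 'example.com/b': 0.75, 'example.com/c': 0.5, 'example.com/d': 0.25}
print(len(preference_pairs(ranking)))  # 6
```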

Labeled data alone does not guarantee that the model produces the desired rankings. To make it mimic the target search engine as closely as possible, Market Brew uses Particle Swarm Optimization (PSO) to determine the bias and weight settings for each of the modeled algorithms.

PSO is an optimization technique that simulates a swarm of particles, each representing a candidate solution. The particles move through the search space, and each particle is pulled toward the best position it has found itself and the best position found by its neighbors (or by the whole swarm). Repeating this process lets the swarm converge on a good solution; because PSO is a heuristic, it is not guaranteed to find the global optimum.

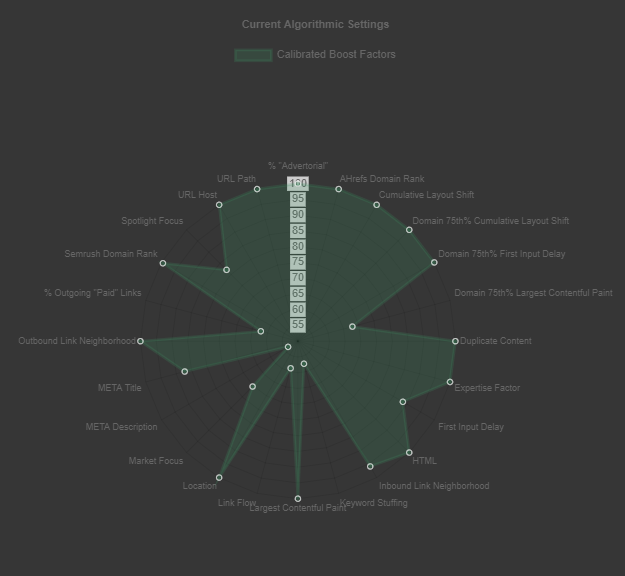
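The loop described above can be sketched as a global-best PSO, in which every particle is pulled toward its own best position and the single best position found by the swarm. This is a generic textbook variant, not Market Brew's implementation; the inertia and acceleration coefficients (0.7, 1.5, 1.5) are common illustrative choices, and the toy objective simply has a known minimum at (1, -2).

```python
import random


def pso_minimize(objective, dim, bounds, n_particles=30, iterations=200,
                 inertia=0.7, c_personal=1.5, c_social=1.5, seed=0):
    """Global-best particle swarm optimization (minimization)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]  # each particle's own best position
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    swarm_pos, swarm_val = best_pos[g][:], best_val[g]  # best seen by the swarm

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity mixes momentum, own experience, and swarm experience
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_social * r2 * (swarm_pos[d] - pos[i][d]))
                # Move, keeping the particle inside the search space
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i][:], val
                if val < swarm_val:
                    swarm_pos, swarm_val = pos[i][:], val
    return swarm_pos, swarm_val


def sphere(p):
    """Toy objective with its minimum at (1, -2)."""
    return (p[0] - 1) ** 2 + (p[1] + 2) ** 2


best, value = pso_minimize(sphere, dim=2, bounds=(-5.0, 5.0))
print([round(x, 3) for x in best], value)
```

PSO only compares objective values and never needs a gradient, which is what makes it usable on objectives that are noisy or piecewise constant.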

In the case of Market Brew's search engine model, the particles represent different combinations of bias and weight settings for the modeled algorithms. The objective of the optimization is to find the combination that best matches the ranking data from the target search engine. PSO lets Market Brew fine-tune the model so that it closely mimics the behavior of the target search engine.
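A hypothetical objective for this search is sketched below. The source does not describe how bias and weight are combined, so the sketch assumes each modeled algorithm's signal is shifted by its bias, clipped at zero, and scaled by its weight. A particle's position is the list of weights followed by the list of biases, and its fitness is the fraction of target-ordered pairs that the model puts in the wrong order (0.0 is a perfect match). The page signals and target ranking are invented.

```python
def model_scores(pages, weights, biases):
    """Combine per-algorithm signals into one score per URL.

    Hypothetical rule: each signal is shifted by its bias, clipped at zero,
    then scaled by its weight.
    """
    return {
        url: sum(w * max(0.0, s - b) for w, b, s in zip(weights, biases, signals))
        for url, signals in pages.items()
    }


def pairwise_disagreement(target_ranking, scores):
    """Fraction of (better, worse) pairs in the target order that the model gets wrong."""
    pairs = [
        (target_ranking[i], target_ranking[j])
        for i in range(len(target_ranking))
        for j in range(i + 1, len(target_ranking))
    ]
    wrong = sum(1 for better, worse in pairs if scores[better] <= scores[worse])
    return wrong / len(pairs)


def fitness(params, pages, target_ranking, n_algorithms):
    """Value a PSO particle would minimize: params = weights followed by biases."""
    weights, biases = params[:n_algorithms], params[n_algorithms:]
    return pairwise_disagreement(target_ranking, model_scores(pages, weights, biases))


# Invented signals from two modeled algorithms for three pages
pages = {"a": [0.9, 0.2], "b": [0.4, 0.8], "c": [0.3, 0.1]}
target = ["b", "a", "c"]  # order returned by the target engine, best first

print(fitness([1.0, 0.0, 0.0, 0.0], pages, target, 2))  # 1 of 3 pairs misordered
print(fitness([0.0, 1.0, 0.0, 0.0], pages, target, 2))  # 0.0, matches the target
```

Because this objective is piecewise constant, a gradient-based optimizer would get no useful signal from it, whereas PSO only compares values, so a wrapper such as `lambda p: fitness(p, pages, target, 2)` can be handed to a PSO minimizer directly.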

Once trained and optimized, the model can be inspected like a live search engine. It accepts new changes, such as edits to a website, and produces rankings similar to those of the target search engine, which lets SEO teams test their changes quickly and predict how rankings will respond.
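As a simplified, hypothetical illustration of testing a change, the sketch below scores pages with fixed weights, edits one page's signal, and re-ranks. The domains, signal values, and weights are made up, and bias terms are omitted for brevity.

```python
def rank(pages, weights):
    """Order URLs by a weighted sum of their per-algorithm signals (highest first)."""
    scores = {url: sum(w * s for w, s in zip(weights, signals))
              for url, signals in pages.items()}
    return sorted(scores, key=scores.get, reverse=True)


weights = [0.3, 0.7]  # hypothetical tuned weights for two modeled algorithms
pages = {"competitor.com": [0.8, 0.6], "mysite.com": [0.5, 0.5]}
before = rank(pages, weights)

# Simulate a site change that raises mysite.com's second signal, then re-rank
changed = {**pages, "mysite.com": [0.5, 0.9]}
after = rank(changed, weights)

print(before.index("mysite.com") + 1, "->", after.index("mysite.com") + 1)  # 2 -> 1
```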

Overall, Market Brew's use of supervised learning and optimization techniques allows it to develop search engine models that mimic the behavior of any search engine in the world. By using ranking data from a target search engine and employing PSO to fine-tune the model, Market Brew delivers customized search engine models that meet the specific needs of its users.
