The Role Of Decision-Making Algorithms In SEO

Decision-making algorithms play a crucial role in search engine optimization (SEO).

In this article, we will explore the various ways in which decision-making algorithms are used to improve search engine rankings, optimize website content and design, and handle technical SEO issues.

We will also delve into the role of user intent, voice search, and social media in the use of decision-making algorithms for SEO purposes.

The use of decision-making algorithms in search engine optimization (SEO) has become increasingly prevalent in recent years. These algorithms are used by search engines to rank websites and determine the relevance of a particular webpage to a given search query.

In order to improve the visibility and ranking of a website, it is important to understand how these algorithms work and how they can be leveraged to optimize a website for SEO purposes.

In this article, we will explore the various ways in which decision-making algorithms are used in SEO, including their role in ranking search results, optimizing website content, and addressing technical SEO issues. We will also examine the impact of user intent, voice search, and social media on the use of decision-making algorithms in SEO.

What Are the Most Common Types of Decision-Making Algorithms Used in SEO?

Decision-making algorithms are used in search engine optimization (SEO) to help determine the ranking of a website in search engine results pages (SERPs). These algorithms take into account a variety of factors to determine the relevance and quality of a website, and they are constantly being updated and refined to improve the accuracy of search results.

There are several common types of decision-making algorithms used in SEO, including:

- PageRank: Developed by Google's founders, Larry Page and Sergey Brin, PageRank uses the number and quality of links pointing to a page to estimate its importance and relevance. The more high-quality links a page has pointing to it, the higher it is likely to rank in search results, all else being equal.

- Latent Semantic Indexing (LSI): LSI is an information-retrieval technique that analyzes a collection of documents to identify the relationships between different words and concepts. Modern search engines are not known to use LSI itself, but related forms of semantic analysis help them understand the context and meaning of content, which can improve the accuracy of search results.

- Keyword-Based Algorithms: These algorithms focus on the specific keywords and phrases used on a website, and use them to determine the relevance and quality of the content. Keywords should be used naturally and in a way that is relevant to the content of the website, as overuse or manipulation of keywords can result in penalties from search engines.

- Artificial Intelligence (AI) and Machine Learning (ML): AI and ML algorithms are becoming increasingly common in SEO, as they can analyze large amounts of data and make decisions based on patterns and trends. These algorithms can help improve the accuracy of search results by identifying relationships between different factors and using that information to rank websites.

- User Behavior Algorithms: These algorithms track the actions of users when they interact with a website, such as how long they stay on the site or whether they click on internal links. This information can be used to help determine the quality and relevance of a website.
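To make the link-based idea in the PageRank item concrete, here is a minimal sketch of the published power-iteration form of PageRank. The four-page link graph, the damping factor of 0.85, and the iteration count are illustrative assumptions; this is not a description of how any production search engine ranks pages today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict {page: [pages it links to]}."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outbound links): spread its rank over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical site structure, used only for illustration.
graph = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}
scores = pagerank(graph)
for page, value in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {value:.3f}")
```

In this toy graph, "home" receives links from every other page and ends up with the highest score, while "orphan" has no inbound links and keeps only the baseline share.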

In addition to these types of decision-making algorithms, there are also many other factors that can affect the ranking of a website in search results. These include the quality and relevance of the content, the design and usability of the website, and the presence of technical issues such as broken links or slow loading times.

To optimize a website for search engines, it is important to understand how these algorithms work and to focus on providing high-quality, relevant content that meets the needs of users. This includes using keywords effectively, keeping the website up-to-date and user-friendly, and addressing any technical issues that may impact the ranking of the site. By following these best practices, it is possible to improve the ranking of a website in search results and increase its visibility to potential customers.

How Do Decision-Making Algorithms Improve Search Engine Rankings?

Decision-making algorithms are a crucial part of search engines, as they determine the relevance and ranking of search results.

These algorithms are constantly evolving and improving, and play a key role in determining the success of a search engine.

One of the main ways that decision-making algorithms improve search engine rankings is through the use of artificial intelligence and machine learning.

These technologies allow algorithms to analyze and learn from large amounts of data, and to continually improve their ability to understand and interpret user queries. For example, a search engine might use machine learning to analyze the words and phrases that are commonly used in search queries, and to understand the relationships between different words and concepts.

This can help the search engine to better understand the intent behind a user's query, and to deliver more relevant and accurate search results.

Another important factor in decision-making algorithms is the use of relevance and quality signals. These signals help the search engine to understand the value and importance of a particular website or page, and to rank it accordingly. For example, a search engine might use factors such as the number of high-quality links pointing to a website, the relevance of the content on the website to the user's query, and the overall design and user experience of the website. By taking these factors into account, the search engine can improve the ranking of the most relevant and useful websites, and deliver a better search experience to the user.
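As a rough illustration of how several relevance and quality signals might be combined into one ranking, the sketch below scores pages with a weighted sum. The signal names, the 0 to 1 scale, and the weights are all hypothetical; real search engines do not publish their signals or weights.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    link_quality: float      # assumed scale: 0 (poor) to 1 (excellent)
    relevance: float         # how well the content matches the query
    user_experience: float   # design, speed, usability


# Hypothetical weights chosen only for this example.
WEIGHTS = {"link_quality": 0.4, "relevance": 0.4, "user_experience": 0.2}


def score(page, weights=WEIGHTS):
    """Weighted sum of the page's signals."""
    return sum(weight * getattr(page, name) for name, weight in weights.items())


def rank_pages(pages):
    """Return pages ordered from highest to lowest score."""
    return sorted(pages, key=score, reverse=True)


pages = [
    Page("example.com/a", link_quality=0.9, relevance=0.3, user_experience=0.5),
    Page("example.com/b", link_quality=0.4, relevance=0.9, user_experience=0.8),
    Page("example.com/c", link_quality=0.2, relevance=0.2, user_experience=0.9),
]
ranked = rank_pages(pages)
print([p.url for p in ranked])
```

Changing the weights changes the order, which is why understanding which signals matter most in a given results page is central to SEO work.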

In addition to using artificial intelligence and relevance signals, decision-making algorithms may also consider other factors when ranking search results. For example, a search engine might prioritize websites that are fast and mobile-friendly, as these are important considerations for many users. The search engine might also consider the location of the user, and tailor the search results to be more relevant to the user's location.

Overall, decision-making algorithms play a crucial role in determining the ranking of search results, and are constantly evolving to improve the search experience for users. By using artificial intelligence and machine learning, as well as considering relevance and quality signals, search engines are able to deliver more relevant and accurate search results to users, which helps to improve the overall effectiveness of the search engine.

Can Decision-Making Algorithms Be Used To Optimize The Content Of A Website For SEO Purposes?

Decision-making algorithms are computer programs that analyze data and choose among options or make recommendations based on that analysis. Many of them draw on artificial intelligence techniques. They are used in a variety of industries, including marketing and advertising.

In terms of optimizing the content of a website for SEO purposes, decision-making algorithms can be used in a number of ways.

For example, they can be used to analyze data about the keywords that users are searching for in search engines, and make recommendations about which keywords should be included in the website's content in order to improve its search engine ranking. They can also analyze data about the user behavior on the website, such as which pages are being visited the most and for how long, and make recommendations about which pages should be prioritized and how they should be structured in order to improve the overall user experience.
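The keyword recommendation described above can be sketched as a simple gap analysis: count the terms users search for and report the frequent ones that the page does not yet contain. The query list, page text, and stopword set are made-up inputs, and real tools use far richer data than raw term counts.

```python
import re
from collections import Counter

# Small illustrative stopword list; a real tool would use a fuller one.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def suggest_keywords(queries, page_text, top_n=3):
    """Return the most frequently searched terms that are missing from the page."""
    query_terms = Counter(
        term for query in queries for term in tokenize(query) if term not in STOPWORDS
    )
    page_terms = set(tokenize(page_text))
    missing = [term for term, _ in query_terms.most_common() if term not in page_terms]
    return missing[:top_n]


queries = [
    "best running shoes",
    "running shoes for flat feet",
    "trail running shoes review",
]
page_text = "Our shoes are built for comfort on every run."
suggestions = suggest_keywords(queries, page_text)
print(suggestions)
```

Here "running" appears in every query but not on the page, so it is the first suggestion, while "shoes" is skipped because the page already uses it.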

One key advantage of using decision-making algorithms for SEO purposes is that they can process large amounts of data much faster than a human could, and they can also identify patterns and trends that may not be immediately obvious to a human. This allows them to make more accurate and effective recommendations about how to optimize the website's content for SEO.

However, it's important to note that decision-making algorithms are not perfect, and they can only make recommendations based on the data that they are given. It's up to the website owner to decide whether or not to follow those recommendations, and to consider other factors such as the overall goals and strategy of the website.

In addition, decision-making algorithms can only analyze data that is available to them, and they cannot make recommendations about things that are outside of their scope of knowledge. For example, if the website owner is targeting a specific audience that the algorithm is not aware of, the algorithm may not be able to make recommendations about how to optimize the content for that audience.

Overall, decision-making algorithms can be a useful tool for optimizing the content of a website for SEO purposes, but they should be used in conjunction with other strategies and tactics, and the website owner should exercise caution and critical thinking when making decisions based on the recommendations of the algorithm.

How Do Decision-Making Algorithms Take Into Account User Intent When Ranking Search Results?

Decision-making algorithms are used by search engines to analyze and process user queries in order to deliver the most relevant and useful results.

In order to do this effectively, these algorithms must be able to understand and interpret the user's intent, which involves determining what the user is trying to accomplish by making the query.

This can be a complex task, as users may have various goals and motivations when making a search, and may use different language and terminology to express their intent.

To take into account user intent when ranking search results, decision-making algorithms use a variety of techniques and strategies. One of the key factors that these algorithms consider is the language used in the query. For example, if a user types in a specific question or request, the algorithm will analyze the words and phrases used in the query to determine the user's intent.

This can include identifying the main topic or subject of the query, as well as any related keywords or terms that may be relevant to the user's goal.

In addition to analyzing the language used in the query, decision-making algorithms also consider the context in which the query is made. For example, if a user is searching for a particular product or service, the algorithm may consider the location of the user and the type of device being used to make the search. This can help the algorithm determine whether the user is looking for local businesses or online retailers, and whether the user is interested in specific features or specifications of the product or service.

Another important factor that decision-making algorithms take into account when ranking search results is the user's previous search history. By analyzing the user's past queries and clicks, the algorithm can get a better understanding of the user's interests and preferences, and use this information to deliver more targeted and relevant results. For example, if a user has previously searched for information about a particular topic or product, the algorithm may prioritize results related to that topic or product in future searches.

In addition to these factors, decision-making algorithms also use various techniques to evaluate the quality and relevance of the search results themselves. This can include analyzing the content of the web pages and assessing the credibility and authority of the sources, as well as considering the user engagement and feedback that the results have received. By using these criteria, the algorithm can determine which results are most likely to be useful and relevant to the user, and prioritize these results in the search rankings.

Overall, decision-making algorithms play a crucial role in helping search engines understand and interpret user intent when ranking search results. By analyzing the language, context, and search history of the user, as well as evaluating the quality and relevance of the results themselves, these algorithms are able to deliver the most useful and relevant results to the user, helping them to achieve their goals and accomplish their tasks more efficiently.

Can Decision-Making Algorithms Be Used To Identify And Fix Technical SEO Issues On A Website?

Decision-making algorithms are a type of computer program that can analyze data and make decisions based on that analysis.

In the context of technical SEO, these algorithms could potentially be used to identify and fix issues on a website that are impacting its search engine rankings.

One way that decision-making algorithms could be used in this context is by analyzing a website's code and structure to identify any problems that may be preventing search engines from properly crawling and indexing the site. For example, if a website has a large number of broken links or redirects, a decision-making algorithm could potentially identify these issues and suggest a plan for fixing them. This could involve updating or deleting the broken links, or adjusting the redirects to ensure that they are functioning properly.
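A minimal sketch of the link audit described above, working on crawl data that has already been collected rather than making network requests. The `status_by_url`, `redirects`, and `links_by_page` structures and the five-hop limit are assumptions made for this example.

```python
def audit_links(status_by_url, redirects, links_by_page, max_hops=5):
    """Report broken links, long redirect chains, and redirect loops.

    status_by_url: {url: HTTP status code} for pages that resolved
    redirects:     {url: url it redirects to}
    links_by_page: {page: [urls linked from that page]}
    """
    issues = []
    for page, targets in sorted(links_by_page.items()):
        for target in targets:
            final, hops, seen = target, 0, set()
            # Follow redirects until they end, loop, or exceed the hop limit.
            while final in redirects and final not in seen and hops < max_hops:
                seen.add(final)
                final = redirects[final]
                hops += 1
            if final in redirects:
                issues.append((page, target, "redirect loop or chain too long"))
            elif status_by_url.get(final, 404) >= 400:
                # URLs missing from the crawl data are treated as not found.
                issues.append((page, target, "broken link"))
            elif hops > 1:
                issues.append((page, target, f"redirect chain ({hops} hops)"))
    return issues


# Hypothetical crawl results.
status_by_url = {"/": 200, "/new": 200, "/pricing": 200}
redirects = {"/old": "/mid", "/mid": "/new"}
links_by_page = {"/": ["/pricing", "/old", "/missing"]}

issues = audit_links(status_by_url, redirects, links_by_page)
for issue in issues:
    print(issue)
```

The output is a task list: point the "/old" link straight at "/new" to shorten the chain, and update or delete the link to "/missing".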

Another way that decision-making algorithms could be used to improve a website's technical SEO is by analyzing the content on the site and identifying any issues that may be impacting its search engine rankings. For example, if a website has a large number of pages with thin or duplicate content, a decision-making algorithm could suggest strategies for improving the quality and uniqueness of this content. This could involve identifying and removing duplicate content, or adding more relevant and valuable information to the pages.
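One common way to flag near-duplicate pages is to compare overlapping word sequences (shingles) with Jaccard similarity. The sketch below assumes three-word shingles and a 0.5 similarity threshold; both values, and the sample pages, are illustrative choices rather than settings used by any particular tool.

```python
def shingles(text, k=3):
    """Return the set of k-word sequences in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a, b):
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def find_near_duplicates(pages, threshold=0.5):
    """Return (url, url, similarity) for every pair at or above the threshold."""
    urls = sorted(pages)
    sets = {url: shingles(pages[url]) for url in urls}
    pairs = []
    for i, first in enumerate(urls):
        for second in urls[i + 1:]:
            similarity = jaccard(sets[first], sets[second])
            if similarity >= threshold:
                pairs.append((first, second, round(similarity, 2)))
    return pairs


# Hypothetical page texts.
pages = {
    "/a": "red widgets ship free within two days of ordering",
    "/b": "red widgets ship free within two days of purchase",
    "/c": "contact our support team for help with returns",
}
duplicates = find_near_duplicates(pages)
print(duplicates)
```

Pages "/a" and "/b" differ by a single word and are flagged as near-duplicates, while "/c" shares no shingles with either.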

Decision-making algorithms could also be used to analyze a website's performance and identify any technical issues that may be impacting its speed or accessibility. For example, if a website is taking a long time to load or is not mobile-friendly, a decision-making algorithm could suggest strategies for improving these issues. This could involve optimizing images and other large files, or implementing responsive design techniques to ensure that the site is easily accessible on a variety of devices.

Overall, decision-making algorithms have the potential to be a useful tool for identifying and fixing technical SEO issues on a website. While they may not be able to address every issue that a site may be facing, they can provide valuable insights and recommendations that can help to improve a website's search engine rankings and overall performance. It is important to note, however, that while decision-making algorithms can be a useful tool, they are not a substitute for human expertise and should be used in conjunction with other SEO strategies and best practices.

How Do Decision-Making Algorithms Handle Spammy Or Low-Quality Websites In Search Results?

Decision-making algorithms, or algorithms that are used to make decisions or recommendations based on certain data or inputs, play a crucial role in determining the quality and relevance of websites that appear in search results.

These algorithms are designed to filter out spammy or low-quality websites and prioritize more reputable and relevant websites.

One way that decision-making algorithms handle spammy or low-quality websites is through the use of spam filters. These filters are designed to identify and block websites that engage in spamming tactics, such as keyword stuffing, link schemes, and cloaking. Spam filters use various techniques, including natural language processing and machine learning, to analyze the content and structure of a website and determine whether it is engaging in spamming activities.

If a website is found to be engaging in spamming, it can be demoted in search results or removed from them entirely.
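Keyword stuffing, one of the tactics mentioned above, can be approximated with a keyword density check. This is a deliberately simple heuristic: the 20% threshold, the minimum word count, and the stopword list are assumptions for illustration, and real spam filters rely on many more signals.

```python
import re
from collections import Counter

# Small illustrative stopword list so common words are not counted as keywords.
STOPWORDS = {"a", "an", "and", "by", "for", "in", "of", "on", "over", "the", "them", "to", "we"}


def top_keyword_density(text):
    """Return (most frequent content word, its share of content words, word count)."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    if not words:
        return None, 0.0, 0
    word, count = Counter(words).most_common(1)[0]
    return word, count / len(words), len(words)


def looks_stuffed(text, threshold=0.2, min_words=8):
    """Flag text whose single most common content word exceeds the threshold."""
    _, density, total = top_keyword_density(text)
    return total >= min_words and density > threshold


stuffed = "Cheap shoes! Buy cheap shoes online. Best cheap shoes, cheap shoes deals, cheap shoes sale."
natural = "We tested twelve pairs of trail shoes over three months and ranked them by comfort."

print(looks_stuffed(stuffed), looks_stuffed(natural))
```

The first passage repeats one phrase so often that a single word makes up a third of its content words, while the second stays well under the threshold.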

Another way that decision-making algorithms handle spammy or low-quality websites is through the use of quality signals. Quality signals are metrics that are used to measure the quality and relevance of a website. These signals can include factors such as the authority of the website, the relevance of its content to the search query, the user experience on the website, and the presence of high-quality external links. Algorithms use these quality signals to rank websites in search results, with higher-quality websites appearing higher up in the results.

One way to estimate the authority of a website is through domain authority scores. These scores are published by third-party SEO tools rather than by search engines, and they are typically calculated from factors such as the number and quality of external links pointing to the website. Websites with higher scores are generally considered more trustworthy and often rank higher in search results, but the score is an estimate, not a signal that search engines are known to use directly.

The relevance of a website's content to the search query is another important factor that algorithms consider when ranking websites in search results. Algorithms use natural language processing and machine learning techniques to analyze the content of a website and determine how closely it matches the search query. Websites with more relevant content are ranked higher in search results, while those with less relevant content are ranked lower.

The user experience on a website is also an important factor that algorithms consider when ranking websites in search results. This includes factors such as the loading speed of the website, the usability of the website, and the presence of high-quality content. Websites with a better user experience are generally ranked higher in search results, while those with a poor user experience are ranked lower.

Finally, the presence of high-quality external links pointing to a website is another factor that algorithms consider when ranking websites in search results. These external links are seen as a sign of trust and authority, and websites with more high-quality external links are generally ranked higher in search results.

In summary, decision-making algorithms use a variety of techniques to filter out spammy or low-quality websites and prioritize more reputable and relevant websites in search results. These techniques include the use of spam filters, quality signals such as domain authority scores and the relevance of content, the user experience on the website, and the presence of high-quality external links. By using these techniques, algorithms are able to provide users with more relevant and trustworthy search results, helping them to find the information they need more efficiently and effectively.

Can Decision-Making Algorithms Be Used To Personalize Search Results For Individual Users?

Decision-making algorithms can be used to personalize search results for individual users by analyzing a variety of data points to understand their preferences, search history, and behavior.

These algorithms can then use this information to tailor the search results to better meet the specific needs and interests of each user.

One way that decision-making algorithms can be used to personalize search results is by analyzing the user's search history. By tracking the terms and phrases that a user has searched for in the past, the algorithm can gain a better understanding of their interests and preferences. For example, if a user frequently searches for information about a particular topic, such as sports or fashion, the algorithm can prioritize search results related to that topic when the user conducts a search.

In addition to analyzing search history, decision-making algorithms can also use other data points to personalize search results. These data points may include the user's location, their browsing history, and their social media activity. By analyzing this information, the algorithm can better understand the user's interests and tailor the search results to reflect those interests.

Another way that decision-making algorithms can be used to personalize search results is by using machine learning techniques. These techniques allow the algorithm to continually learn and adapt based on the user's behavior and preferences. For example, if a user consistently clicks on search results related to a particular topic, the algorithm can begin to prioritize results related to that topic more highly in the future.
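A minimal sketch of click-based personalization: results whose topic the user has clicked on more often get a small boost on top of their base score. The topics, scores, click history, and boost size are invented for this example, and the additive boost is only one of many possible designs.

```python
from collections import Counter


def personalize(results, click_history, boost=0.1):
    """Re-rank results, boosting topics the user has clicked on before.

    results:       list of dicts with "url", "topic", and "base_score"
    click_history: list of topics from the user's past clicks
    """
    topic_clicks = Counter(click_history)
    total_clicks = sum(topic_clicks.values()) or 1

    def adjusted_score(result):
        # Share of past clicks on this topic, between 0 and 1.
        share = topic_clicks[result["topic"]] / total_clicks
        return result["base_score"] + boost * share

    return sorted(results, key=adjusted_score, reverse=True)


# Hypothetical results for the ambiguous query "jaguar".
results = [
    {"url": "example.com/jaguar-cars", "topic": "autos", "base_score": 0.80},
    {"url": "example.com/jaguar-animal", "topic": "wildlife", "base_score": 0.78},
]
click_history = ["wildlife", "wildlife", "autos", "wildlife"]

personalized = personalize(results, click_history)
print([r["url"] for r in personalized])
```

Without any history the car page ranks first. For a user who mostly clicks wildlife results, the animal page moves to the top.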

However, there are some limitations to using decision-making algorithms to personalize search results. One limitation is that the algorithm may not always accurately reflect the user's interests and preferences. For example, if a user conducts a search for a term that they are unfamiliar with, the algorithm may not be able to accurately predict what type of results the user is looking for.

Another limitation is that decision-making algorithms can be biased. This can occur if the algorithm is trained on data that is not representative of the overall population. For example, if the algorithm is trained on data from a group of users who are predominantly interested in a particular topic, it may not accurately reflect the interests and preferences of users who are not interested in that topic.

Despite these limitations, decision-making algorithms can be a useful tool for personalizing search results for individual users. By analyzing a variety of data points and using machine learning techniques, these algorithms can provide users with search results that are tailored to their specific needs and interests. This can help users find the information they are looking for more quickly and easily, improving the overall search experience.

How Do Decision-Making Algorithms Handle The Increasing Use Of Voice Search And Natural Language Processing In SEO?

Decision-making algorithms are designed to analyze and interpret data in order to make decisions or provide recommendations. In the context of search engine optimization (SEO), these algorithms are used to determine the relevance and quality of a website or webpage in relation to a specific search query.

With the increasing use of voice search and natural language processing (NLP) in SEO, these algorithms must be able to effectively handle and interpret spoken queries and understand the context and intent behind them.

One of the key challenges in handling voice search and NLP in SEO is the potential for variations in language and phrasing. While traditional search queries are typically short and specific, voice search queries may be more conversational and use more natural language. For example, a user may ask "Where can I find a good Italian restaurant near me?" rather than typing "Italian restaurant near me." Decision-making algorithms must be able to interpret these variations in language and understand the intent behind the query in order to provide relevant results.
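The gap between a spoken and a typed query can be illustrated by stripping conversational filler words from the spoken form. The filler list below is a small hand-picked assumption; production systems use trained language models rather than fixed word lists.

```python
import re

# Hypothetical filler words that add little meaning to a search query.
FILLER_WORDS = {"where", "can", "i", "find", "a", "an", "the", "is", "are", "please", "some", "to"}


def normalize_voice_query(query):
    """Lowercase the query and drop conversational filler words."""
    tokens = re.findall(r"[a-z0-9']+", query.lower())
    return " ".join(token for token in tokens if token not in FILLER_WORDS)


spoken = "Where can I find a good Italian restaurant near me?"
normalized = normalize_voice_query(spoken)
print(normalized)
```

The spoken question reduces to something close to the typed query, while keeping "near me", which carries the local intent.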

To effectively handle voice search and NLP in SEO, decision-making algorithms must be able to understand the context and meaning behind a query. This involves not only analyzing the words used in the query, but also considering factors such as the location and preferences of the user. For example, if a user asks for a "good Italian restaurant near me," the algorithm must be able to understand that the user is looking for an Italian restaurant in their current location and consider their preferences for quality in order to provide relevant results.

In addition to understanding the context and meaning behind a query, decision-making algorithms must also be able to interpret and understand the intent behind the query. This involves determining the goal or objective of the user, such as whether they are looking for information, directions, or to make a purchase. By understanding the intent behind a query, decision-making algorithms can provide more relevant and accurate results.
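The three goals named above (information, directions, or a purchase) can be sketched as a rule-based intent classifier that counts cue words. The cue lists are illustrative assumptions; real systems learn intent from large volumes of query and click data.

```python
import re

# Hypothetical cue words for each intent; the lists are far from complete.
INTENT_CUES = {
    "purchase": {"buy", "order", "price", "cheap", "deal"},
    "directions": {"directions", "near", "nearest", "where"},
    "information": {"how", "what", "why", "who", "when"},
}


def classify_intent(query):
    """Return the intent with the most cue-word matches, or "unknown"."""
    tokens = re.findall(r"[a-z]+", query.lower())
    scores = {
        intent: sum(token in cues for token in tokens)
        for intent, cues in INTENT_CUES.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


for query in [
    "Where can I find a good Italian restaurant near me?",
    "How do I descale a kettle?",
    "Buy running shoes",
]:
    print(query, "->", classify_intent(query))
```

A query with no cue words, such as a bare brand name, returns "unknown", which is a reminder that word matching alone cannot recover intent in every case.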

To handle the increasing use of voice search and NLP in SEO, decision-making algorithms must also be able to effectively analyze and interpret spoken queries. This involves using speech recognition technology to convert spoken words into text, and then analyzing the text using NLP techniques to understand the meaning and intent behind the query.

One approach to handling voice search and NLP in SEO is to use machine learning algorithms, which can be trained to recognize patterns and make decisions based on data inputs. By continuously learning and adapting, these algorithms can improve their ability to understand and interpret spoken queries and provide more relevant results over time.

Another approach is to use a combination of traditional decision-making algorithms and NLP techniques, such as natural language generation and natural language understanding. These techniques allow decision-making algorithms to analyze and understand the meaning and intent behind spoken queries, and to generate responses or recommendations in natural language.

Overall, the increasing use of voice search and NLP in SEO presents a number of challenges and opportunities for decision-making algorithms. By effectively handling and interpreting spoken queries and understanding the context and intent behind them, these algorithms can provide more relevant and accurate results for users and improve the overall user experience.

Can Decision-Making Algorithms Be Used To Optimize The Design And User Experience Of A Website For SEO Purposes?

Decision-making algorithms can be used to optimize the design and user experience of a website for SEO purposes by analyzing user data and making informed decisions based on that data.

These algorithms can be used to determine the best layout, content, and design elements for a website to improve its search engine ranking and attract more users.

One way decision-making algorithms can be used to optimize a website for SEO is by analyzing user behavior and determining which pages and content are most popular. Based on this data, the algorithm can suggest changes to the website layout and navigation to make it easier for users to find the content they are looking for. For example, if a website has a high bounce rate on certain pages, the algorithm may suggest adding internal links to those pages to keep users on the site longer and improve the website's overall user experience.
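The bounce-rate analysis described above can be sketched from session data: a session that views only its landing page counts as a bounce. The session lists and the 60% threshold are assumptions for illustration, and analytics tools may define a bounce differently.

```python
from collections import Counter


def bounce_rates(sessions):
    """Return {landing page: share of sessions that viewed only that page}."""
    entries, bounces = Counter(), Counter()
    for pages in sessions:
        if not pages:
            continue
        landing = pages[0]
        entries[landing] += 1
        if len(pages) == 1:
            bounces[landing] += 1
    return {page: bounces[page] / entries[page] for page in entries}


def pages_needing_links(sessions, threshold=0.6):
    """Landing pages whose bounce rate exceeds the threshold."""
    rates = bounce_rates(sessions)
    return sorted(page for page, rate in rates.items() if rate > threshold)


# Hypothetical sessions: each list is the ordered pages one visitor viewed.
sessions = [
    ["/blog/a"],
    ["/blog/a"],
    ["/blog/a", "/pricing"],
    ["/", "/pricing"],
    ["/"],
]
rates = bounce_rates(sessions)
flagged = pages_needing_links(sessions)
print(rates, flagged)
```

Here "/blog/a" loses two of every three visitors after one page view, making it a candidate for additional internal links.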

Decision-making algorithms can also be used to optimize a website's content for SEO purposes by analyzing the keywords and phrases used by users in search queries. Based on this data, the algorithm can suggest changes to the website's content to include these keywords and phrases in a natural and effective way. This can help improve the website's search engine ranking and attract more qualified traffic.

In addition to analyzing user data, decision-making algorithms can also be used to optimize a website's design for SEO purposes. For example, the algorithm can analyze the website's loading speed and suggest changes to the website's code or design elements to improve the speed and overall user experience. This can be especially important for mobile users, as slow loading times can lead to high bounce rates and a poor user experience.

Overall, decision-making algorithms can be a powerful tool for optimizing a website for SEO purposes. By analyzing user data and making informed decisions based on that data, these algorithms can help improve the website's search engine ranking, attract more qualified traffic, and improve the overall user experience. However, it is important to note that decision-making algorithms should be used in conjunction with other SEO strategies, such as keyword research and link building, in order to achieve the best results.

How Do Decision-Making Algorithms Factor In The Role Of Social Media And Backlinks In SEO?

Decision-making algorithms play a significant role in determining the relevance and ranking of websites in search engine results pages (SERPs).

These algorithms consider various factors, including social media and backlinks, to determine the overall quality and credibility of a website.

Social media is a powerful tool that can greatly influence the visibility and reputation of a website. How search engines treat it varies: Google has said that it does not use social signals such as likes, shares, and follower counts as direct ranking factors, while other search engines have indicated that they take social signals into account. Either way, social activity can indicate the popularity of a website with users, and content that is widely shared tends to be discovered and linked to more often.

One way decision-making algorithms can factor in the role of social media in SEO is by analyzing the social media profiles associated with a website. This includes the number of followers, the frequency of posts, and the engagement level of the posts. Websites with active and well-maintained social media profiles tend to attract more visitors and links, which can in turn support higher rankings.

Social media also matters indirectly, through the backlinks that shared content can earn. Backlinks, also known as inbound links, are links from other websites that lead to a specific webpage. Search engines view backlinks as a sign of trust and authority. The more backlinks a website has, the more credible it appears to search engines.

However, not all backlinks are created equal. Decision-making algorithms take into account the quality and relevance of backlinks. Links from high-quality and relevant websites carry more weight than links from low-quality or unrelated websites. For example, a backlink from a reputable news source would be more valuable than a backlink from a spammy website.

In addition to the quality and relevance of backlinks, decision-making algorithms also consider the anchor text of the links. Anchor text is the visible, clickable text in a hyperlink. Anchor text can help search engines understand the context and relevance of the linked webpage.

Therefore, using relevant and descriptive anchor text in backlinks can improve a website's ranking in search results.

Decision-making algorithms also consider the number of backlinks a website has. While having a large number of backlinks can improve a website's ranking, quality trumps quantity. A website with a few high-quality backlinks can outrank a website with a large number of low-quality backlinks.
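The point that quality trumps quantity can be shown with a toy backlink score in which each link contributes the product of its source's authority and its topical relevance. Both fields, their 0 to 1 scale, and the scoring formula are hypothetical simplifications.

```python
def backlink_score(backlinks):
    """Sum of authority x relevance over all backlinks (both assumed to be 0..1)."""
    return sum(link["authority"] * link["relevance"] for link in backlinks)


# Site A: two links from reputable, closely related sources.
site_a = [{"authority": 0.9, "relevance": 0.9} for _ in range(2)]
# Site B: twenty links from weak, mostly unrelated sources.
site_b = [{"authority": 0.1, "relevance": 0.2} for _ in range(20)]

score_a = backlink_score(site_a)
score_b = backlink_score(site_b)
print(f"site A: {score_a:.2f}, site B: {score_b:.2f}")
```

Under these assumptions, two strong links outweigh twenty weak ones by a wide margin.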

In conclusion, decision-making algorithms factor in the role of social media and backlinks in SEO to determine the relevance and quality of a website. High-quality backlinks from reputable and relevant websites can significantly improve a website's ranking in search results, and social media activity can help a website earn that visibility and those links. It is important for websites to maintain an active social media presence and to focus on acquiring high-quality backlinks to improve their SEO.

Modeling Decision-Making Algorithms In Market Brew

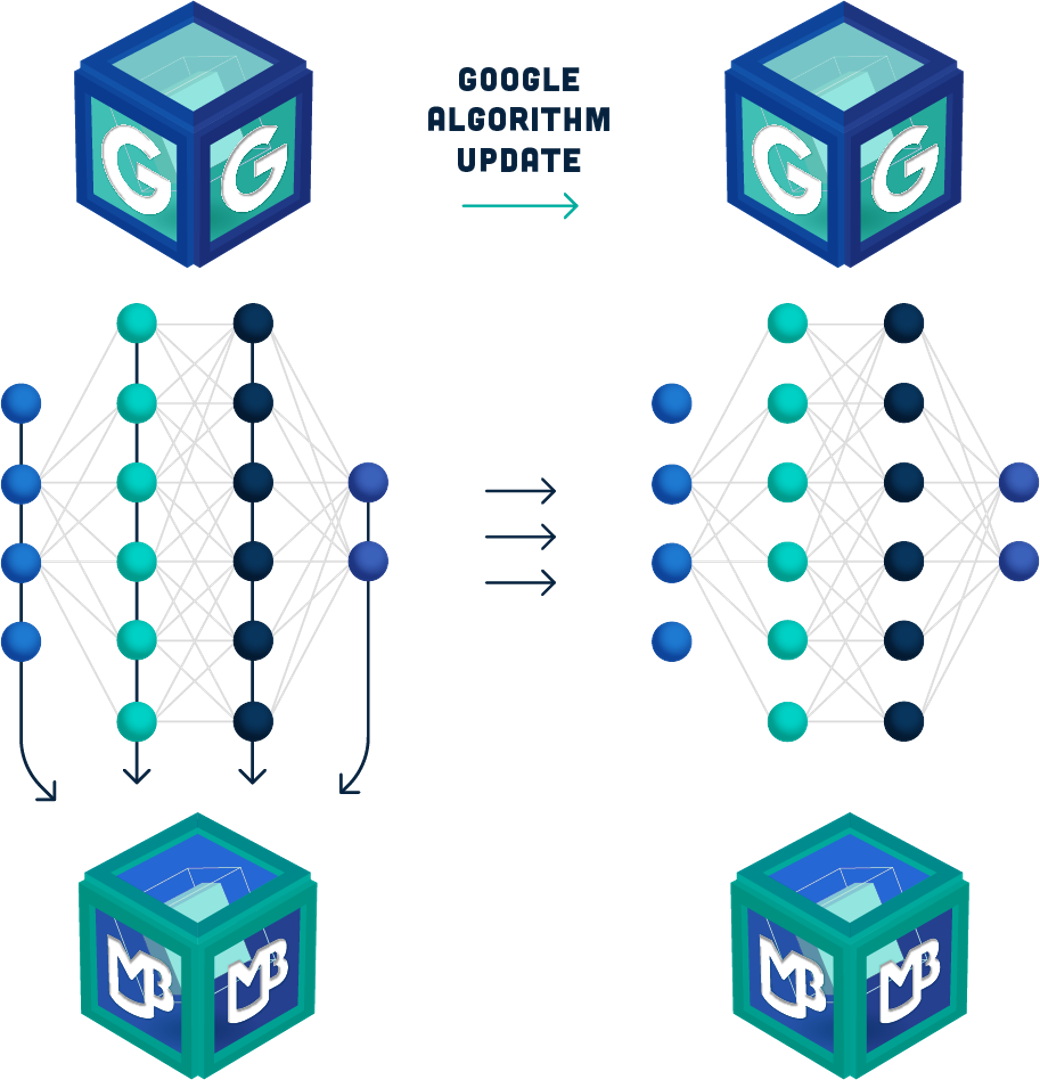

Market Brew is an SEO software company that specializes in developing search engine models for businesses and organizations. These models are designed to mimic the algorithms that are used by real search engines, such as Google, in order to provide accurate and relevant search results.

One of the key technologies that Market Brew uses in its search engine models is decision-making algorithms. These algorithms are used to analyze and interpret data in order to make informed decisions about how to take a huge list of SEO tasks and prioritize them based on the competitive landscape in each search engine results page (SERP).

One of the main decision-making algorithms that Market Brew uses is Particle Swarm Optimization (PSO). This algorithm is based on the idea of a group of particles moving through a solution space in search of the optimal solution. In the context of search engine modeling, the particles represent different combinations of bias and weight settings for the modeled algorithms. By adjusting these settings, Market Brew is able to emulate the behavior of the target search engine and provide accurate search results.

PSO is a highly effective decision-making algorithm for a number of reasons.

First, it is able to quickly search through a large solution space to find bias and weight settings that closely fit each of the algorithms being modeled. Because PSO is a heuristic, the settings it finds are good approximations rather than guaranteed optima. This is important for search engine models, which need to be able to process and analyze large amounts of data in order to provide an accurate picture of the target search engine.

Second, PSO is able to adapt to changing conditions, which means that it can continue to provide an accurate search engine model even if the target search engine changes its algorithms or introduces new data sources.
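To show the mechanics of Particle Swarm Optimization in this setting, the sketch below uses a generic textbook PSO to recover the weights of a simple weighted-sum scoring model from observed scores. It is not Market Brew's implementation: the page features, the "observed" scores, the swarm size, and the inertia and acceleration coefficients are all assumptions chosen for illustration.

```python
import random


def pso(objective, dim, n_particles=30, iterations=200,
        inertia=0.7, cognitive=1.5, social=1.5, seed=0):
    """Minimize objective(position) with a basic global-best particle swarm."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]               # each particle's best position
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]   # swarm-wide best so far

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Pull toward the particle's own best and the swarm's best.
                vel[i][d] = (inertia * vel[i][d]
                             + cognitive * r1 * (best_pos[i][d] - pos[i][d])
                             + social * r2 * (g_pos[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


# Hypothetical per-page feature values (e.g. links, content, speed).
features = [
    [0.9, 0.2, 0.4],
    [0.3, 0.8, 0.5],
    [0.5, 0.5, 0.9],
    [0.1, 0.3, 0.2],
    [0.7, 0.6, 0.1],
]
# "Observed" scores generated from hidden weights the swarm should rediscover.
hidden_weights = [0.5, 0.3, 0.2]
observed = [sum(w * f for w, f in zip(hidden_weights, row)) for row in features]


def squared_error(weights):
    """Total squared gap between modeled and observed scores."""
    modeled = [sum(w * f for w, f in zip(weights, row)) for row in features]
    return sum((m - o) ** 2 for m, o in zip(modeled, observed))


best_weights, best_error = pso(squared_error, dim=3)
print([round(w, 3) for w in best_weights], best_error)
```

Each particle is one candidate set of weights. The swarm keeps the best candidate found so far, so the reported error can only stay the same or improve as iterations proceed.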

Another key aspect of Market Brew's search engine models is their ability to learn and adapt over time. This is made possible by the use of machine learning algorithms, which allow the models to analyze data and make decisions based on that analysis.

By continuously learning and adapting, the search engine models are able to provide increasingly accurate search results over time. This is important for businesses and organizations that rely on search engines to connect with customers and clients, as it ensures that they are able to provide relevant and useful information to their users.

In addition to the decision-making algorithms that are used by Market Brew's search engine models, a number of other technologies are employed to model content-based algorithms accurately. These include natural language processing, which allows the models to understand and interpret queries written in natural language, and artificial intelligence, which enables the models to make complex decisions and judgments based on data analysis.

By leveraging these technologies, Market Brew is able to provide highly accurate search engine models for its clients.

In conclusion, decision-making algorithms are a key component of Market Brew's search engine models.

By using algorithms such as PSO, Market Brew is able to adjust the bias and weight settings of its models to emulate the target search engine and provide accurate search results.

In addition, the use of machine learning and artificial intelligence enables the models to adapt and improve over time, ensuring that they are able to provide relevant and useful information to their users.

Overall, the use of decision-making algorithms is essential for the success of Market Brew's search engine models and the satisfaction of its clients.
