Optimizing Crawl Budget For Large Websites

Crawl budget is an important aspect of technical SEO for large websites: it determines how many of a site's pages search engines crawl and how often, and a page has to be crawled before it can be indexed.

In this article, we will explore the concept of crawl budget, the factors that affect it, and the best practices for optimizing it for improved search engine results. We will also discuss how to monitor and track crawl budget, fix broken links and 404 errors, and use robots.txt and sitemaps to improve crawl budget.

Additionally, we will cover common mistakes that can harm crawl budget and tips for increasing it by enhancing content and structure.

Search engine optimization (SEO) is a complex and ever-changing field that requires constant attention and adjustments to stay on top of the latest trends and best practices. For large websites, one of its most important technical aspects is crawl budget. Google describes a site's crawl budget as the set of URLs that Googlebot can and wants to crawl, shaped by two elements: the crawl capacity limit (how much crawling the site's servers can handle without slowing down) and crawl demand (how much Google wants to crawl the site, based on the popularity and staleness of its URLs).

Crawl budget is not a ranking factor in itself, but it plays a crucial role in how quickly new pages appear in search engine results pages (SERPs) and how often existing pages are refreshed there. Google notes that it is mainly a concern for very large sites, or for sites with many pages whose content changes frequently.

What Is Crawl Budget And How Does It Affect Website SEO?

Crawl budget is a term that refers to the amount of time and resources that search engines devote to crawling a website's pages.

It matters for SEO because a page has to be crawled before it can be indexed and shown in search engine results pages (SERPs), and because recrawling is how search engines learn that a page has changed.

When a search engine crawls a website, it requests URLs, follows the links it finds on those pages, and passes the fetched content on for indexing. The amount of resources that a search engine devotes to crawling a website is referred to as its crawl budget. Crawl budget depends on how much crawling the website's servers can handle and on how much the search engine wants to crawl, which is influenced by several factors, including the number of pages on a website, the number of links pointing to a website, and the rate at which a website is updated.

The more pages a website has, the more resources a search engine will need to crawl it. Additionally, the more links pointing to a website, the more likely it is that a search engine will crawl it. Websites that are updated frequently are also more likely to be crawled frequently.

Crawl budget is important because it limits how often search engines crawl and recrawl a website's pages. When crawl budget is ample relative to the size of a site, new and updated pages tend to be picked up quickly. When it is tight, some pages may be crawled rarely or not at all, so they can be missing from SERPs or appear there with outdated content. A higher crawl rate does not by itself lead to higher rankings.

One way to make better use of crawl budget is by fixing broken links and 404 errors. Every request a search engine spends on a page that does not exist is a request it did not spend on a page that matters. By fixing these issues, a website reduces wasted crawling, so more of its crawl budget goes to pages that can actually be indexed.

Another way to optimize crawl budget is by using robots.txt and sitemaps. Robots.txt is a file that tells search engines which URLs on a website they may crawl and which they should not. A sitemap is a file, usually in XML format, that lists the URLs a website wants search engines to crawl, optionally with the date each page was last modified. By using these tools, a website can guide search engines to its most important pages and away from low-value URLs, so that its crawl budget is spent where it counts.

It's also important to measure how search engines actually crawl a website. The Crawl Stats report in Google Search Console shows how many requests Googlebot makes per day, the response codes and response times it encounters, and whether it has had trouble reaching the site, while server log files record every crawler request individually. Google Analytics, by contrast, measures visits by people rather than crawler activity, so it is useful for judging organic search traffic but not for measuring crawl budget.

In conclusion, crawl budget is an important aspect of website SEO because it governs how quickly a website's pages are discovered and how often they are refreshed in SERPs. To optimize crawl budget, website owners should fix broken links and 404 errors, use robots.txt and sitemaps, and use webmaster tools such as Google Search Console to monitor crawling. By understanding and managing crawl budget, website owners can make sure their most important pages are crawled and indexed promptly, which gives those pages the best chance to rank.

How Can I Optimize My Website's Crawl Budget For Improved Search Engine Results?

Optimizing a website's crawl budget is an important step in improving search engine results. Crawl budget refers to the amount of resources, such as time and bandwidth, that search engines allocate to crawling a website.

By optimizing a website's crawl budget, a business can ensure that search engines spend their visits on the pages that matter most, so that new and updated content is indexed quickly and can start earning visibility in search results sooner.

One of the first steps in optimizing a website's crawl budget is to ensure that the website is easily accessible to search engines. This means keeping the server fast and reliable, and creating an up-to-date sitemap of the site's important pages and submitting it through tools such as Google Search Console. Additionally, it is important to ensure that the website is free from broken links, which send search engines to dead ends and waste crawl requests.

Another important step in optimizing a website's crawl budget is to ensure that the website is structured in a way that is easy for search engines to understand. A logical page hierarchy with clear navigation and internal links helps search engines discover every important page, while clear, concise headings and accurate meta tags, such as the title and meta description, tell them what each page is about. Using relevant keywords naturally throughout the content also helps search engines understand it, although keywords have little direct effect on how much of the site gets crawled.

In addition to optimizing the website's structure, it is also important to ensure that the website's content is high-quality and relevant. This can be done by creating unique and high-quality content that is relevant to the target audience. Additionally, it is important to update the website's content regularly, as this will help search engines to understand that the website is still active and relevant.

Another important step in optimizing a website's crawl budget is to ensure that the website is properly optimized for mobile devices. Google uses mobile-first indexing, which means it crawls and indexes pages primarily with its smartphone crawler, so the mobile version of a page is the one that counts. Responsive design, which serves the same HTML at the same URL to every device and adapts the layout with CSS, is a simple way to ensure that the website works well on mobile devices.

Finally, it is important to monitor the website's crawl budget and make adjustments as needed. This can be done by using tools such as Google Search Console, whose Crawl Stats report shows how often Googlebot requests pages from the website and what responses it receives. If important pages are crawled less often than desired, or if many requests end in errors, redirects, or low-value URLs, it is important to adjust the website's structure and content to direct crawling toward the pages that matter.

In conclusion, optimizing a website's crawl budget is an important step in improving search engine results. By ensuring that the website is easily accessible, structured in a way that is easy for search engines to understand, and filled with high-quality, relevant content, businesses can ensure that search engines crawl and index their most important pages efficiently. Additionally, it is important to ensure that the website is properly optimized for mobile devices and to monitor the website's crawl budget and make adjustments as needed. By following these steps, businesses can improve their search engine rankings and increase their visibility on the internet.

What Are The Factors That Affect A Website's Crawl Budget?

A website's crawl budget refers to the amount of resources (such as time and server capacity) that search engines allocate to crawling a particular site.

There are several factors that can affect a website's crawl budget, including the size and structure of the site, the number of pages and links, and the use of crawl directives and blocking methods:

- Size and Structure of the Site: The larger a website is, the more resources it will require to crawl and index. This is because larger sites typically have more pages and links, which search engines need to traverse in order to fully understand the site's content and organization. Additionally, sites with complex or poorly-structured architectures may also require more resources to crawl and index, as search engines may need to spend more time trying to understand the organization of the site.

- Number of Pages and Links: The more URLs a website exposes, the more requests a search engine needs to crawl it, because it has to follow each link to discover new pages and understand the site's overall structure. Features such as faceted navigation, session IDs in URLs, and other URL parameters can multiply the number of crawlable URLs without adding any new content, which spreads crawl budget thinly across near-identical pages.

- Crawl Directives and Blocking Methods: Websites can use various methods to control how search engines crawl and index their content. For example, using the robots.txt file, website owners can block search engines from crawling certain sections of their site. Using the "noindex" meta tag, website owners can keep certain pages out of the index. Only the robots.txt approach conserves crawl budget, because a search engine has to crawl a page in order to see its noindex tag. Both methods also limit the visibility of the affected content in search results, so they should be reserved for pages that don't need to appear there.

- Site Speed: When a website responds quickly, search engines can make more requests without overloading its server, so they tend to crawl it more. When responses slow down, search engines reduce their crawl rate to avoid putting extra strain on the server, and fewer pages get crawled.

- Crawl Errors: Crawl errors on a website can also affect the crawl budget. Server errors (5xx responses) and timeouts suggest that the server is struggling, so search engines slow down their crawling, while requests that end in 404 errors or long redirect chains use up crawl budget without yielding any indexable page.

- Link Popularity: Websites with more external links pointing to them tend to be crawled more frequently than sites with fewer external links. Search engines treat popular URLs as more valuable to users and recrawl them more often to keep them fresh in their index.

In conclusion, a website's crawl budget is affected by a variety of factors, including the size and structure of the site, the number of pages and links, the use of crawl directives and blocking methods, site speed, crawl errors and link popularity. By understanding how these factors can impact a site's crawl budget, website owners can take steps to ensure that their site is crawled and indexed as efficiently as possible, while also maximizing its visibility in search results.

How Can I Monitor And Track My Website's Crawl Budget?

Monitoring and tracking your website's crawl budget is essential for ensuring that search engines are able to effectively index and rank your site. The crawl budget refers to the amount of resources that a search engine is willing to allocate to crawling and indexing your site.

It is important to monitor and track your crawl budget because it can impact your website's visibility in search engine results pages (SERPs) and ultimately your overall traffic and revenue.

One way to monitor and track your crawl budget is to use Google Search Console. This is a free tool provided by Google that allows you to see how often your site is being crawled, the pages that are being crawled, and any errors that may be preventing your site from being indexed properly. You can also use this tool to submit sitemaps and request that specific pages be indexed.
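Server access logs complement Search Console by recording every individual request, so they can be summarized directly. The Python sketch below assumes the common Apache/Nginx "combined" log format and identifies Googlebot by its user-agent string alone; because that string can be faked, Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup. The function name `summarize_googlebot_hits` is hypothetical.

```python
import re
from collections import Counter
from datetime import datetime

# Assumes the Apache/Nginx "combined" access log format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot_hits(lines):
    """Count requests whose user-agent claims to be Googlebot, per day and per status."""
    per_day = Counter()
    per_status = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None or "Googlebot" not in match.group("agent"):
            continue  # skip malformed lines and non-Googlebot traffic
        # Combined-format timestamps look like 10/Oct/2023:13:55:36 +0000
        when = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        per_day[when.date().isoformat()] += 1
        per_status[match.group("status")] += 1
    return per_day, per_status
```

With a real log, pass the open file: `with open("access.log") as log: per_day, per_status = summarize_googlebot_hits(log)`. A large share of non-200 statuses suggests that crawl budget is going to errors or redirects.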

Another way to monitor your site is to use website analytics tools such as Google Analytics or Adobe Analytics. These tools record visits by people rather than crawler requests, so they cannot show crawl activity directly, but they do show which pages receive organic search traffic and how often. Important pages that receive no search traffic at all are worth checking in Search Console to see whether they have been crawled and indexed.

Additionally, you can use SEO crawlers such as Screaming Frog or Botify to monitor your crawl budget. These tools crawl your site the way a search engine would and report on the number of crawlable pages, how long each page takes to respond, and any errors that could keep pages out of the index. Log file analyzers, offered by vendors including Screaming Frog and Botify, go a step further by showing how search engine bots actually crawl your site.

To optimize your crawl budget, it is important to ensure that your site is well-organized and easy for search engines to crawl. This includes having a clear and easy-to-use navigation structure, using clean and consistent URLs, and ensuring that your site is mobile-friendly.

Another important factor to consider is the links on your site. Every link a crawler can follow is a URL it may request, so links to duplicate, parameterized, or broken URLs consume crawl budget without adding value. It is important to regularly review your site's links and remove or update any that are no longer relevant or that point to broken pages.

In addition, you should also ensure that your site is free from technical issues such as broken links, 404 errors, and unnecessary redirects or redirect chains. These issues waste crawl requests and can keep pages from being crawled and indexed properly, which can impact your crawl budget and ultimately your visibility in SERPs.

Finally, it is important to keep in mind that your crawl budget is not a fixed number and can change depending on various factors such as how quickly your server responds, the number of pages on your site, and the number of links pointing to your site. It is important to regularly monitor and track your crawl budget in order to make any necessary adjustments and ensure that your site is being crawled and indexed properly by search engines.

In conclusion, monitoring and tracking your website's crawl budget is an essential part of ensuring that your site is properly indexed and ranked by search engines. There are several tools and techniques that can be used to monitor and track your crawl budget, including Google Search Console, website analytics tools, and crawl budget optimization tools. Additionally, it is important to ensure that your site is well-organized, easy to crawl, and free from technical errors in order to optimize your crawl budget. By regularly monitoring and tracking your crawl budget, you can improve your site's visibility in SERPs and ultimately increase your traffic and revenue.

How Can I Improve My Website's Crawl Budget By Fixing Broken Links And 404 Errors?

One of the most important aspects of maintaining a website is ensuring that it is easily accessible and navigable for both users and search engines.

One way to make better use of a website's crawl budget, which is the amount of resources that search engines allocate to crawling a website, is by fixing broken links and 404 errors.

Broken links are links on a website that lead to pages that no longer exist or cannot be accessed. These links can be frustrating for users, as they often lead to a "404 Not Found" error page. Additionally, broken links can harm a website's search engine optimization (SEO) by making it harder for search engines to crawl and index the site.

404 errors occur when a user requests a page that cannot be found on a website. This can happen for a variety of reasons, including broken links, mistyped URLs, or pages that have been deleted or moved. Like broken links, 404 errors can be frustrating for users and can harm a website's SEO.
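To make the difference between these status codes concrete, here is a minimal Python WSGI sketch that answers a moved page with a 301 redirect and a missing page with a helpful custom error page that still carries a real 404 status. The paths in `REDIRECTS` and `PAGES` are hypothetical placeholders for a site's own URL data.

```python
# Hypothetical URL data: old path -> new path, and the pages that exist.
REDIRECTS = {"/old-pricing": "/pricing"}
PAGES = {"/": "<h1>Home</h1>", "/pricing": "<h1>Pricing</h1>"}

NOT_FOUND_PAGE = (
    "<h1>Page not found</h1>"
    '<p>Try the <a href="/">home page</a> or our <a href="/pricing">pricing</a>.</p>'
)
HTML_TYPE = "text/html; charset=utf-8"


def app(environ, start_response):
    """Minimal WSGI app: 301 for moved pages, 200 for live pages, real 404 otherwise."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        # Permanent move: crawlers should replace the old URL with the new one.
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path in PAGES:
        start_response("200 OK", [("Content-Type", HTML_TYPE)])
        return [PAGES[path].encode("utf-8")]
    # Friendly custom page, but still sent with a 404 status so it is not a "soft 404".
    start_response("404 Not Found", [("Content-Type", HTML_TYPE)])
    return [NOT_FOUND_PAGE.encode("utf-8")]
```

To try it locally, pass `app` to `wsgiref.simple_server.make_server` and request an old or unknown path. In a real deployment the same rules usually live in the web server or framework configuration.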

To improve a website's crawl budget by fixing broken links and 404 errors, there are a few steps that can be taken:

- Identify and fix broken links: One of the first steps in fixing broken links is to identify them. This can be done manually by checking the website's links one by one, or by using a tool such as Ahrefs or SEMrush. Once broken links have been identified, they can be fixed by updating them to point to the correct URL, by redirecting the missing page to a relevant page, or by removing them altogether.

- Implement a 301 redirect: A 301 redirect is a permanent redirect that tells search engines that a page has moved to a new location. This is useful for fixing broken links and 404 errors, as it ensures that users and search engines are directed to the correct page.

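- Use a custom 404 error page: A custom 404 error page can be used to explain to users that a page cannot be found and to suggest alternative pages on the website. This improves the user experience, but it does not reduce the number of 404 errors, and the page must still be served with a 404 (or 410) HTTP status code. An error page returned with a 200 status is treated as a "soft 404", which search engines may keep recrawling and which wastes crawl budget.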

- Monitor for broken links and 404 errors: It is important to regularly monitor for broken links and 404 errors on a website. This can be done manually or by using a tool such as Google Search Console. Monitoring for broken links and 404 errors can help to identify and fix issues quickly, which can improve a website's crawl budget.

- Use a sitemap: A sitemap is a file that lists the pages on a website that should be crawled and can be submitted to search engines to help them discover those pages and understand the structure of a website. A sitemap does not fix broken links on its own, but keeping it limited to live, canonical URLs that return a 200 status ensures that it never sends crawlers to missing pages.
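Part of the broken-link checking described above can be automated. This Python sketch extracts the links from one page's HTML with the standard library's `HTMLParser`, resolves them against the page URL, and reports any that answer with a 4xx or 5xx status. The helper names are hypothetical, and the default `http_status` function assumes the server accepts HEAD requests; some servers do not, in which case a GET request is the fallback.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def http_status(url, timeout=10):
    """Return the final HTTP status for url (urlopen follows redirects itself)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def find_broken_links(page_url, html, get_status=http_status):
    """Return (absolute_url, status) for each http(s) link answering 4xx or 5xx."""
    extractor = LinkExtractor()
    extractor.feed(html)
    broken = []
    for href in extractor.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar links
        status = get_status(absolute)
        if status >= 400:
            broken.append((absolute, status))
    return broken
```

For a whole site, run `find_broken_links` on every crawled page and cache the statuses so each URL is requested only once.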

By taking the steps outlined above, it is possible to improve a website's crawl budget by fixing broken links and 404 errors. This can help to improve the user experience, increase the number of pages that are indexed by search engines, and ultimately, boost a website's search engine rankings. It is important to regularly monitor and maintain a website to ensure that it remains easily accessible and navigable for both users and search engines.

What Are The Best Practices For Managing Crawl Budget On A Large Website?

Managing crawl budget on a large website can be a daunting task, but with the right strategy and best practices in place, it can be done effectively. A crawl budget refers to the number of pages that a search engine will crawl on a website in a given period.

It is determined by the search engine's own systems, based on how much crawling the website's servers can handle and on how much demand there is to crawl its pages, which depends on factors such as the website's size, structure, popularity, and how often its content changes.

One of the best practices for managing crawl budget on a large website is to ensure that the website's structure is clear and easy to navigate. This means that all of the pages on the website should be organized in a logical and hierarchical manner, making it easy for search engines to crawl and index the content. Additionally, the website should have a sitemap and a robots.txt file that clearly define the pages that should and should not be crawled.

Another important best practice is to keep the website's content fresh and up-to-date. Search engines tend to crawl and index pages that have recently been updated more frequently than those that have not. Therefore, regularly updating the website's content can help to ensure that it is being crawled and indexed more frequently. Additionally, it is important to consolidate duplicate content, for example with redirects or canonical tags, and to remove or improve low-quality pages that do not add value to the website. This will help to ensure that the crawl budget is being used effectively, and that the search engines are focusing on the most important and relevant pages on the website.

Additionally, it is essential to monitor the website's crawl stats to understand how the search engines are crawling the website. Google Search Console is a great tool that can be used to track the number of pages that are being crawled and indexed, as well as any crawl errors that may be occurring. By monitoring this data, it is possible to identify any issues that may be impacting the crawl budget and take steps to address them.

Another important best practice is to make sure that the website is mobile-friendly and responsive. With the increasing number of people accessing the internet on mobile devices, it is essential that websites are optimized for mobile. This will not only help to improve the user experience but also ensure that the website is being crawled and indexed correctly by search engines.

Lastly, it is important to use internal linking effectively. Internal linking helps search engines understand the structure and hierarchy of the website, making it easier for them to crawl and index the pages. By linking to important pages on the website, it is possible to ensure that these pages are being crawled and indexed more frequently, which makes better use of the crawl budget.
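One way to check how well internal linking supports crawling is to measure click depth: the minimum number of links a crawler must follow from the home page to reach each page. The Python sketch below runs a breadth-first search over a link graph, assumed to come from your own crawl of the site (the function names and sample paths are hypothetical), and also lists orphan pages that exist but cannot be reached by following links.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search over an internal link graph.

    links maps each page path to the list of page paths it links to.
    Returns {page: minimum number of clicks from start}.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(all_pages, depths):
    """Pages that exist (for example, listed in a sitemap) but are unreachable by links."""
    return sorted(set(all_pages) - set(depths))


links = {
    "/": ["/shop", "/blog"],
    "/shop": ["/shop/shoes"],
    "/shop/shoes": ["/shop/shoes/red"],
    "/blog": [],
}
depths = click_depths(links)
```

Pages that sit many clicks deep, or that appear only in the sitemap, are good candidates for more prominent internal links.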

In conclusion, managing crawl budget on a large website can be a challenging task, but with the right strategy and best practices in place, it can be done effectively. By ensuring that the website's structure is clear and easy to navigate, keeping the content fresh and up-to-date, monitoring the website's crawl stats, making the website mobile-friendly and responsive, and using internal linking effectively, it is possible to ensure that the crawl budget is being used effectively and that the search engines are focusing on the most important and relevant pages on the website.

How Can I Use Robots.txt And Sitemaps To Improve My Website's Crawl Budget?

A crawl budget is the amount of time and resources that search engines allocate to crawling a website. A website's crawl budget can be affected by several factors, such as the number of pages on a site, the frequency of updates, and the quality of the site's structure and content. To make the most of a website's crawl budget, it is important to use tools such as robots.txt and sitemaps.

The robots.txt file is a simple text file that is placed in the root directory of a website. It tells search engines which pages or sections of a site should not be crawled. By using robots.txt, webmasters can prevent search engines from wasting resources on pages that are not important or relevant to the site. For example, a website that generates a large number of duplicate pages, such as internal search results or filtered product listings, can use robots.txt to block search engines from crawling those pages. This helps ensure that search engines spend the crawl budget on the most important and relevant pages on the site. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed, without its content, if other pages link to it. The file is also publicly readable, so it cannot protect pages that are not intended for public viewing; those need authentication instead.
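Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`, which makes it easy to test which URLs a set of rules blocks before deploying them. The rules and URLs below are hypothetical. This parser applies rules in file order and does not support the `*` and `$` wildcards that Google understands, so treat it as a quick sanity check and confirm how Googlebot reads the live file in Search Console's robots.txt report.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of internal search results and a staging area.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /staging/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def crawl_decisions(robots_txt, urls, user_agent="Googlebot"):
    """Return {url: True if user_agent may crawl it under these rules, else False}."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


decisions = crawl_decisions(ROBOTS_TXT, [
    "https://www.example.com/products/blue-shirt",
    "https://www.example.com/search?q=shirt",
    "https://www.example.com/staging/new-home",
])
for url, allowed in decisions.items():
    print(url, "->", "crawl" if allowed else "blocked")
```

Listing the sitemap location in robots.txt, as in this example, is also a simple way to point every crawler to it.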

Sitemaps are another important tool for making good use of a website's crawl budget. A sitemap is a file that lists the pages on a website that should be crawled and can provide information about each page, such as the date it was last updated. By using a sitemap, webmasters can provide search engines with a clear and concise overview of the site's structure and content. This makes it easier for search engines to discover new and updated pages and prioritize which ones to crawl.
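A sitemap can be generated from whatever system knows the site's URLs. This Python sketch builds one with `xml.etree.ElementTree` following the sitemaps.org format, writing only a `loc` and a `lastmod` for each page; the `build_sitemap` name and the input list are assumptions for illustration.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML (UTF-8 bytes) from (absolute_url, last_modified_date) pairs.

    Only live, canonical URLs that return a 200 status should be passed in.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > for us
        # W3C date format (YYYY-MM-DD), as the sitemaps protocol expects.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


print(build_sitemap([("https://www.example.com/", date(2024, 1, 15))]).decode("utf-8"))
```

The `lastmod` value is only useful if it changes when the page content actually changes, so it is best taken from the content system rather than from the time the sitemap is generated.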

XML is the standard sitemap format defined by the sitemaps.org protocol, although search engines also accept simpler formats such as an RSS or Atom feed or a plain text list of URLs. An XML sitemap can carry extra fields for each page, such as its priority and expected change frequency. Google, however, has stated that it ignores these two fields and uses the last-modified date only when it is consistently accurate, so an accurate lastmod value is the most useful signal to maintain.
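On a large website, a single sitemap is usually not enough: the sitemaps.org protocol limits each sitemap file to 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several files listed in a sitemap index. This Python sketch shows the splitting and the index file; the file names and the `chunk` and `build_sitemap_index` helpers are hypothetical, and it splits by URL count only, without checking the size limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol


def chunk(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive lists of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_locations):
    """Build a sitemap index (UTF-8 bytes) that points at each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locations:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = loc
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


# Hypothetical example: 120,000 product URLs become three child sitemaps.
product_urls = [f"https://www.example.com/product/{n}" for n in range(120_000)]
parts = chunk(product_urls)
child_sitemaps = [
    f"https://www.example.com/sitemaps/products-{i}.xml" for i in range(1, len(parts) + 1)
]
index_xml = build_sitemap_index(child_sitemaps)
```

Each chunk is then written out as an ordinary sitemap file at its listed location, and only the index needs to be submitted to search engines.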

Another way to improve a website's crawl budget is to optimize the site's structure and content. This includes ensuring that the site is well-organized and easy to navigate. This makes it easier for search engines to crawl and index the site, and also improves the user experience. Additionally, having high-quality, unique, and informative content can also help to improve a website's crawl budget. Search engines are more likely to crawl and index pages with high-quality content, and this can lead to better visibility in search results.

In summary, robots.txt and sitemaps are two important tools that can be used to improve a website's crawl budget. By using robots.txt to block search engines from crawling irrelevant pages, and by using sitemaps to provide search engines with a clear overview of the site's structure and content, webmasters can ensure that search engines are focusing on the most important and relevant pages. Additionally, optimizing the site's structure and content can also improve a website's crawl budget, leading to better visibility in search results.

How Can I Use Analytics And Webmaster Tools To Measure My Website's Crawl Budget?

Analytics and webmaster tools are essential tools that can be used to measure a website's crawl budget. A crawl budget is the number of URLs that a search engine bot can and wants to crawl on a website within a given period of time. Measuring it shows whether important pages are being crawled often enough and whether crawl requests are being wasted on errors or low-value URLs.

Understanding and managing a website's crawl budget can help to improve its visibility and ranking in search engine results pages (SERPs).

One of the ways to gauge a website's crawl budget is through the use of analytics tools such as Google Analytics, although with an important limitation: Google Analytics measures visits by people and excludes known bots, so it cannot show how many pages search engines crawl. What it can show is how much organic search traffic each page receives, which helps confirm whether crawled and indexed pages are actually earning visits. To see crawler activity directly, website owners can analyze their server access logs, which record every request made by search engine bots, including the URL requested, the response code, and the time of the request.

Another way to measure a website's crawl budget is through the use of webmaster tools such as Google Search Console. Its Page indexing report shows which pages Google has indexed and which it has not, along with the reasons, which can reveal issues that prevent search engines from crawling certain pages, such as broken links or redirects. Its Crawl Stats report shows how many crawl requests Googlebot makes per day, how the server responded, and how long responses took, which can be used to identify technical issues that may be affecting a website's crawl budget.

In addition to these tools, website owners can also use the robots.txt file to manage their crawl budget. The robots.txt file is a simple text file that is placed in the root directory of a website and is used to instruct search engines on which pages they should and should not crawl. This can be useful for preventing search engines from crawling pages that are not relevant to search results, such as duplicate content or test pages.

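Another way to influence crawling is the rel="nofollow" attribute. This attribute can be added to links on a website to indicate to search engines that they should not follow that link, which is appropriate for links such as affiliate links or sponsored content (Google also supports the more specific rel="sponsored" for paid links). However, Google treats nofollow as a hint rather than a directive, and a URL can still be crawled if it is linked from elsewhere, so nofollow is not a reliable way to keep search engines away from a page; robots.txt is the better tool for that.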

Finally, website owners can also use the rel="canonical" link element to manage duplicate content. Added to pages that are similar to other pages on a website, it indicates which version should be indexed. Search engines still have to crawl the duplicate URLs to see the canonical tag, so it does not stop them from being crawled; rather, it consolidates the duplicates into one indexed page. Where duplicate URLs can be avoided altogether, removing or redirecting them saves more crawl budget.
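Before adding canonical tags, it helps to know which URLs are duplicates of each other. This Python sketch groups URL variants by a normalized form that lowercases the scheme and host, drops a trailing slash, sorts query parameters, and removes parameters assumed not to change page content. The `IGNORED_PARAMS` set and the trailing-slash rule are assumptions that must be checked against how a given site actually behaves.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change the content of a page on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url):
    """Reduce URL variants that serve the same content to one comparable form."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # Assumption: the site serves the same page with or without a trailing slash.
    path = parts.path.rstrip("/") or "/"
    # Fragments (#...) are never sent to the server, so they are dropped.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def group_duplicates(urls):
    """Return {normalized_url: [variants]} for every form reached by two or more URLs."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}
```

Each group is a candidate for a single canonical URL, which the other variants can point to with rel="canonical" or reach through a redirect.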

In conclusion, analytics and webmaster tools are essential for measuring a website's crawl budget. Webmaster tools show how often a website is being crawled by search engines and how many of its pages are indexed, while analytics tools show which pages earn search traffic. This information can be used to identify any issues that may be preventing search engines from crawling all of the pages on a website and to make the changes needed to improve its visibility and ranking in search engine results pages (SERPs). Additionally, website owners can use the robots.txt file, the rel="canonical" link element, and, as a weaker hint, the rel="nofollow" attribute to manage their crawl budget and improve their website's search engine optimization.

What Are The Common Mistakes That Can Harm My Website's Crawl Budget?

A crawl budget is the amount of time and resources that a search engine like Google devotes to crawling a website.

It is determined by how much crawling the website's servers can handle and by how much demand there is to crawl its pages, which depends on factors including the size of the website, the number of pages, and the frequency of updates.

One common mistake that can harm a website's crawl budget is having duplicate content. When the same content is reachable at many URLs, for example through URL parameters, session IDs, printer-friendly versions, or faceted navigation, search engines spend requests crawling copies instead of unique pages. To avoid this, website owners should consolidate duplicates with redirects or canonical tags, avoid generating unnecessary URL variants, and publish original content rather than copying text from other sources.
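Duplicate pages can also be found by comparing content rather than URLs. This Python sketch fingerprints each page's extracted text with SHA-256 after collapsing whitespace and case, so it catches only exact duplicates; near-duplicates need fuzzier techniques such as shingling or SimHash. The input mapping of URLs to page text is assumed to come from your own crawl, and the function names are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash page text after collapsing whitespace and case, so trivial
    formatting differences do not hide an exact duplicate."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicate_pages(pages):
    """pages maps URL -> extracted main text; returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Hashing only the main content, rather than the full HTML, avoids missing duplicates that differ just in navigation or tracking markup.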

Another mistake that can harm a website's crawl budget is having a poorly structured website. A confusing navigation structure can leave important pages buried many clicks deep or not linked at all, and broken links send crawlers to dead ends. Search engines then spend their requests on the wrong URLs and may crawl important pages only rarely. To avoid this, website owners should ensure that their website is well-structured and easy to navigate.

A third mistake that can harm a website's crawl budget is having a large number of low-quality pages. Search engines may view these pages as not providing valuable information, and will allocate fewer resources to crawling and indexing them. This can lead to a lower crawl budget and poor search engine rankings. To avoid this, website owners should focus on creating high-quality, relevant content that is likely to be of value to their target audience.

Another common mistake that can harm a website's crawl budget is not properly handling redirects. Each redirect costs an extra request, so long redirect chains, redirect loops, and internal links that point to redirected URLs waste crawl budget, and very long chains may not be followed to the end at all. To avoid this, website owners should use the appropriate redirect codes (such as 301 or 308 for permanent moves), point each redirect straight at the final URL, and update internal links to the destination URL.
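Redirect chains are easy to create when pages move more than once. This Python sketch takes a hypothetical map of old paths to new paths and rewrites it so that every source redirects straight to its final destination, raising an error on loops. The 10-hop limit is an arbitrary safety cutoff chosen for the sketch.

```python
def resolve_redirects(redirects, max_hops=10):
    """Collapse redirect chains so every old URL points straight at its final target.

    redirects maps a source path to the path it currently redirects to.
    Raises ValueError on loops or on chains longer than max_hops.
    """
    flattened = {}
    for source in redirects:
        target = redirects[source]
        seen = {source}
        hops = 1
        while target in redirects:  # the target itself redirects again
            if target in seen or hops >= max_hops:
                raise ValueError(f"redirect loop or overly long chain starting at {source}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        flattened[source] = target
    return flattened
```

The flattened map can be fed back into the server's redirect rules, and the same final targets used to update internal links.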

Lastly, not regularly updating your website can also harm your website's crawl budget. Search engines may view a website that is not updated regularly as not providing valuable information, and will allocate fewer resources to crawling and indexing it. To avoid this, website owners should ensure that they are regularly updating their website with new and relevant content.

In conclusion, there are many common mistakes that can harm a website's crawl budget. These include having duplicate content, a poorly structured website, a large number of low-quality pages, not properly handling redirects, and not regularly updating the website. By avoiding these mistakes, website owners can ensure that their website is crawled and indexed efficiently, leading to better search engine rankings and increased visibility.

How Can I Increase My Website's Crawl Budget By Enhancing Its Content And Structure?

Website crawl budget is a critical metric that determines how often and how deeply search engines crawl and index a website's pages.

A website with a generous crawl budget will have its pages crawled more frequently and more thoroughly, so that new and updated content can reach the index, and search results, sooner.

However, many website owners struggle to increase their crawl budget, which can lead to poor visibility and search engine rankings.

The first step in increasing a website's crawl budget is to enhance its content and structure. Search engines use various signals to determine the relevance and importance of a website's pages, and the quality and relevance of the content on those pages play a major role in this process. Google, for example, has said that the way to increase crawl budget is to increase a server's capacity to handle crawling and, more importantly, to increase the value of the site's content for searchers. Websites with high-quality, relevant, and well-structured content therefore tend to be crawled more than those with low-quality or irrelevant content.

One of the most effective ways to enhance content and structure is to focus on creating high-quality, in-depth, and well-researched articles and blog posts. These types of articles are more likely to be shared and linked to, which will signal to search engines that the content is valuable and relevant. Additionally, it is important to ensure that the content is well-structured, using headings, subheadings, and lists to break up the text and make it more readable.

Another important aspect of content and structure is the use of keywords. Search engines use keywords to understand what a page is about, and pages that use relevant keywords in their content and meta tags are easier to match to search queries, which can make them more valuable to crawl. However, keywords should be used naturally, as overuse or misuse of keywords (keyword stuffing) can result in penalties from search engines.

In addition to enhancing content and structure, it is also important to improve the technical foundation of the website. Technical factors such as page load speed, mobile responsiveness, and the use of structured data all affect how efficiently search engines can process a site. Speed has the most direct effect on crawl budget: when a server responds quickly and reliably, a crawler can fetch more pages in the same amount of time, whereas slow responses or server errors cause search engines such as Google to reduce their crawl rate.
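
Server response time is the technical factor most directly tied to how fast a crawler can move through a site, so it is worth measuring. The sketch below times full HTTP fetches with Python's standard library as a rough approximation of what a crawler experiences. The helper names are hypothetical, and the 500 ms threshold in `slow_pages` is an arbitrary example value, not a limit published by any search engine.

```python
import time
import urllib.error
import urllib.request


def measure_response(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Fetch `url` and return (HTTP status, elapsed milliseconds)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # include body download time, as a crawler would
            status = resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError but still carry a status code.
        status = err.code
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return status, elapsed_ms


def slow_pages(timings: dict[str, float], threshold_ms: float = 500.0) -> list[str]:
    """Return URLs whose measured time exceeds an (arbitrary) threshold."""
    return sorted(url for url, ms in timings.items() if ms > threshold_ms)
```

In practice you would run this against a sample of important URLs at different times of day. The Crawl Stats report in Google Search Console also shows the average response time as measured by Googlebot, which is a useful cross-check.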

Improving a website's internal linking structure is also an effective way to increase crawl budget. Internal links help search engines understand the hierarchy and organization of a website's pages, and a well-structured internal linking structure makes it easier for crawlers to discover and prioritize the most important pages. Additionally, broken links should be fixed promptly: every link that leads to an error page wastes a crawl request that could have gone to real content, and a high number of broken links can signal a poorly maintained site.
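
Two internal-linking problems are easy to check mechanically once you have a crawl of your own site: pages buried many clicks away from the home page (or not linked at all), and links that point at error pages. The sketch below assumes the crawl has already been reduced to a dictionary mapping each URL path to the paths it links to, plus a dictionary of known HTTP status codes; both structures and all function names are hypothetical. It uses breadth-first search, so each page's depth is its shortest click path from the home page.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Shortest number of clicks from `home` to every reachable page (BFS)."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def broken_links(links: dict[str, list[str]], status: dict[str, int]) -> list[tuple[str, str]]:
    """(source, target) pairs whose target returned a 4xx/5xx status.

    Pages with no recorded status are assumed to be fine.
    """
    return [
        (src, dst)
        for src, targets in links.items()
        for dst in targets
        if status.get(dst, 200) >= 400
    ]


def orphan_pages(links: dict[str, list[str]], known_pages: list[str], home: str = "/") -> list[str]:
    """Known pages (e.g. from a sitemap) that no internal link path reaches."""
    reachable = click_depths(links, home)
    return sorted(set(known_pages) - set(reachable))
```

Pages that show up as orphans or sit at a large click depth are candidates for additional internal links, and broken links should be updated to point at the live URL or removed.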

Finally, it is important to monitor and analyze the website's crawl budget using tools such as the Crawl Stats report in Google Search Console. This provides insights into how often and how deeply search engines are crawling the website's pages and helps identify issues or areas for improvement. By monitoring and analyzing the crawl budget, website owners can make data-driven decisions about how to improve the website's content and structure, and increase the crawl budget over time.
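
Search Console reports crawl activity in aggregate, while server access logs show the same activity request by request. The sketch below counts Googlebot requests per day and per status code, assuming logs in the common Apache/Nginx "combined" format; the regular expression and function name are illustrative, not part of any tool. Matching on the user-agent string is a simplification: the header can be spoofed, and Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter
from datetime import datetime

# Combined log format: host ident user [time] "request" status bytes "referer" "agent"
LOG_RE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Return (requests per day, requests per status) for Googlebot user agents."""
    per_day: Counter = Counter()
    per_status: Counter = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip malformed lines and non-Googlebot traffic
        # %b expects English month abbreviations (default C locale).
        ts = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        per_day[ts.date().isoformat()] += 1
        per_status[match.group("status")] += 1
    return per_day, per_status
```

A falling daily count, or a growing share of 404 and 5xx responses, is an early sign that crawl requests are being lost or wasted.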

In conclusion, increasing a website's crawl budget is critical for improving search engine rankings and visibility. By enhancing the website's content and structure, improving technical factors, and monitoring and analyzing the crawl budget, website owners can take steps to increase their crawl budget and improve their search engine rankings over time.

Modeling Crawl Budget With Market Brew

Market Brew's AI SEO software platform is a powerful tool for optimizing crawl budget.

By utilizing its search engine models, users can gain a deep understanding of what a search engine sees when it crawls their website.

This allows them to make informed decisions about how to shape the link flow distribution of their site, which in turn can help to optimize crawl budget.

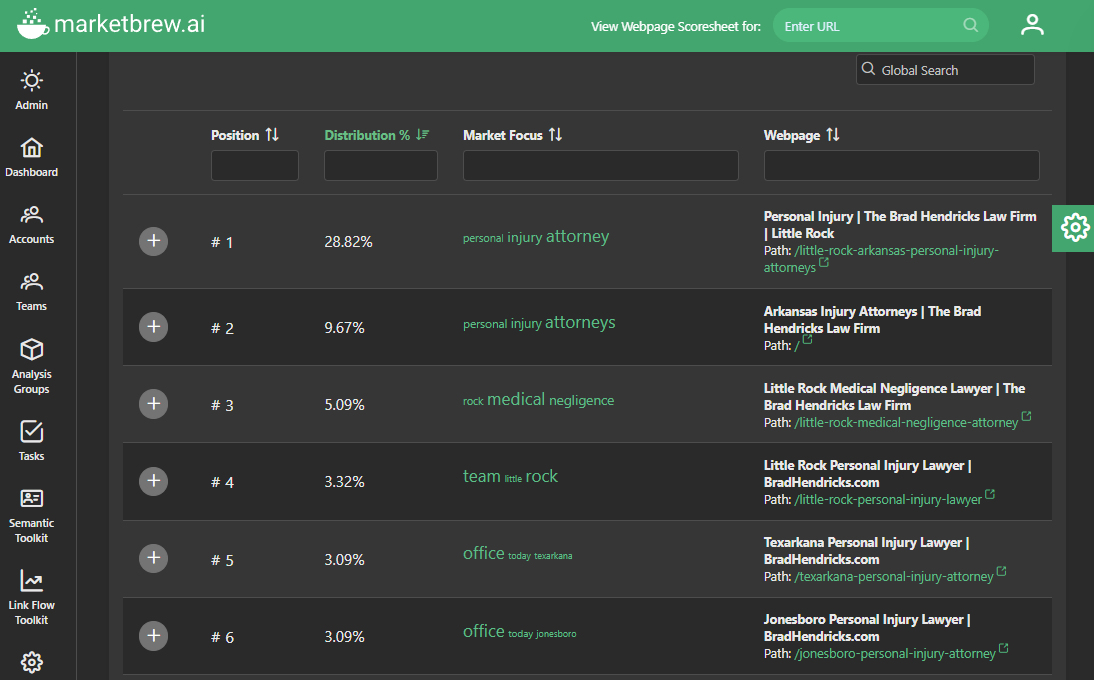

One of the key features of Market Brew's software is its Link Flow Distribution screen. This screen allows users to see the distribution of link flow throughout their site, which can be a powerful indicator of how search engines are prioritizing different pages. For example, if a site has a very flat link flow distribution, it may be harder for search engines to determine the most important parts of the site and which parts to prioritize within a crawl budget.

To optimize crawl budget, users can use the Link Flow Distribution screen to identify areas of the site that are not receiving enough link flow and make adjustments accordingly.

For example, if a blog post is not receiving as much link flow as other pages on the site, users can add more internal links to that post to increase its visibility and make it more attractive to search engines. Additionally, users can also use the screen to identify pages that are receiving too much link flow, which may be causing search engines to spend too much time crawling those pages and not enough time crawling other parts of the site.
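
This article does not describe how Market Brew computes link flow, so the sketch below is not its algorithm. As a hypothetical stand-in, it uses a classic PageRank-style iteration to show the underlying idea on a toy internal link graph: pages that receive more internal links, from better-linked pages, end up with a larger share of link flow. The graph format, the damping factor of 0.85, the fixed iteration count, and the `concentration` measure are assumptions chosen for illustration.

```python
def link_flow(links: dict[str, list[str]], damping: float = 0.85,
              iterations: int = 50) -> dict[str, float]:
    """PageRank-style link flow over an internal link graph (scores sum to 1)."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    score = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page keeps a small baseline share...
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # ...and passes the rest of its score evenly to the pages it links to.
                share = damping * score[page] / len(targets)
                for target in targets:
                    new[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                for p in pages:
                    new[p] += damping * score[page] / n
        score = new
    return score


def concentration(scores: dict[str, float]) -> float:
    """Top page's score divided by the mean score; near 1 means a flat distribution."""
    return max(scores.values()) * len(scores) / sum(scores.values())


if __name__ == "__main__":
    site = {
        "/": ["/blog", "/about", "/services"],
        "/blog": ["/", "/blog/post-1"],
        "/about": ["/"],
        "/services": ["/"],
        "/blog/post-1": ["/"],
    }
    for page, value in sorted(link_flow(site).items(), key=lambda kv: -kv[1]):
        print(f"{page:15} {value:.3f}")
```

A `concentration` value close to 1 corresponds to the very flat distribution described above, where no page stands out; adding internal links to an under-linked page such as `/blog/post-1` in the toy graph raises its share.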

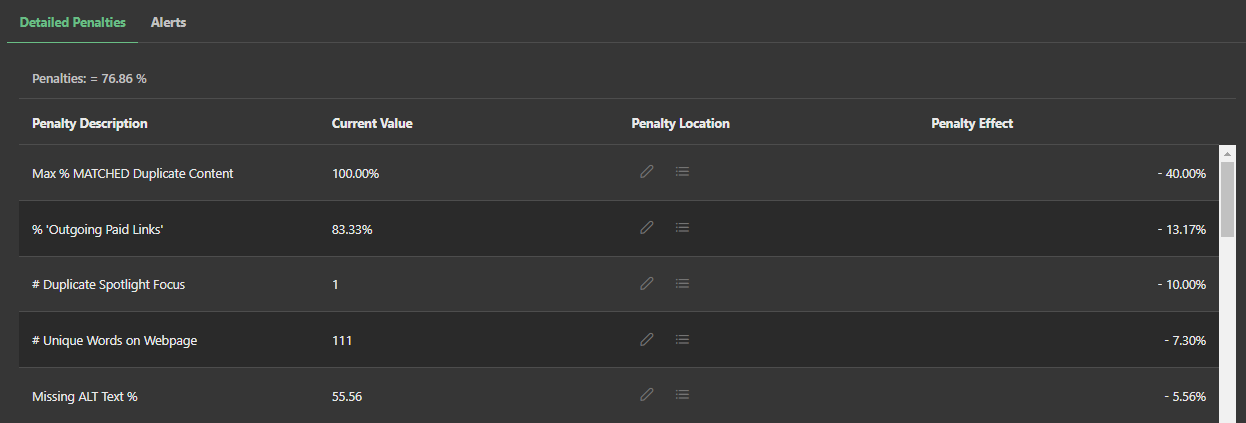

Another important feature of Market Brew's software is its ability to calculate link metrics at a much finer granularity than traditional SEO tools.

This first-principles approach means that its link graph is one of the most precise link graphs of any tool. It lets users see the exact links that are affecting their site's crawl budget, which can be extremely useful when trying to identify and address any issues.

Overall, Market Brew's SEO testing platform is a powerful tool for optimizing crawl budget. Its search engine models show users what a search engine sees when it crawls their website, so they can make informed decisions about how to shape the link flow distribution of their site.

Combined with its fine-grained calculations and its link analysis and site structure screens, this makes it an essential tool for any website owner looking to optimize their crawl budget and improve their search engine visibility.

Ready to Take Control of Your SEO?

See how Market Brew's predictive SEO models and expert team can unlock new opportunities for your site. Get tailored insights on how we can help your business rise above the competition.

Schedule a demonstration today via our Menu Button and Contact Form to discover how we engineer SEO success.